I have a rather complex network, way too complex to post. After I call loss.backward(), all my gradients become 0.0, including the last one before the loss function. What could be the reason? Thank you.

Without seeing the details, my guess is that the gradient of the loss is zero at most points. I answered a similar question on Stack Overflow. Maybe part of your math is done with discrete types or operations? Check how you're calculating the loss.
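To illustrate the point about discrete operations, here is a minimal sketch. The tensor `w` and its values are made up for illustration and are not taken from the question. As far as I know, autograd treats `torch.round` as piecewise constant, so the gradient flowing back through it is all zeros, while a smooth loss on the same tensor gives non-zero gradients.

```python
import torch

# Hypothetical toy parameter, not from the original post.
w = torch.tensor([0.3, 1.7, -2.4], requires_grad=True)

# A loss built on a rounding step: round() is flat almost everywhere,
# so the gradient that reaches w is zero.
loss_discrete = torch.round(w).sum()
loss_discrete.backward()
grad_discrete = w.grad.clone()

# Reset the gradient, then use a smooth loss for comparison.
w.grad = None
loss_smooth = (w ** 2).sum()
loss_smooth.backward()
grad_smooth = w.grad.clone()

print("gradient through round():", grad_discrete)
print("gradient through w ** 2: ", grad_smooth)
```

If your loss passes through a step like this (rounding, thresholding, and similar), that would be one place to look first.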

@alexj

The following are some possible reasons why the gradients might become 0:

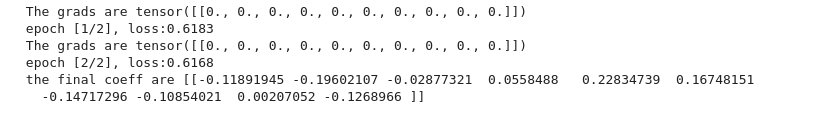

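- The input tensor consists of zeros. In the example below, the weight gradient of the linear layer is then exactly zero (the bias gradient is not):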

```python
import numpy as np
import torch
from torch import nn


class linearRegressionOLS(nn.Module):
    def __init__(self):
        super(linearRegressionOLS, self).__init__()
        self.linearModel = nn.Linear(10, 1)

    def forward(self, x):
        x = self.linearModel(x)
        return x


learning_rate = 0.001
num_epochs = 2

model = linearRegressionOLS()
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# All-zero inputs with random 0/1 targets
X = np.zeros((100, 10)).astype(np.float32)
Y = np.random.randint(2, size=(100)).reshape(100, 1).astype(np.float32)

inputVal = torch.from_numpy(X)
outputVal = torch.from_numpy(Y)

for epoch in range(num_epochs):
    # Clear the gradients left over from the previous step
    optimizer.zero_grad()
    # Forward pass
    dataOutput = model(inputVal)
    # Compute the loss
    loss = criterion(dataOutput, outputVal)
    # Backward pass
    loss.backward()
    # The weight gradient is all zeros because the input is all zeros
    print(model.linearModel.weight.grad)
    optimizer.step()
    if epoch % 1 == 0:
        print('epoch [{}/{}], loss:{:.4f}'.format(epoch + 1, num_epochs, loss.item()))

# Final weights of the linear regression model
coeff = model.linearModel.weight.data.numpy()
print("the final coeff are {}".format(coeff))
```

- By mistake, you are comparing the output of the model with itself when calculating the loss:

```python
loss = criterion(dataOutput, dataOutput)
```
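Both cases can be checked quickly by printing the gradient norm of every parameter after `loss.backward()`. The following is only a rough sketch: the helper `grad_report`, the stand-in `nn.Linear(10, 1)` model, and the random data are my own assumptions for illustration, not something from the original question.

```python
import torch
from torch import nn

torch.manual_seed(0)


def grad_report(model):
    """Return {parameter name: gradient norm} for each parameter that has a gradient."""
    return {
        name: param.grad.norm().item()
        for name, param in model.named_parameters()
        if param.grad is not None
    }


model = nn.Linear(10, 1)        # hypothetical stand-in for the real network
criterion = nn.MSELoss()
targets = torch.rand(100, 1)    # made-up targets

# Case 1: all-zero input. The weight gradient vanishes, the bias gradient does not.
model.zero_grad()
loss = criterion(model(torch.zeros(100, 10)), targets)
loss.backward()
zero_input_report = grad_report(model)

# Case 2: the output is compared with itself, so the loss and every gradient are zero.
model.zero_grad()
output = model(torch.randn(100, 10))
loss = criterion(output, output)
loss.backward()
self_loss_report = grad_report(model)

print("zero input:      ", zero_input_report)
print("loss vs. itself: ", self_loss_report)
```

On a larger network, calling a helper like this right after `loss.backward()` should show which layers receive a zero gradient, which may help narrow down where the signal is lost.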