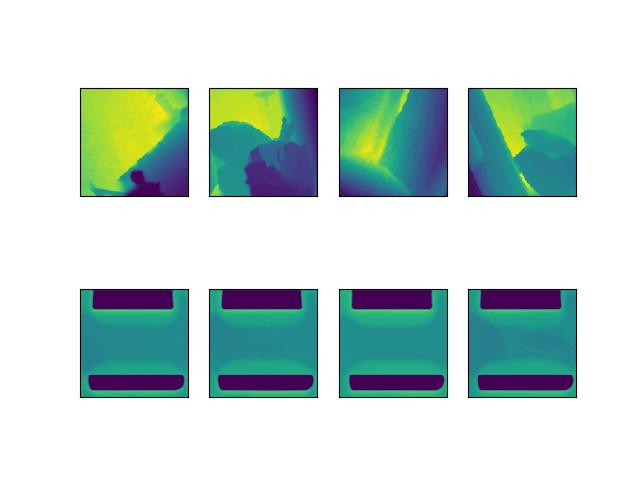

The top row is the ground truth and the bottom row is the model output. I don't know why all of the outputs are the same. In some iterations a few results were slightly different. What should I look out for?

I got good results with DenseNet, so I wanted to try ResNet with skip connections. This is my architecture:

    import math

    import torch.nn as nn
    import torch.nn.functional as F
    from torchvision import models


    def weights_init(m):
        # Initialize kernel weights with Gaussian distributions
        if isinstance(m, nn.Conv2d):
            n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
            m.weight.data.normal_(0, math.sqrt(2. / n))
            if m.bias is not None:
                m.bias.data.zero_()
        elif isinstance(m, nn.ConvTranspose2d):
            n = m.kernel_size[0] * m.kernel_size[1] * m.in_channels
            m.weight.data.normal_(0, math.sqrt(2. / n))
            if m.bias is not None:
                m.bias.data.zero_()
        elif isinstance(m, nn.BatchNorm2d):
            m.weight.data.fill_(1)
            m.bias.data.zero_()

    def conv(in_channels, out_channels, kernel_size):
        padding = (kernel_size - 1) // 2
        assert 2 * padding == kernel_size - 1, "parameters incorrect. kernel={}, padding={}".format(kernel_size, padding)
        return nn.Sequential(
            nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=padding, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
        )

    def pointwise(in_channels, out_channels):
        return nn.Sequential(
            nn.Conv2d(in_channels, out_channels, 1, 1, 0, bias=False),
            nn.BatchNorm2d(out_channels),
            nn.ReLU(inplace=True),
        )

    class ResNetSkipAdd(nn.Module):
        def __init__(self, layers, output_size, in_channels=3, pretrained=True):
            if layers not in [18, 34, 50, 101, 152]:
                raise RuntimeError('Only 18, 34, 50, 101, and 152 layer model are defined for ResNet. Got {}'.format(layers))

            super(ResNetSkipAdd, self).__init__()
            self.output_size = output_size
            pretrained_model = models.__dict__['resnet{}'.format(layers)](pretrained=pretrained)
            if not pretrained:
                pretrained_model.apply(weights_init)

            if in_channels == 3:
                self.conv1 = pretrained_model._modules['conv1']
                self.bn1 = pretrained_model._modules['bn1']
            else:
                self.conv1 = nn.Conv2d(in_channels, 64, kernel_size=7, stride=2, padding=3, bias=False)
                self.bn1 = nn.BatchNorm2d(64)
                weights_init(self.conv1)
                weights_init(self.bn1)

            self.relu = pretrained_model._modules['relu']
            self.maxpool = pretrained_model._modules['maxpool']
            self.layer1 = pretrained_model._modules['layer1']
            self.layer2 = pretrained_model._modules['layer2']
            self.layer3 = pretrained_model._modules['layer3']
            self.layer4 = pretrained_model._modules['layer4']

            # clear memory
            del pretrained_model

            # define number of intermediate channels
            if layers <= 34:
                num_channels = 512
            elif layers >= 50:
                num_channels = 2048
            self.conv2 = nn.Conv2d(num_channels, 1024, 1)
            weights_init(self.conv2)

            kernel_size = 5
            self.decode_conv1 = conv(1024, 1024, kernel_size)
            self.decode_conv2 = conv(1024, 512, kernel_size)
            self.decode_conv3 = conv(512, 256, kernel_size)
            self.decode_conv4 = conv(256, 128, kernel_size)
            self.decode_conv4b = conv(128, 64, kernel_size)
            self.decode_conv5 = conv(64, 32, kernel_size)
            self.decode_conv6 = pointwise(32, 1)
            self.upSample = nn.Upsample(scale_factor=2, mode='nearest')

            # NOTE: weights_init only matches Conv2d, ConvTranspose2d and BatchNorm2d,
            # so calling it directly on an nn.Sequential does not touch its children;
            # module.apply(weights_init) would. decode_conv4b is not passed to it here.
            weights_init(self.decode_conv1)
            weights_init(self.decode_conv2)
            weights_init(self.decode_conv3)
            weights_init(self.decode_conv4)
            weights_init(self.decode_conv5)
            weights_init(self.decode_conv6)

        def forward(self, x):
            # resnet
            x = self.conv1(x)
            x = self.bn1(x)
            x1 = self.relu(x)
            # print("x1", x1.size())
            x2 = self.maxpool(x1)
            # print("x2", x2.size())
            x3 = self.layer1(x2)
            # print("x3", x3.size())
            x4 = self.layer2(x3)
            # print("x4", x4.size())
            x5 = self.layer3(x4)
            # print("x5", x5.size())
            x6 = self.layer4(x5)
            # print("x6", x6.size())
            x7 = self.conv2(x6)
            # print("x7", x7.size())

            # decoder
            y10 = self.decode_conv1(x7)
            # print("y10", y10.size())
            y10 = self.upSample(y10)
            y9 = F.interpolate(y10 + x5, scale_factor=2, mode='nearest')
            # print("y9", y9.size())
            y8 = self.decode_conv2(y9)
            # print("y8", y8.size())
            y7 = F.interpolate(y8 + x4, scale_factor=2, mode='nearest')
            # print("y7", y7.size())
            y6 = self.decode_conv3(y7)
            # print("y6", y6.size())
            y5 = F.interpolate(y6 + x3, scale_factor=2, mode='nearest')
            # print("y5", y5.size())
            y4 = self.decode_conv4(y5)
            y4 = self.decode_conv4b(y4)
            # print("y4", y4.size())
            y3 = F.interpolate(y4 + x1, scale_factor=2, mode='nearest')
            # print("y3", y3.size())
            y2 = self.decode_conv5(y3)
            # print("y2", y2.size())
            y1 = F.interpolate(y2, scale_factor=1, mode='nearest')
            # print("y1", y1.size())
            y = self.decode_conv6(y1)
            return y
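
The skip additions in `forward` only work when the decoder tensor and the encoder tensor have the same channel count and spatial size. As a rough aid for checking that, here is a minimal pure-Python sketch that traces those shapes without running the network. It is not part of the model above: `conv_out`, `trace_encoder` and `check_skips` are hypothetical helper names, a square input is assumed, and the channel counts assume the standard torchvision ResNet layout (64/128/256/512 for the 18/34 variants, four times that for 50/101/152).

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a conv/pool layer along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_encoder(layers, size):
    """Return {name: (channels, spatial_size)} for the encoder tensors x1..x7.

    Assumption: standard torchvision ResNet layout, i.e. BasicBlock
    (expansion 1) for 18/34 layers and Bottleneck (expansion 4) otherwise.
    """
    expansion = 1 if layers <= 34 else 4
    shapes = {}
    size = conv_out(size, 7, 2, 3)          # conv1: 7x7, stride 2, padding 3
    shapes["x1"] = (64, size)
    size = conv_out(size, 3, 2, 1)          # maxpool: 3x3, stride 2, padding 1
    shapes["x2"] = (64, size)
    shapes["x3"] = (64 * expansion, size)   # layer1 keeps the resolution
    for name, width in (("x4", 128), ("x5", 256), ("x6", 512)):
        size = conv_out(size, 3, 2, 1)      # layer2..layer4 each halve it
        shapes[name] = (width * expansion, size)
    shapes["x7"] = (1024, size)             # conv2: 1x1 conv to 1024 channels
    return shapes


def check_skips(layers, size):
    """List the skip additions whose (channels, size) do not match."""
    enc = trace_encoder(layers, size)
    dec_size = enc["x7"][1]
    mismatches = []
    # (decoder channels at the addition, encoder tensor that is added)
    for channels, skip in ((1024, "x5"), (512, "x4"), (256, "x3"), (64, "x1")):
        dec_size *= 2                       # x2 nearest upsampling before each add
        if (channels, dec_size) != enc[skip]:
            mismatches.append((skip, (channels, dec_size), enc[skip]))
    return mismatches


if __name__ == "__main__":
    for depth in (18, 50):
        print(depth, check_skips(depth, 224))
```

Under these assumptions a 224-pixel input lines up at every addition for ResNet-50, while ResNet-18 mismatches on channel count at three of the four additions. The commented-out `print` calls in `forward` give the same information from the real tensors.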