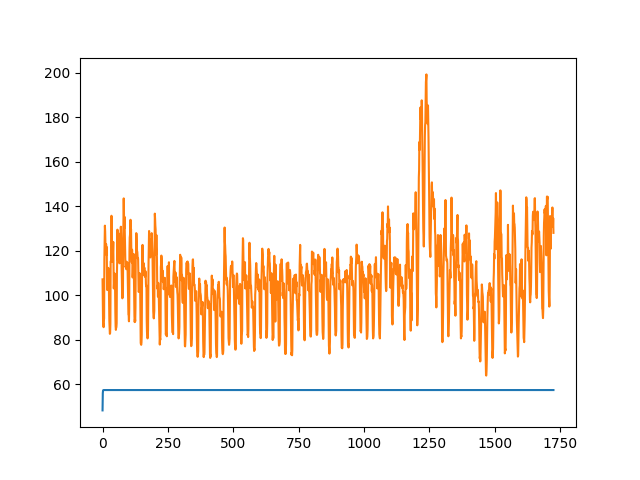

I am using a saved model for inference. When I use it to predict values, the predictions converge very quickly and the majority come out as the same value, even though my input dataset has significant variability. The loss did decrease during training.

I am not sure why this is happening. Any suggestions would be much appreciated!

Here is my code and some sample input data and output data:

    model_state = torch.load(model_path)
    model = LSTMModel(model_state['input_dim'], model_state['hidden_dim'],
                      model_state['num_layers'], model_state['output_dim'])
    model.load_state_dict(model_state['state_dict'])

    for parameter in model.parameters():
        parameter.requires_grad = False

    model.eval()

    num_features = (len(data.columns)-1)
    actual_data = torch.tensor(data[target_col].values).float()
    prediction_data = torch.tensor(data.drop(columns=target_col).values).float()

    actual_data = actual_data.view(-1, 1, 1)
    prediction_data = prediction_data.view(-1, 1, num_features)

    prediction = model(prediction_data)
    prediction = prediction.data.numpy()
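
To put a number on "the majority are predicted as the same value", something like the following could be used. It is only a sketch and not part of the script above: `collapse_report` is a hypothetical helper name, it assumes the predictions have been flattened into a plain list of floats, and the example numbers are made up for illustration rather than taken from my data.

```python
from collections import Counter
from statistics import pstdev


def collapse_report(values, decimals=4):
    """Summarise how concentrated a list of floats is (hypothetical helper)."""
    # Round so that values differing only by float noise count as "the same".
    rounded = [round(float(v), decimals) for v in values]
    most_common_value, count = Counter(rounded).most_common(1)[0]
    return {
        "n": len(rounded),
        "std": pstdev(rounded),  # population standard deviation
        "most_common": most_common_value,
        "share_most_common": count / len(rounded),
    }


# Made-up numbers purely for illustration.
example_predictions = [0.5, 0.5, 0.5, 0.5, 0.7]
report = collapse_report(example_predictions)
print(report)
```

With the script above, the same helper could be called as `collapse_report(prediction.ravel().tolist())` and, for comparison, on the target column, to show how much narrower the predicted distribution is than the actual one.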

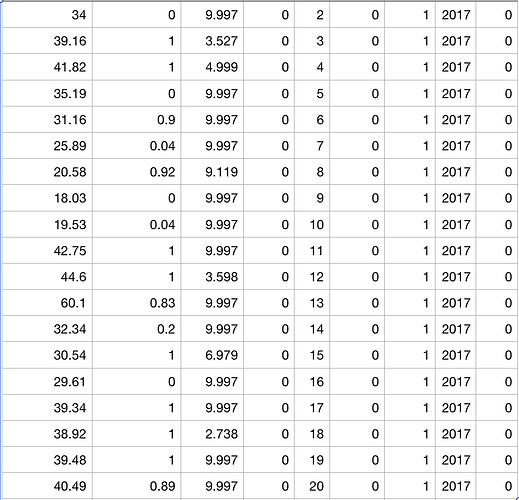

Input Data:

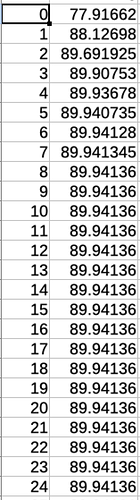

Output predictions: