In my project, I need to load video frames into memory. Through profiling, I observed that `model.forward()` takes approximately 1 second, while loading the data takes about 18 seconds (without multi-processing). Clearly, I need additional workers to read the data in parallel.

I have 2 RTX 4090 GPUs with a total of 70 GB memory for Distributed Data Parallel (DDP) training. The batch size is set to 128, and the dataset used is MSRVTT. The frame rate is only 1, and the maximum number of frames per video is 12. During my testing, I observed that setting `num_workers` greater than 16 for each GPU leads to some workers being killed during training due to excessive memory usage, which terminates the training process.

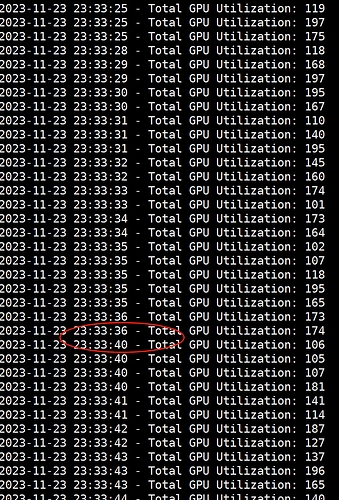

Upon monitoring the training process, I noticed that workers employ a greedy strategy for data loading, prioritizing efficiency without considering memory constraints. Theoretically, we could schedule the workers more evenly, so that the batch data held in memory stays within the range of (1x batch_size, 2x batch_size). This would prevent the fluctuations in CPU work that occur when the GPUs suddenly consume everything the workers have prepared (the 4090 is very fast), and it would also use memory more sensibly. The goal is to avoid the peak memory usage that gets workers killed while keeping the GPU utilization of the original greedy strategy (since the time for each step is roughly fixed, evenly staggered workers should in theory deliver a balanced throughput).

I'm happy to discuss this idea.

This interval is caused by the uneven execution strategy of the workers. It's true that I can add more workers, but they will cause a memory peak.

I don’t fully understand this idea as workers will already use the specified prefetch_factor which would thus limit the overall memory usage. Reduce it, if needed.
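To put rough numbers on that limit: the DataLoader documentation describes `prefetch_factor` as the number of batches loaded in advance by each worker, so up to `prefetch_factor * num_workers` batches are prefetched in total. The sketch below estimates the resulting memory per training process. The frame shape (3x224x224, float32) is an assumption for illustration and is not stated in this thread; the helper names are hypothetical.

```python
def max_prefetched_batches(num_workers: int, prefetch_factor: int) -> int:
    """Upper bound on batches prefetched by one DataLoader."""
    return num_workers * prefetch_factor


def batch_bytes(batch_size: int, frames_per_video: int,
                channels: int = 3, height: int = 224, width: int = 224,
                bytes_per_value: int = 4) -> int:
    """Size of one decoded batch. The default frame shape and dtype
    are assumptions, not values taken from the thread."""
    return (batch_size * frames_per_video * channels
            * height * width * bytes_per_value)


# Settings from the thread: batch size 128, at most 12 frames per video,
# 16 workers per GPU. prefetch_factor=2 is the DataLoader default.
batches = max_prefetched_batches(num_workers=16, prefetch_factor=2)
gib = batches * batch_bytes(batch_size=128, frames_per_video=12) / 2**30
print(f"{batches} batches in flight, about {gib:.1f} GiB per process")
```

With DDP, each process has its own DataLoader, so the host-memory estimate applies once per GPU.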

Thanks for your response. Let me put it simply: `prefetch_factor` can only limit the number of prefetched batches to integer multiples of n, where n equals `num_workers`. If I'm concerned about overall memory usage, I can reduce it to 1. Then, I can set the number of workers equal to the data loading time divided by the average time for a GPU to run one step. This way, I can maintain high GPU utilization.

However, I think it would be better if we could limit the length of the entire prefetch batch queue. On top of the non-redundant batches (those you get with prefetch_factor = 1 and the number of workers chosen as in the previous paragraph), we could add a small number of redundant batches, say 3 to 5, instead of a whole extra `num_workers` batches. I hope the limit on prefetched data can shift from a discrete value to a continuous range, specified in terms of batches.
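As a quick check of that rule, here is a minimal sketch of the calculation, assuming the 18 seconds measured above is the time one worker needs to load one batch and the 1 second is one training step. The function name is hypothetical.

```python
import math


def workers_to_hide_loading(load_seconds_per_batch: float,
                            step_seconds: float) -> int:
    """Smallest worker count whose combined throughput matches the GPU:
    each worker delivers one batch every `load_seconds_per_batch`, and
    the GPU consumes one batch every `step_seconds`."""
    if load_seconds_per_batch <= 0 or step_seconds <= 0:
        raise ValueError("both durations must be positive")
    return math.ceil(load_seconds_per_batch / step_seconds)


num_workers = workers_to_hide_loading(load_seconds_per_batch=18.0,
                                      step_seconds=1.0)
print(num_workers)
```

With `prefetch_factor=1`, this many workers also means this many batches can sit in memory at once, which is the granularity issue described above.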
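To illustrate what I mean, here is a toy sketch using threads and a semaphore. It is not how the DataLoader multiprocessing backend works, and every name in it is hypothetical. It caps the number of batches that are loading or waiting at `max_in_flight`, independently of the worker count, and still hands batches out in index order.

```python
import queue
import threading


def bounded_prefetch(load_batch, num_batches, num_workers, max_in_flight):
    """Yield load_batch(i) for i in range(num_batches), in order, with at
    most `max_in_flight` batches submitted but not yet handed out."""
    slots = threading.Semaphore(max_in_flight)  # global prefetch budget
    tasks = queue.Queue()                       # batch indices to load
    results = {}                                # index -> loaded batch
    ready = threading.Condition()

    def worker():
        while True:
            index = tasks.get()
            if index is None:                   # shutdown sentinel
                return
            batch = load_batch(index)
            with ready:
                results[index] = batch
                ready.notify_all()

    threads = [threading.Thread(target=worker, daemon=True)
               for _ in range(num_workers)]
    for thread in threads:
        thread.start()

    submitted = 0
    for index in range(num_batches):
        # Hand out new work only while the global budget has free slots.
        while submitted < num_batches and slots.acquire(blocking=False):
            tasks.put(submitted)
            submitted += 1
        # Wait for the next batch in index order, not the first finished.
        with ready:
            ready.wait_for(lambda: index in results)
            batch = results.pop(index)
        slots.release()                         # one slot is free again
        yield batch

    for _ in threads:
        tasks.put(None)


# Example: 4 workers, but never more than 6 batches held at once.
batches = list(bounded_prefetch(lambda i: i * i, num_batches=10,
                                num_workers=4, max_in_flight=6))
```

Here the budget (6) is not a multiple of the worker count (4), which is the kind of continuous range I have in mind.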

Right now the data loading order should be deterministic by adding batches to the queue from each worker sequentially. If you now want to add more flexibility by limiting this queue you would still need to guarantee the data loading order as determinism is important for a lot of use cases.

Of course it’s possible, but I don’t know how much complexity this would add into the DataLoader multiprocessing backend. You could create a feature request and discuss it with the code owners on GitHub.

Right, complexity is an important aspect to consider. Thanks for your advice and discussion.