Hello!

I want to deploy a PyTorch model trained with quantization-aware training (QAT), and then convert it to another deep learning inference framework (such as MACE) for deployment on mobile devices.

I am using a model based on https://github.com/pytorch/vision/blob/master/torchvision/models/mobilenet.py

My modifications are:

1: change `bias=False` to `bias=True`

2: change the classifier's `out_features` to 20, because I only have 20 classes.

I use torch.jit to trace my model and save it.
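For reference, here is a minimal sketch of the trace-and-save step. It uses a small hypothetical `TinyNet` as a stand-in for the modified MobileNet (the same conv + BN + ReLU6 pattern with `bias=True`, plus a 20-class classifier). The 1x3x224x224 input size and the temporary file path are assumptions, and quantization-aware training is left out to keep the sketch short.

```python
import os
import tempfile

import torch
import torch.nn as nn


class ConvBNReLU(nn.Sequential):
    """Conv -> BN -> ReLU6 block with bias=True on the conv (modification 1)."""

    def __init__(self, in_ch, out_ch, stride=1):
        super().__init__(
            nn.Conv2d(in_ch, out_ch, kernel_size=3, stride=stride, padding=1, bias=True),
            nn.BatchNorm2d(out_ch),
            nn.ReLU6(inplace=True),
        )


class TinyNet(nn.Module):
    """Hypothetical stand-in for the modified MobileNet, not the real architecture."""

    def __init__(self, num_classes=20):
        super().__init__()
        self.features = nn.Sequential(ConvBNReLU(3, 16, stride=2), ConvBNReLU(16, 32, stride=2))
        self.classifier = nn.Linear(32, num_classes)  # out_features = 20 (modification 2)

    def forward(self, x):
        x = self.features(x)
        x = x.mean([2, 3])  # global average pooling over H and W
        return self.classifier(x)


model = TinyNet().eval()  # trace in eval mode so BN uses its running statistics
example = torch.randn(1, 3, 224, 224)
traced = torch.jit.trace(model, example)

path = os.path.join(tempfile.mkdtemp(), "traced_model.pt")  # hypothetical file name
traced.save(path)
loaded = torch.jit.load(path)
print(loaded.graph)  # inspect the TorchScript graph that gets exported
```

Printing `loaded.graph` is a quick way to check which operations (such as the BN arithmetic) survive in the saved graph.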

The traced model is available in:

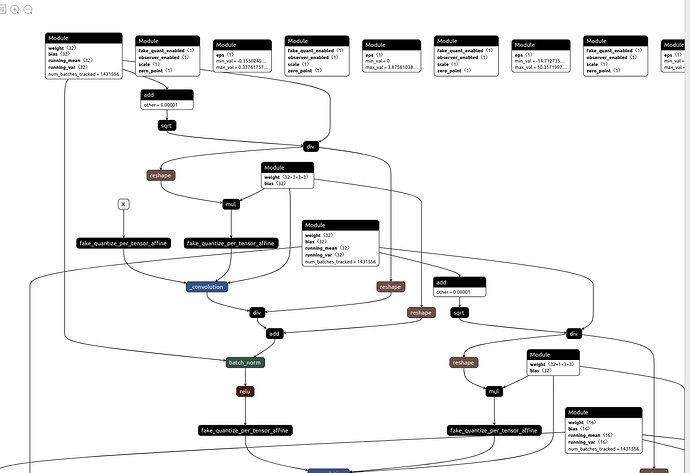

I found that the graph created by the JIT is not optimized:

The constants are not folded, so there are `add`, `sqrt`, `div` and `mul` operations before each conv layer; the conv layer's bias is added twice; and the BN parameters are not folded into the conv layer.
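To make the desired BN fold concrete: for a conv followed by an eval-mode BN with scale $\gamma$, shift $\beta$, running mean $\mu$ and running variance $\sigma^2$, the BN can be absorbed into the conv as $W' = W \cdot \gamma / \sqrt{\sigma^2 + \epsilon}$ and $b' = (b - \mu) \cdot \gamma / \sqrt{\sigma^2 + \epsilon} + \beta$, so the original conv bias ends up merged into a single bias. The sketch below works on plain float modules (no quantization), and `fold_bn_into_conv` is a hypothetical helper, not a PyTorch API. It checks that the folded conv matches conv followed by BN.

```python
import torch
import torch.nn as nn


def fold_bn_into_conv(conv: nn.Conv2d, bn: nn.BatchNorm2d) -> nn.Conv2d:
    """Return a new Conv2d equivalent to bn(conv(x)) when bn is in eval mode."""
    fused = nn.Conv2d(
        conv.in_channels, conv.out_channels, conv.kernel_size,
        stride=conv.stride, padding=conv.padding, dilation=conv.dilation,
        groups=conv.groups, bias=True,
    )
    with torch.no_grad():
        # Per-output-channel factor gamma / sqrt(var + eps): this is the
        # add/sqrt/div/mul chain that stays unfolded in the traced graph.
        scale = bn.weight / torch.sqrt(bn.running_var + bn.eps)
        fused.weight.copy_(conv.weight * scale.reshape(-1, 1, 1, 1))
        conv_bias = conv.bias if conv.bias is not None else torch.zeros_like(bn.running_mean)
        # The conv bias and the BN shift collapse into one bias term.
        fused.bias.copy_((conv_bias - bn.running_mean) * scale + bn.bias)
    return fused


torch.manual_seed(0)
conv = nn.Conv2d(8, 16, kernel_size=3, padding=1, bias=True)
bn = nn.BatchNorm2d(16)
# Give BN non-trivial statistics so the comparison is meaningful.
with torch.no_grad():
    bn.running_mean.uniform_(-1.0, 1.0)
    bn.running_var.uniform_(0.5, 2.0)
    bn.weight.uniform_(0.5, 1.5)
    bn.bias.uniform_(-0.5, 0.5)
conv.eval()
bn.eval()

fused = fold_bn_into_conv(conv, bn).eval()
x = torch.randn(2, 8, 32, 32)
reference = bn(conv(x))
folded = fused(x)
print(torch.allclose(reference, folded, atol=1e-5))
```

After such a fold, the graph would contain one conv with one bias per layer instead of conv + BN arithmetic.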

Are there any suggestions for optimizing a JIT model so that it performs these folds?

Since I want to deploy the model on mobile devices, these folds would be very useful.

Many thanks.