I want to reproduce this tutorial with my own dataset: GitHub - msminhas93/DeepLabv3FineTuning: Tutorial on fine tuning DeepLabv3 segmentation network for your own segmentation task in PyTorch. My picture looks like this, but the image cannot be loaded:

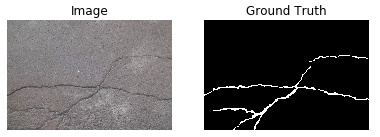

How is an image converted into a mask? Is there a required format?
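For a two-class task such as crack vs. background, a mask is commonly a single-channel image of the same height and width as the input, with one value for background and another for the object. The following is a minimal sketch under that assumption; the function name `to_binary_mask`, the threshold of 127, and the "annotated pixels are bright" convention are illustrative choices, not something taken from the repository, so check them against the sample masks shipped with it.

```python
import numpy as np


def to_binary_mask(annotation: np.ndarray, threshold: int = 127) -> np.ndarray:
    """Turn an annotation image into a single-channel 0/255 mask.

    annotation: H x W (grayscale) or H x W x C (color) uint8 array.
    Assumption: annotated pixels are brighter than `threshold`.
    """
    if annotation.ndim == 3:
        # Collapse color channels: a pixel counts as marked
        # if any of its channels is bright.
        annotation = annotation.max(axis=2)
    return np.where(annotation > threshold, 255, 0).astype(np.uint8)


# Tiny synthetic example: a 4x4 color image with one red annotated stroke.
annotation = np.zeros((4, 4, 3), dtype=np.uint8)
annotation[1, :, 0] = 255  # red line across row 1
mask = to_binary_mask(annotation)
```

The resulting array can be written to disk with PIL, for example `Image.fromarray(mask).save('name.png')`. PNG is lossless, whereas JPEG compression would smear the 0/255 values.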

My dataset and the annotated road crack image database used in the model:

Could the reason be that only 4 images cannot be divided into the train and val datasets in the network?

Hi. To make it work with the VOC segmentation dataset, you need to change the dataloader from that GitHub repository as shown below. Look for the UPDATE markers in the code comments.

```python
import glob
import os

import numpy as np
import torch
import torchvision
from PIL import Image
from torch.utils.data import Dataset


class SegmentationDataset(Dataset):
    """Segmentation Dataset"""

    def __init__(self, root_dir: str, image_dir: str, mask_dir: str,
                 transform=None, seed: int = None, fraction: float = None,
                 subset: str = None, imagecolormode: str = 'rgb',
                 maskcolormode: str = 'rgb'):
        """
        Args:
            root_dir (str): dataset dir path
            image_dir (str): input image dir name
            mask_dir (str): mask image dir name
            transform: PyTorch data transform
            seed (int): random seed for reproducibility
            fraction (float): fraction of the dataset held out for 'Test'
            subset (str): subset from existing dataset ('Train' or 'Test')
            imagecolormode (str): input image color mode
            maskcolormode (str): input mask color mode
        """
        self.color_dict = {'rgb': 1, 'grayscale': 0}
        assert (imagecolormode in ['rgb', 'grayscale'])
        assert (maskcolormode in ['rgb', 'grayscale'])
        self.imagecolorflag = self.color_dict[imagecolormode]
        self.maskcolorflag = self.color_dict[maskcolormode]
        self.root_dir = root_dir
        self.transform = transform

        # UPDATE: Get the segmentation masks before the images
        mask_paths = sorted(
            glob.glob(os.path.join(self.root_dir, mask_dir, '*')))
        # UPDATE: Build each image path from the mask's base name with a
        # '.jpg' extension. os.path.basename replaces fname.split('/')[4],
        # which only worked for one specific folder depth.
        image_paths = [
            os.path.join(self.root_dir, image_dir,
                         os.path.splitext(os.path.basename(fname))[0] + '.jpg')
            for fname in mask_paths]

        if not fraction:
            self.mask_names = mask_paths
            self.image_names = image_paths
        else:
            assert (subset in ['Train', 'Test'])
            self.fraction = fraction
            self.mask_list = np.array(mask_paths)
            self.image_list = np.array(image_paths)
            if seed:
                # Shuffle images and masks with the same permutation
                np.random.seed(seed)
                indices = np.arange(len(self.image_list))
                np.random.shuffle(indices)
                self.image_list = self.image_list[indices]
                self.mask_list = self.mask_list[indices]
            # The first ceil(n * (1 - fraction)) samples go to 'Train'
            split = int(np.ceil(len(self.image_list) * (1 - self.fraction)))
            if subset == 'Train':
                self.image_names = self.image_list[:split]
                self.mask_names = self.mask_list[:split]
            else:
                self.image_names = self.image_list[split:]
                self.mask_names = self.mask_list[split:]

    def __getitem__(self, idx):
        """
        Args:
            idx (int): index of input image

        Returns:
            dict: image and mask image
        """
        img_name = self.image_names[idx]
        mask_name = self.mask_names[idx]
        image = Image.open(img_name)
        mask = Image.open(mask_name)
        sample = {'image': image, 'mask': mask}
        if self.transform:
            sample = self.transform(sample)
        return sample

    def __len__(self):
        """
        Returns: length of dataset
        """
        return len(self.image_names)
```

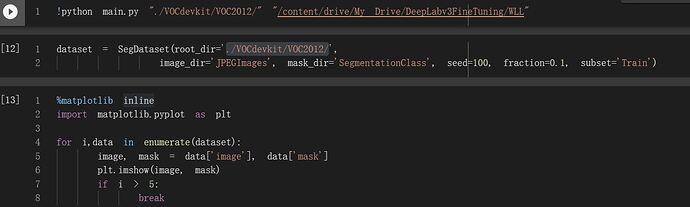

Now you can call the above dataset class as follows:

```python
dataset = SegmentationDataset(root_dir='./VOCdevkit/VOC2012/',
                              image_dir='JPEGImages',
                              mask_dir='SegmentationClass',
                              seed=100, fraction=0.1, subset='Train')
```

and everything should work. You can test the image/mask pairs using the following code:

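```python
import matplotlib.pyplot as plt


def show_image_mask(image, mask):
    # Not defined in this post; a minimal assumed version (side-by-side plot).
    _, (ax_image, ax_mask) = plt.subplots(1, 2)
    ax_image.imshow(image)
    ax_mask.imshow(mask)
    plt.show()


for i, data in enumerate(dataset):
    image, mask = data['image'], data['mask']
    show_image_mask(image, mask)
    if i > 5:
        break
```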
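Pairing only works if every mask file name can be mapped to an existing image file. Here is a small sketch of that mapping using `os.path`, which does not depend on how many folders deep the dataset sits (a fixed index into a `/`-split path would). The helper names and the example file name are hypothetical, chosen only for illustration.

```python
import os


def image_path_for_mask(mask_path: str, image_root: str,
                        image_ext: str = '.jpg') -> str:
    """Map a mask file path to the image path with the same base name."""
    stem = os.path.splitext(os.path.basename(mask_path))[0]
    return os.path.join(image_root, stem + image_ext)


def find_unpaired_masks(mask_paths, image_root, image_ext='.jpg'):
    """Return the masks whose matching image file does not exist on disk."""
    return [m for m in mask_paths
            if not os.path.isfile(
                image_path_for_mask(m, image_root, image_ext))]


# The same image path is produced regardless of the mask folder's depth.
shallow = image_path_for_mask('masks/2007_000032.png', 'images')
deep = image_path_for_mask('/data/a/b/c/masks/2007_000032.png', 'images')
```

Running `find_unpaired_masks` on the globbed mask list before training gives a quick list of masks with no image, which is easier to read than a file-not-found error in the middle of an epoch.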

Hope this helps.

I think the images have loaded, but the dataset has not been split into train and test: training does not start when I run the command, and nothing is logged in log.csv. Can you give me some guidance?
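With very few images, the split itself can leave one subset empty. The class posted above gives 'Train' the first ceil(n * (1 - fraction)) samples and 'Test' the rest. A small sketch of that arithmetic follows; `split_sizes` is a hypothetical helper written for this illustration.

```python
import math


def split_sizes(n_samples: int, fraction: float):
    """Return (n_train, n_test) for the ceil-based split."""
    n_train = int(math.ceil(n_samples * (1 - fraction)))
    return n_train, n_samples - n_train


for fraction in (0.1, 0.2, 0.25, 0.5):
    print(fraction, split_sizes(4, fraction))
# 0.1 (4, 0)
# 0.2 (4, 0)
# 0.25 (3, 1)
# 0.5 (2, 2)
```

So with only 4 images, a fraction of 0.1 or 0.2 leaves the 'Test' subset with 0 samples. Whether that is what stops training here is a guess, but checking `len()` of both subsets is a cheap first test.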

It seems that if I change imageFolder to image_dir in SegDataset, I get a TypeError from __init__(), but SegDataset does not work on its own if I keep imageFolder. I am confused…
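A TypeError from `__init__()` after renaming an argument usually means the keyword names at the call site no longer match the names in the constructor signature. The class below is a hypothetical stand-in used to show the behavior; it is not the repository's SegDataset.

```python
class DatasetWithImageDir:
    """Hypothetical dataset whose constructor takes `image_dir`."""

    def __init__(self, root_dir: str, image_dir: str, mask_dir: str):
        self.root_dir = root_dir
        self.image_dir = image_dir
        self.mask_dir = mask_dir


# Keyword names match the signature: this works.
ok = DatasetWithImageDir(root_dir='data', image_dir='Images',
                         mask_dir='Masks')

# A caller that still uses the old keyword name fails with a TypeError
# before the body of __init__ runs.
try:
    DatasetWithImageDir(root_dir='data', imageFolder='Images',
                        mask_dir='Masks')
    error_message = None
except TypeError as exc:
    error_message = str(exc)

print(error_message)
```

If the class definition is renamed to `image_dir`, every place that constructs it has to pass `image_dir=` as well, and the reverse holds for `imageFolder`. Searching the project for the old keyword name is a quick way to find the call that was missed.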