I was trying to do text matching task which needs to construct an interaction grid S , each element S_ij is a cossim(x_i, y_j) that is S_{ij} = cossim(x_i, y_j).

The x, y are extracted embeddings with:

x.size() = (BatchSize, xLen, emb_dim),

y.size() = (BatchSize, yLen, emb_dim).

To do Batch cossim to obtain a S whose size is (BS, xLen, yLen) (S_{ij}= cossim(x_i, y_j)), I wrote

def cossim(X, Y):

""" calculate the cos similarity between X and Y: cos(X, Y)

X: tensor (BS, x_len, hidden_size)

Y: tensor (BS, y_len, hidden_size)

returns: (BS, x_len, y_len)

"""

X_norm = torch.sqrt(torch.sum(X ** 2, dim=2)).unsqueeze(2) # (BS, x_len, 1)

Y_norm = torch.sqrt(torch.sum(Y ** 2, dim=2)).unsqueeze(1) # (BS, 1, y_len)

S = torch.bmm(X, Y.transpose(1,2)) / (X_norm * Y_norm + 1e-5)

return S

I know that cossim at (0, 0) is not differentiable, so I initialized the padding embedding to be a random vector (non zero)

In the input x, and y (x, y are packed into Variable) ,the last several words are paddings so I must apply mask to filter the padding vector out by:

x_mask = torch.ne(x, 0).unsqueeze(2).float() # since x is packed as Variable, x_mask is also a Variable

y_mask = torch.ne(y, 0).unsqueeze(2).float()

x = x * x_mask

y = y * y_mask

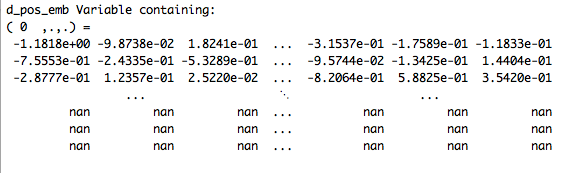

After one back prop the x, y will have some part as NaN

If I don’t apply mask, there will be no NaN problem:

x = ...

y = ...

I detached the x_mask and y_mask by 2 methods to make it as non-learnable parameter so that they won’t mess up the gradients by:

(1)x, y are variables, extract x.data, y.data then generate mask, then pack mask into variable and apply mask

x_mask = torch.ne(x.data, 0).unsqueeze(2).float() # since x is packed as Variable, x_mask is also a Variable

y_mask = torch.ne(y.data, 0).unsqueeze(2).float()

x_mask = Variable(x_mask, requires_grad=False)

y_mask = Variable(y_mask, requires_grad=False)

x = x * x_mask

y = y * y_mask

(2)x, y are variables, use x_mask = x_mask.detach()

x_mask = torch.ne(x, 0).unsqueeze(2).float() # since x is packed as Variable, x_mask is also a Variable

y_mask = torch.ne(y, 0).unsqueeze(2).float()

x_mask = x_mask.detach()

y_mask = x_mask.detach()

x = x * x_mask

y = y * y_mask

But no use, they both returned NaN in x, and y after one backprop

When I removed the multiplication of the mask, everything is ok.

Is there a problem of Cossim or the mask? How can I achieve this?

Thanks