I am trying to apply the following transformations to a training image and its bounding boxes:

    import torch
    from torchvision.transforms import v2

    t = v2.Compose(
        [
            v2.ToImage(),
            v2.RandomHorizontalFlip(),
            v2.RandomVerticalFlip(),
            v2.Resize((448, 448)),
            v2.ToDtype(torch.float32, scale=True),
            v2.SanitizeBoundingBoxes()
        ]
    )

    # Apply transformations
    if self.transforms:
        # The coordinates are relative to 1,
        # scale them according to the image height and width
        # As this is required by the transforms
        print(box_coords)
        box_coords[:, [0, 2]] *= img_w
        box_coords[:, [1, 3]] *= img_h
        print(box_coords)
        img, box_coords = self.transforms(img, box_coords)
        print(box_coords)
        # Change the coordinates back to relative to 1 value
        box_coords[:, [0, 2]] /= img_w
        box_coords[:, [1, 3]] /= img_h
        print(box_coords)

However, I am not getting the correct bounding boxes after the transformation.

Here is the output of the print statements that I added for debugging:

    BoundingBoxes([[0.4040, 0.4230, 0.3707, 0.1140],
                   [0.3907, 0.4840, 0.2320, 0.1760],
                   [0.2293, 0.5170, 0.3573, 0.2660],
                   [0.6773, 0.6340, 0.3147, 0.4520]], format=BoundingBoxFormat.CXCYWH, canvas_size=(500, 375))
    BoundingBoxes([[151.5000, 211.5000, 139.0000,  57.0000],
                   [146.5000, 242.0000,  87.0000,  88.0000],
                   [ 86.0000, 258.5000, 134.0000, 133.0000],
                   [254.0000, 317.0000, 118.0000, 226.0000]], format=BoundingBoxFormat.CXCYWH, canvas_size=(500, 375))
    BoundingBoxes([[180.9920, 189.5040, 166.0587,  51.0720],
                   [175.0187, 216.8320, 103.9360,  78.8480],
                   [102.7413, 231.6160, 160.0853, 119.1680],
                   [303.4453, 284.0320, 140.9707, 202.4960]], format=BoundingBoxFormat.CXCYWH, canvas_size=(448, 448))
    BoundingBoxes([[0.4826, 0.3790, 0.4428, 0.1021],
                   [0.4667, 0.4337, 0.2772, 0.1577],
                   [0.2740, 0.4632, 0.4269, 0.2383],
                   [0.8092, 0.5681, 0.3759, 0.4050]], format=BoundingBoxFormat.CXCYWH, canvas_size=(448, 448))
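To make these numbers easier to follow, here is a minimal plain-Python sketch of the arithmetic for the first box (no torchvision involved). It assumes that `canvas_size` is `(height, width)`, that neither random flip fired for this sample, and that `Resize` scales box coordinates by the ratio of new to old size. `scale_box` is a hypothetical helper written for this check, not a torchvision function.

```python
# Plain-Python check of the printed values for the first box (CXCYWH format).
# Assumption: canvas_size is (height, width), so the original image is 500 x 375.
orig_h, orig_w = 500, 375
new_h, new_w = 448, 448


def scale_box(box, sx, sy):
    """Hypothetical helper: scale x-like entries by sx and y-like entries by sy."""
    cx, cy, w, h = box
    return (cx * sx, cy * sy, w * sx, h * sy)


# First box from the second printout (pixel coordinates on the original canvas).
px_box = (151.5, 211.5, 139.0, 57.0)

# Assumed effect of Resize((448, 448)) on the box: scale by new size / old size.
resized_box = scale_box(px_box, new_w / orig_w, new_h / orig_h)

# What the posted code does afterwards: divide by the ORIGINAL width and height.
rel_by_original = scale_box(resized_box, 1 / orig_w, 1 / orig_h)

# Alternative for comparison: divide by the RESIZED canvas width and height.
rel_by_resized = scale_box(resized_box, 1 / new_w, 1 / new_h)

print([round(v, 4) for v in resized_box])
print([round(v, 4) for v in rel_by_original])
print([round(v, 4) for v in rel_by_resized])
```

Under those assumptions, `resized_box` agrees with the third printout, `rel_by_original` agrees with the fourth printout, and `rel_by_resized` agrees with the relative values in the first printout. That suggests the final division may need the resized canvas size (448 x 448) rather than `img_w` and `img_h`, though I have not confirmed that this is the whole story.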

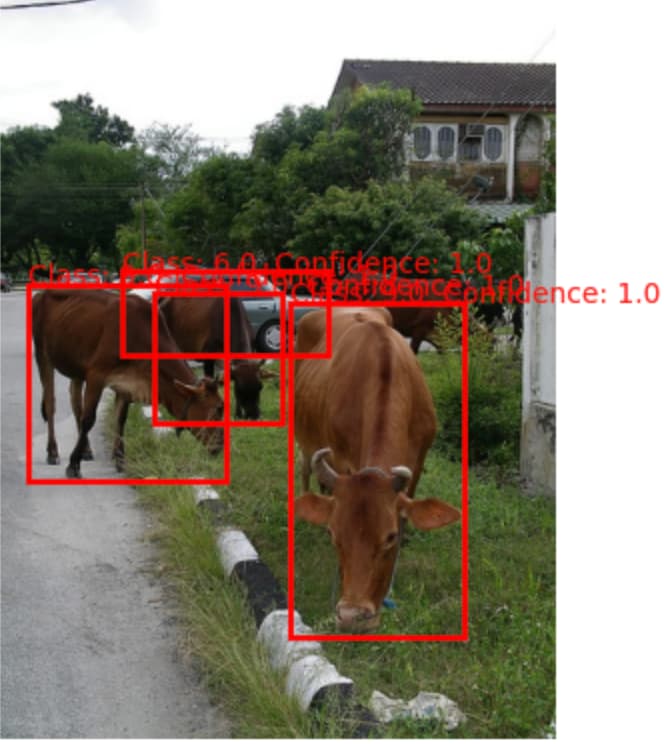

Original image with the bounding boxes:

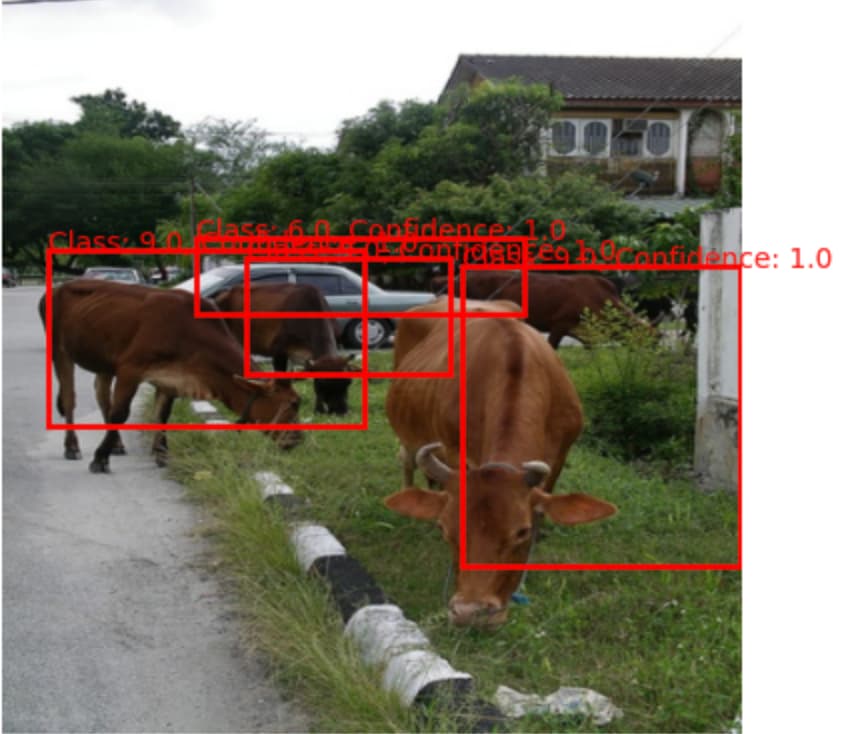

Output after transformation: