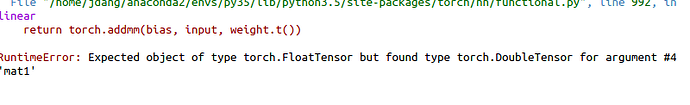

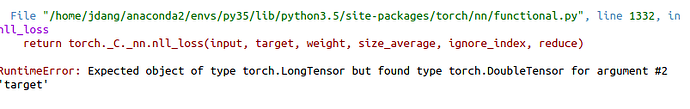

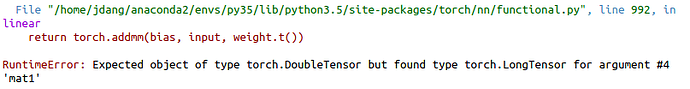

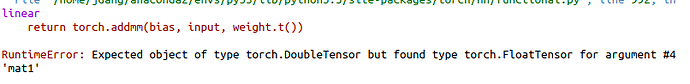

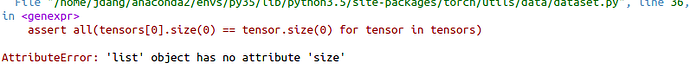

Hello, I am new to using PyTorch to build neural networks. Recently, I have been trying to wrap my data using data_utils.TensorDataset, but the following error appeared. I do convert the data to tensors in my code and put them into a Variable. Unfortunately, that didn't work at all. Are you familiar with this problem? I would really appreciate your comments. Here is the code and the error information.

y_vals_Valence = np.array([0 if each == 'Neg' else 1 if each == 'Neu' else 2 for each in data['all_data'][:, 320]])

y_vals_Arousal = np.array([3 if each == 'Pas' else 4 if each == 'Neu' else 5 for each in data['all_data'][:, 321]])

DEAP_x_train = x_vals[:-256] #using 80% of whole data for training

DEAP_x_train_torch = DEAP_x_train.astype(float)

DEAP_x_train_torch = torch.from_numpy(DEAP_x_train_torch).double()

DEAP_x_test = x_vals[-256:] #using 20% of whole data for testing

DEAP_x_test_torch = DEAP_x_test.astype(float)

DEAP_x_test_torch = torch.from_numpy(DEAP_x_test_torch).double()

DEAP_y_train = y_vals_Valence[:-256]##Valence

DEAP_y_train_torch = DEAP_y_train.astype(float)

DEAP_y_train_torch = torch.from_numpy(DEAP_y_train_torch).double()

DEAP_y_test = y_vals_Valence[-256:]

DEAP_y_test_torch = DEAP_y_test.astype(float)

DEAP_y_test_torch = torch.from_numpy(DEAP_y_test_torch).double()

DEAP_y_train_A = y_vals_Arousal[:-256] ### Arousal

DEAP_y_train_torch_A = DEAP_y_train_A.astype(float)

DEAP_y_train_torch_A = torch.from_numpy(DEAP_y_train_torch_A).double()

DEAP_y_test_A = y_vals_Arousal[-256:]

DEAP_y_test_torch_A = DEAP_y_test_A.astype(float)

DEAP_y_test_torch_A = torch.from_numpy(DEAP_y_test_torch_A).double()

batch_size = 50

# Data Loader (Input Pipeline)

train = data_utils.TensorDataset(DEAP_x_train_torch, [DEAP_y_train_torch]) ##()

train_loader = data_utils.DataLoader(train, batch_size=batch_size, shuffle=True)

test = data_utils.TensorDataset(DEAP_x_test_torch, [DEAP_y_test_torch])

test_loader = data_utils.DataLoader(test, batch_size=batch_size, shuffle=False)
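For reference, here is a minimal sketch of the same pipeline on synthetic data, since the real arrays are not shown. The array shapes (1280 rows, 320 features) and the random values are assumptions made up for illustration. The only intended difference from the code above is that the label tensor is passed to `TensorDataset` directly instead of inside a Python list. `TensorDataset` expects tensors as arguments, so wrapping the labels in `[...]` is a likely cause of the error, though that is a guess without the full traceback.

```python
import numpy as np
import torch
import torch.utils.data as data_utils

# Synthetic stand-ins for the real arrays (shapes and values are assumptions).
rng = np.random.default_rng(0)
x_vals = rng.normal(size=(1280, 320))
y_vals_Valence = rng.integers(0, 3, size=1280)

# Same split as in the post: the last 256 rows are held out for testing.
x_train = torch.from_numpy(x_vals[:-256].astype(float)).double()
y_train = torch.from_numpy(y_vals_Valence[:-256].astype(float)).double()

# Pass the label tensor itself, not a list that wraps it.
train = data_utils.TensorDataset(x_train, y_train)
train_loader = data_utils.DataLoader(train, batch_size=50, shuffle=True)

# Pull one batch to confirm that features and labels line up.
xb, yb = next(iter(train_loader))
print(xb.shape, yb.shape)
```

If the labels are later fed to a classification loss such as `torch.nn.CrossEntropyLoss`, they would probably need to be integer (`long`) tensors rather than doubles, but that depends on the model, which is not shown here.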