Hi, all

Recently I replaced the CPU and motherboard of my PC. Since then, my training code fails with the error below, even though I haven't changed anything in my scripts. So I'm wondering whether it's caused by the new CPU (Ryzen 7 3800XT).

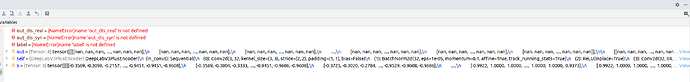

The traceback (the part after "Traceback (most recent call last):" below) changes every time: sometimes it points at a Conv layer, sometimes at BatchNorm. The assertion error, however, appears every time.
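For what it's worth, CUDA kernels run asynchronously, so a device-side assert is usually reported at whichever later operation next synchronizes with the GPU, which would explain why the traceback moves around. A minimal sketch of forcing synchronous launches so the traceback points at the kernel that actually failed (`CUDA_LAUNCH_BLOCKING` is a standard CUDA environment variable; putting this at the very top of `train.py` is an assumption about my setup, and exporting it in the shell, e.g. `CUDA_LAUNCH_BLOCKING=1 python train.py`, should have the same effect):

```python
import os

# CUDA launches are asynchronous, so an assert raised inside a kernel can be
# reported at a later, unrelated call (Conv, BatchNorm, zero_grad, ...).
# Forcing synchronous launches makes errors surface at the failing kernel.
# The driver reads this when the CUDA context is created, so set it before
# importing torch or touching the GPU in this process.
os.environ["CUDA_LAUNCH_BLOCKING"] = "1"

# import torch  # import torch and start training only after this point
```

This slows training noticeably, so it is only meant for a debugging run.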

I have been trying to fix it myself for several days, but I can't find much information about this error. If anyone can help, I would really appreciate it.

```
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [0,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [1,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [2,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [3,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [4,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [5,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [6,0,0] Assertion input_val >= zero && input_val <= one failed.
/opt/conda/conda-bld/pytorch_1607370193460/work/aten/src/ATen/native/cuda/Loss.cu:102: operator(): block: [0,0,0], thread: [7,0,0] Assertion input_val >= zero && input_val <= one failed.

Traceback (most recent call last):
  File "/home/jhyan/Scripts/PYTHON_PROJECT/FPN/train.py", line 52, in <module>
    main()
  File "/home/jhyan/Scripts/PYTHON_PROJECT/FPN/train.py", line 36, in main
    operate.train()
  File "/home/jhyan/Scripts/PYTHON_PROJECT/FPN/operate.py", line 90, in train
    self.opt_encoder.zero_grad()
  File "/home/jhyan/anaconda3/envs/domian-ada/lib/python3.6/site-packages/torch/optim/optimizer.py", line 192, in zero_grad
    p.grad.zero_()
```
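The assertion comes from `aten/src/ATen/native/cuda/Loss.cu`, and its condition requires every loss input to lie in [0, 1]; a NaN also fails it, because any comparison with NaN is false. Assuming the loss involved is binary cross-entropy (`nn.BCELoss` / `F.binary_cross_entropy`, whose CUDA kernel performs this check), here is a minimal sketch of validating the input on the host first, so a bad value raises a readable Python error instead of a device-side assert. `checked_bce` is a hypothetical helper, not part of my original code:

```python
import torch
import torch.nn.functional as F


def checked_bce(pred: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
    """Hypothetical debugging wrapper around F.binary_cross_entropy.

    F.binary_cross_entropy expects probabilities in [0, 1]. On CUDA, a value
    outside that range (or a NaN) trips a device-side assertion; checking
    first turns it into a ValueError with the offending range in the message.
    """
    # NaN/Inf usually come from an exploding loss or a bad input batch.
    if not torch.isfinite(pred).all():
        raise ValueError("loss input contains NaN or Inf")
    # .item() copies to the host, which also synchronizes with the GPU.
    lo, hi = pred.min().item(), pred.max().item()
    if lo < 0.0 or hi > 1.0:
        raise ValueError(f"loss input outside [0, 1]: min={lo:.4g}, max={hi:.4g}")
    return F.binary_cross_entropy(pred, target)
```

Because of the host synchronization, this is only worth keeping during debugging. If the network applies `sigmoid` right before the loss, `nn.BCEWithLogitsLoss` on the raw logits is the numerically safer alternative, since it takes unbounded logits and has no [0, 1] input constraint.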