Hello All,

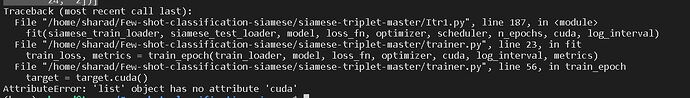

I am getting the error above when I call:

```python
fit(siamese_train_loader, siamese_test_loader, model, loss_fn, optimizer, scheduler, n_epochs, cuda, log_interval)
```

This is the relevant part of the training loop, with `print(target)` added to inspect the target:

```python
for batch_idx, (data, target) in enumerate(train_loader):
    target = target if len(target) > 0 else None
    if not type(data) in (tuple, list):
        data = (data,)
    if cuda:
        data = tuple(d.cuda() for d in data)
        if target is not None:
            print(target)
            target = target.cuda()
```
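If `target` can arrive as a list or tuple of tensors instead of a single tensor, the `.cuda()` call in the loop above fails, because Python lists have no `.cuda()` method. A minimal sketch of a hypothetical `to_device` helper (not part of PyTorch or of the `fit` code in this post) that moves a tensor, or tensors nested inside lists, tuples or dicts, to a given device:

```python
from collections.abc import Mapping

import torch


def to_device(obj, device):
    """Recursively move tensors to `device`.

    Hypothetical helper for illustration (not a PyTorch API). Handles a single
    tensor or tensors nested inside lists, tuples and dicts; any other value
    (e.g. an int or a string) is returned unchanged.
    """
    if isinstance(obj, torch.Tensor):
        return obj.to(device)
    if isinstance(obj, Mapping):
        return {key: to_device(value, device) for key, value in obj.items()}
    if isinstance(obj, list):
        return [to_device(item, device) for item in obj]
    if isinstance(obj, tuple):
        return tuple(to_device(item, device) for item in obj)
    return obj


# Example: a target shaped like [images, labels], moved to GPU if one is available.
target = [torch.zeros(2, 3, 4, 4), torch.tensor([24, 23])]
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
target = to_device(target, device)
print([t.device.type for t in target])
```

Inside the loop, `target = to_device(target, device)` could replace `target = target.cuda()`. This only makes the device transfer tolerant of nested batches; if the loss function expects `target` to be a single label tensor, the dataset itself still has to return one.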

The output of `print(target)` is the following list:

```
[tensor([[[[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.1943, -0.4279],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2509, 0.0435],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.2016, -0.2016, -0.0885],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2320, 0.2132, 0.2320],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.1943, -0.4279],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2509, 0.0435],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.2016, -0.2016, -0.0885],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2320, 0.2132, 0.2320],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.1943, -0.4279],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2509, 0.0435],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.2016, -0.2016, -0.0885],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2320, 0.2132, 0.2320],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]]],

         [[[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]]],

         [[[ 0.2697, 0.2697, 0.2697, ..., -0.2393, -0.3148, -0.2959],
           [ 0.2697, 0.2697, 0.2697, ..., 0.1754, 0.1377, 0.1566],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.2582, -0.3713, -0.3336],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., -0.2393, -0.3148, -0.2959],
           [ 0.2697, 0.2697, 0.2697, ..., 0.1754, 0.1377, 0.1566],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.2582, -0.3713, -0.3336],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., -0.2393, -0.3148, -0.2959],
           [ 0.2697, 0.2697, 0.2697, ..., 0.1754, 0.1377, 0.1566],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.2582, -0.3713, -0.3336],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]]],

         ...,

         [[[ 0.0058, -0.0885, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [-0.3148, -0.5410, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.1639, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., -0.3148, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., -0.8615, 0.2132, 0.2697]],

          [[ 0.0058, -0.0885, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [-0.3148, -0.5410, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.1639, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., -0.3148, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., -0.8615, 0.2132, 0.2697]],

          [[ 0.0058, -0.0885, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [-0.3148, -0.5410, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., -0.1639, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., -0.3148, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., -0.8615, 0.2132, 0.2697]]],

         [[[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]]],

         [[[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]],

          [[ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           ...,
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697],
           [ 0.2697, 0.2697, 0.2697, ..., 0.2697, 0.2697, 0.2697]]]]),
 tensor([24, 23, 12, 21, 7, 3, 24, 3, 14, 4, 10, 3, 11, 9, 15, 20, 14, 11,
         1, 11, 16, 2, 16, 26, 22, 10, 0, 17, 25, 0, 11, 11, 0, 5, 8, 13,
         20, 2, 26, 22, 19, 5, 6, 6, 10, 26, 2, 2, 1, 10, 11, 3, 17, 5,
         21, 6, 5, 19, 26, 1, 4, 5, 19, 12, 2, 25, 21, 18, 17, 4, 15, 20,
         24, 20, 5, 2, 23, 26, 11, 18, 14, 14, 0, 26, 20, 6, 19, 19, 12, 6,
         25, 21, 23, 18, 1, 17, 22, 18, 5, 1, 16, 15, 10, 5, 15, 2, 4, 19,
         8, 14, 19, 13, 1, 3, 19, 9, 11, 26, 10, 18, 21, 8, 13, 24, 15, 2,
         24, 2])]
```
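Reading this output, `target` is a Python list holding two tensors: a 4-D image batch (three channel slices per image) and a 1-D tensor of 128 integer labels, with values between 0 and 26 in this batch. Lists have no `.cuda()` method, so `target.cuda()` cannot succeed on this value. Assuming the loaders use PyTorch's default `collate_fn`, one plausible cause is that each dataset sample's second element is itself an `(image, label)` pair, which the collate step turns into exactly this kind of list. The sketch below reproduces both layouts with two hypothetical toy datasets (`PairAsTargetDataset` and `SiamesePairDataset` are illustrative names, not from the original code):

```python
import torch
from torch.utils.data import DataLoader, Dataset


class PairAsTargetDataset(Dataset):
    """Hypothetical dataset whose second item is an (image, label) pair."""

    def __len__(self):
        return 8

    def __getitem__(self, idx):
        img1 = torch.zeros(3, 4, 4)
        img2 = torch.ones(3, 4, 4)
        label = idx % 3
        return img1, (img2, label)


class SiamesePairDataset(Dataset):
    """Hypothetical dataset returning ((img1, img2), target) with an int target."""

    def __len__(self):
        return 8

    def __getitem__(self, idx):
        img1 = torch.zeros(3, 4, 4)
        img2 = torch.ones(3, 4, 4)
        same = idx % 2  # illustrative "similar pair" flag
        return (img1, img2), same


# Default collation turns the per-sample (image, label) tuple into a list.
bad_data, bad_target = next(iter(DataLoader(PairAsTargetDataset(), batch_size=4)))
print(type(bad_target), [t.shape for t in bad_target])

# An int per sample is collated into a single 1-D tensor, which has .cuda().
good_data, good_target = next(iter(DataLoader(SiamesePairDataset(), batch_size=4)))
print(type(good_target), good_target.shape)
```

If the first layout matches what the loaders produce, the fix most likely belongs in the dataset's `__getitem__` rather than in the training loop.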

I tried to convert this list into a NumPy array, but that gives another error:

`ValueError: only one element tensors can be converted to Python scalars`
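That `ValueError` is what PyTorch raises when a tensor with more than one element is asked for a single Python number, for example via `float(t)`; building one array out of a list that holds a 4-D image batch and a 1-D label batch plausibly ends up there. A minimal sketch that converts each tensor in the list separately instead (the shapes are small stand-ins, not the real batch):

```python
import torch

# Small stand-in for the printed `target`: [image batch, label batch].
target = [torch.randn(4, 3, 8, 8), torch.tensor([24, 23, 12, 21])]

# Asking a multi-element tensor for one Python scalar raises the same ValueError.
try:
    float(target[1])
except ValueError as err:
    print("ValueError:", err)

# Convert each tensor on its own; .detach().cpu() also covers tensors that
# require grad or live on the GPU.
images_np, labels_np = (t.detach().cpu().numpy() for t in target)
print(images_np.shape, labels_np.shape)  # (4, 3, 8, 8) (4,)
```

The two arrays have different shapes, so keeping them as separate arrays (or a tuple of arrays) is usually more useful than forcing them into one.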

How can I fix this? Any suggestions are welcome.

Thanks in advance.