Thanks a lot, I found which layers are detached!

Cool! What was the issue? I couldn't find it by skimming through the code.

Hi ptrblck, I have the same problem as Naruto, and the training part of my code is similar. The model is a modified U-Net. During training, this line:

```python
writer.add_histogram('grads/' + tag, value.grad.data.cpu().numpy(), global_step)
```

raises:

```
AttributeError: 'NoneType' object has no attribute 'data'
```

```python
def train_net(net,
              device,
              epochs=5,
              batch_size=1,
              lr=0.001,
              val_percent=0.7,
              save_cp=True,
              img_scale=0.5):
    writer = SummaryWriter(comment=f'LR_{lr}_BS_{batch_size}_SCALE_{img_scale}')
    global_step = 0

    logging.info(f'''Starting training:
        Epochs:          {epochs}
        Batch size:      {batch_size}
        Learning rate:   {lr}
        Checkpoints:     {save_cp}
        Device:          {device.type}
        Images scaling:  {img_scale}
    ''')

    optimizer = optim.Adam(net.parameters(), lr=lr, weight_decay=1e-4)
    scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'min' if net.n_classes > 1 else 'max', patience=2)
    if net.n_classes > 1:
        criterion = nn.CrossEntropyLoss()
    else:
        criterion = nn.BCELoss()
    cudnn.benchmark = True

    img_ids = [splitext(file)[0] for file in listdir(dir_img) if
               not file.startswith('.')]
    train_img_ids, val_img_ids = train_test_split(img_ids, test_size=val_percent, random_state=41)
    train_data_name = []
    val_data_name = []
    time_get_data = time.strftime("%Y_%m_%d", time.localtime())
    with open(os.path.join(os.getcwd(), "train_{}.txt".format(time_get_data)), "w") as f:
        for x in range(len(train_img_ids)):
            f.write('{}\n'.format(train_img_ids[x]))
            train_data_name.append(train_img_ids[x])
        f.close()
    with open(os.path.join(os.getcwd(), "test_{}.txt".format(time_get_data)), "w") as f:
        for y in range(len(val_img_ids)):
            f.write('{}\n'.format(val_img_ids[y]))
            val_data_name.append(val_img_ids[y])
        f.close()

    for epoch in range(epochs):
        train = BasicDataset(train_img_ids, dir_img, dir_mask, img_scale, transform=transform_image)
        val = BasicDataset(val_img_ids, dir_img, dir_mask, img_scale, transform=None)
        train_loader = DataLoader(train,
                                  batch_size=batch_size,
                                  shuffle=True,
                                  num_workers=0,
                                  pin_memory=True)
        val_loader = DataLoader(val,
                                batch_size=batch_size,
                                shuffle=False,
                                num_workers=0,
                                pin_memory=True,
                                drop_last=True)
        net.train()
        n_train = len(train_img_ids)
        epoch_loss = 0
        with tqdm(total=n_train, desc=f'Epoch {epoch + 1}/{epochs}', unit='img') as pbar:  # progress bar setup
            for batch in train_loader:
                imgs = batch['image']
                true_masks = batch['mask']
                assert imgs.shape[1] == net.n_channels, \
                    f'Network has been defined with {net.n_channels} input channels, ' \
                    f'but loaded images have {imgs.shape[1]} channels. Please check that ' \
                    'the images are loaded correctly.'

                imgs = imgs.to(device=device, dtype=torch.float32)
                mask_type = torch.float32 if net.n_classes == 1 else torch.long
                true_masks = true_masks.to(device=device, dtype=mask_type)

                masks_pred = net(imgs)

                smooth = 1e-5
                num = true_masks.size(0)
                masks_pred_dice = masks_pred.view(num, -1)
                true_masks_dice = true_masks.view(num, -1)
                intersection = (masks_pred_dice * true_masks_dice)
                a = masks_pred_dice.sum(1)
                dice = (2. * intersection.sum(1) + smooth) / (masks_pred_dice.sum(1) + true_masks_dice.sum(1) + smooth)
                b = dice.sum()
                dice = 1 - dice.sum() / num
                loss = 0.5 * criterion(masks_pred, true_masks) + dice
                epoch_loss += loss.item()
                writer.add_scalar('Loss/train', loss.item(), global_step)
                pbar.set_postfix(**{'loss (batch)': loss.item()})

                optimizer.zero_grad()
                loss.backward()
                nn.utils.clip_grad_value_(net.parameters(), 0.1)
                optimizer.step()

                pbar.update(imgs.shape[0])
                global_step += 1
                if global_step % (n_train // (-1 * batch_size)) == 0:
                    for tag, value in net.named_parameters():
                        tag = tag.replace('.', '/')
                        writer.add_histogram('weights/' + tag, value.data.cpu().numpy(), global_step)
                        writer.add_histogram('grads/' + tag, value.grad.data.cpu().numpy(), global_step)
                    val_score = eval_net(net, val_loader, device)
                    scheduler.step(val_score)
                    writer.add_scalar('learning_rate', optimizer.param_groups[0]['lr'], global_step)

                    if net.n_classes > 1:
                        logging.info('Validation cross entropy: {}'.format(val_score))
                        writer.add_scalar('Loss/test', val_score, global_step)
                    else:
                        logging.info('Validation Dice Coeff: {}'.format(val_score))
                        writer.add_scalar('Dice/test', val_score, global_step)

                    writer.add_images('images', imgs, global_step)
                    if net.n_classes == 1:
                        writer.add_images('masks/true', true_masks, global_step)
                        writer.add_images('masks/pred', masks_pred > 0.5, global_step)

        if save_cp:
            try:
                os.mkdir(dir_checkpoint)
                logging.info('Created checkpoint directory')
            except OSError:
                pass
            torch.save(net.state_dict(),
                       dir_checkpoint + f'CP_epoch{epoch + 1}.pth')
            logging.info(f'Checkpoint {epoch + 1} saved !')

    writer.close()
```

AttUnet:

```python
import torch
import torch.nn as nn


class conv_block(nn.Module):
    def __init__(self, in_size, out_size):
        super(conv_block, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_size, out_size, 3, 1, 1), nn.BatchNorm2d(out_size), nn.ReLU()
        )
        self.conv2 = nn.Sequential(
            nn.Conv2d(out_size, out_size, 3, 1, 1), nn.BatchNorm2d(out_size), nn.ReLU()
        )

    def forward(self, inputs):
        outputs1 = self.conv1(inputs)
        outputs = self.conv2(outputs1)
        return outputs


class upconv(nn.Module):
    def __init__(self, in_size, out_size):
        super(upconv, self).__init__()
        self.up = nn.Sequential(
            nn.Upsample(scale_factor=2, mode='bilinear', align_corners=True),
            nn.Conv2d(in_size, out_size, 3, 1, 1),
            nn.ReLU()
        )

    def forward(self, inputs1):
        outputs1 = self.up(inputs1)
        return outputs1


class Attention_block(nn.Module):
    def __init__(self, F_g, F_l, F_int):
        super(Attention_block, self).__init__()
        self.W_g = nn.Sequential(
            nn.Conv2d(F_g, F_int, kernel_size=1, stride=1, bias=True),
            nn.BatchNorm2d(F_int)
        )
        self.W_x = nn.Sequential(
            nn.Conv2d(F_l, F_int, kernel_size=1, stride=1, bias=True),
            nn.BatchNorm2d(F_int)
        )
        self.psi = nn.Sequential(
            nn.Conv2d(F_int, 1, kernel_size=1, stride=1, bias=True),
            nn.BatchNorm2d(1),
            nn.Sigmoid()
        )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, input_g, input_x):
        input_g1 = self.W_g(input_g)
        input_x1 = self.W_g(input_x)
        psi = self.relu(input_g1 + input_x1)
        psi = self.psi(psi)
        return input_x * psi


class AttUnet(nn.Module):
    def __init__(
        self, feature_scale=4, n_classes=21, in_channels=3, deep_supervision=False
    ):
        super(AttUnet, self).__init__()
        self.deep_supervision = deep_supervision
        self.feature_scale = feature_scale
        self.n_classes = n_classes
        self.n_channels = in_channels
        filters = [8, 16, 32, 64, 128]

        # downsampling
        self.conv1 = conv_block(self.n_channels, filters[0])
        self.maxpool1 = nn.MaxPool2d(kernel_size=2)
        self.conv2 = conv_block(filters[0], filters[1])
        self.maxpool2 = nn.MaxPool2d(kernel_size=2)
        self.conv3 = conv_block(filters[1], filters[2])
        self.maxpool3 = nn.MaxPool2d(kernel_size=2)
        self.conv4 = conv_block(filters[2], filters[3])
        self.maxpool4 = nn.MaxPool2d(kernel_size=2)
        self.conv5 = conv_block(filters[3], filters[4])

        self.up_concat4 = upconv(filters[4], filters[3])
        self.Att4 = Attention_block(F_g=filters[3], F_l=filters[3], F_int=filters[2])
        self.upconv4 = conv_block(filters[4], filters[3])
        self.up_concat3 = upconv(filters[3], filters[2])
        self.Att3 = Attention_block(F_g=filters[2], F_l=filters[2], F_int=filters[1])
        self.upconv3 = conv_block(filters[3], filters[2])
        self.up_concat2 = upconv(filters[2], filters[1])
        self.Att2 = Attention_block(F_g=filters[1], F_l=filters[1], F_int=filters[0])
        self.upconv2 = conv_block(filters[2], filters[1])
        self.up_concat1 = upconv(filters[1], filters[0])
        self.Att1 = Attention_block(F_g=filters[0], F_l=filters[0], F_int=filters[0] // 2)
        self.upconv1 = conv_block(filters[1], filters[0])
        self.final = nn.Conv2d(filters[0], n_classes, 1)

    def forward(self, inputs):
        conv1 = self.conv1(inputs)
        maxpool1 = self.maxpool1(conv1)
        conv2 = self.conv2(maxpool1)
        maxpool2 = self.maxpool2(conv2)
        conv3 = self.conv3(maxpool2)
        maxpool3 = self.maxpool3(conv3)
        conv4 = self.conv4(maxpool3)
        maxpool4 = self.maxpool4(conv4)
        conv5 = self.conv5(maxpool4)

        d4 = self.up_concat4(conv5)
        att4 = self.Att4(input_g=d4, input_x=conv4)
        m4 = torch.cat((att4, d4), dim=1)
        n4 = self.upconv4(m4)
        d3 = self.up_concat3(n4)
        att3 = self.Att3(input_g=d3, input_x=conv3)
        m3 = torch.cat((att3, d3), dim=1)
        n3 = self.upconv3(m3)
        d2 = self.up_concat2(n3)
        att2 = self.Att2(input_g=d2, input_x=conv2)
        m2 = torch.cat((att2, d2), dim=1)
        n2 = self.upconv2(m2)
        d1 = self.up_concat1(n2)
        att1 = self.Att1(input_g=d1, input_x=conv1)
        m1 = torch.cat((att1, d1), dim=1)
        n1 = self.upconv1(m1)

        final = self.final(n1)
        output = torch.sigmoid(final)
        return output
```

Maybe there is a problem with my weights, but I can't find it. Could you check it for me? Thank you very much!

The error is raised if the current parameter does not have a valid gradient, i.e. its `.grad` attribute is `None`.

(Besides that, you should not use the `.data` attribute; you can simply remove it.)

To debug it, check the `.grad` attribute of all parameters after the `backward()` call to see which parameter doesn't have a gradient.

Depending on your use case this might be expected (e.g. if the parameter wasn't used in the corresponding forward pass) or it could be a bug in your code (e.g. you've detached the graph accidentally).
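A minimal sketch of that debugging step. `BuggyGate` is a hypothetical, cut-down module that copies one pattern from the posted `Attention_block.forward`: `self.W_g` is applied to both inputs and `self.W_x` is never called, so the `W_x` parameters never join the graph and their `.grad` stays `None`. `params_without_grad` and `grad_histograms` are likewise hypothetical helpers; the latter shows a logging loop that skips such parameters and uses `.detach()` instead of `.data`.

```python
import torch
import torch.nn as nn


class BuggyGate(nn.Module):
    """Hypothetical minimal module mirroring the posted Attention_block."""

    def __init__(self, channels: int):
        super().__init__()
        self.W_g = nn.Conv2d(channels, channels, kernel_size=1)
        self.W_x = nn.Conv2d(channels, channels, kernel_size=1)

    def forward(self, g, x):
        g1 = self.W_g(g)
        x1 = self.W_g(x)  # same pattern as the post: W_x is never used
        return x * torch.sigmoid(g1 + x1)


def params_without_grad(model: nn.Module):
    """Names of trainable parameters whose .grad is still None after backward()."""
    return [name for name, p in model.named_parameters()
            if p.requires_grad and p.grad is None]


def grad_histograms(model: nn.Module):
    """Collect gradient arrays for logging, skipping parameters without a gradient."""
    out = {}
    for name, p in model.named_parameters():
        if p.grad is None:
            continue  # writer.add_histogram(..., p.grad...) would fail here
        out['grads/' + name.replace('.', '/')] = p.grad.detach().cpu().numpy()
    return out


model = BuggyGate(4)
g = torch.randn(2, 4, 8, 8)
x = torch.randn(2, 4, 8, 8)
model(g, x).mean().backward()

missing = params_without_grad(model)
print(missing)  # ['W_x.weight', 'W_x.bias']
```

Calling the second `Sequential` in `forward` (e.g. `self.W_x(input_x)` in the posted block) would put those parameters back into the graph.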

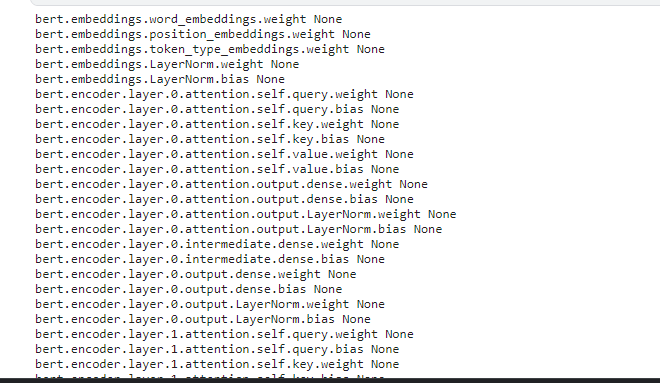

Hi, ptrblck. I still don't know what happens when I use ChildTuning in my code. I checked the parameters in each layer to figure out which layer has the problem, but all layers have grad = None. Would you mind helping me debug? Thanks.

```python
import numpy as np
import pandas as pd
import torch
import torch.nn as nn
import transformers
from sklearn import metrics
from sklearn.model_selection import train_test_split
from torch.distributions import Bernoulli
from transformers import get_linear_schedule_with_warmup

config = {
    "max_len": 512,
    "train_batch": 8,
    "valid_batch": 8,
    "epochs": 4,
    "model_path": "bert-base-uncased",
    "train_path": "../input/copilot/incoming.csv",
    'lr': 5e-5
}


class SentimentDataset():
    def __init__(self, text, target):
        self.text = text
        self.target = target
        self.tokenizer = transformers.BertTokenizer.from_pretrained(config['model_path'], do_lower_case=True)

    def __len__(self):
        return len(self.text)

    def __getitem__(self, index):
        text = str(self.text[index])
        text = " ".join(text.split())
        target = self.target[index]
        input = self.tokenizer.encode_plus(
            text,
            None,
            max_length=config['max_len'],
            truncation=True,
            pad_to_max_length=True,
        )
        ids = input['input_ids']
        mask = input['attention_mask']
        token_type_ids = input['token_type_ids']
        return {
            "ids": torch.tensor(ids, dtype=torch.long),
            "masks": torch.tensor(mask, dtype=torch.long),
            "token_type_ids": torch.tensor(token_type_ids, dtype=torch.long),
            "target": torch.tensor(target, dtype=torch.float)
        }


class Bertmodel(nn.Module):
    def __init__(self):
        super(Bertmodel, self).__init__()
        self.bert = transformers.BertModel.from_pretrained(config['model_path'])
        # freeze the up-flow layers
        for param in self.bert.parameters():
            param.requires_grad = True
        self.dropout = nn.Dropout(0.6)
        self.fc = nn.Linear(768, 3)
        # self.fc0 = nn.Linear(768, 768)
        # self.dropout1 = nn.Dropout(0.6)
        # self.fc1 = nn.Linear(768, 768)
        # self.dropout2 = nn.Dropout(0.3)
        # self.fc2 = nn.Linear(768, 768)
        # self.dropout3 = nn.Dropout(0.3)
        # self.fc3 = nn.Linear(768, 3)

    def forward(self, ids, mask, token_type):
        _, x = self.bert(ids, attention_mask=mask, token_type_ids=token_type, return_dict=False)
        x = self.dropout(x)
        x = self.fc(x)
        # x = self.fc0(x)
        # x = self.dropout1(x)
        # x = self.fc1(x)
        # x = self.dropout2(x)
        # x = self.fc2(x)
        # x = self.dropout3(x)
        # x = self.fc3(x)
        return x


def loss_fn(output, target):
    loss = nn.CrossEntropyLoss()(output, target)
    return loss


def train_fn(data_loader, model, optimizer, device, scheduler):
    model.train()
    trainloss = []
    for step, data in enumerate(data_loader):
        ids = data['ids']
        masks = data['masks']
        token_type = data['token_type_ids']
        target = data['target']
        ids = ids.to(device, dtype=torch.long)
        masks = masks.to(device, dtype=torch.long)
        token_type = token_type.to(device, dtype=torch.long)
        target = target.to(device, dtype=torch.long)
        optimizer.zero_grad()
        preds = model(ids, masks, token_type)
        loss = loss_fn(preds, target)
        loss.backward()
        optimizer.step()
        scheduler.step()
        trainloss.append(loss.item())
    loss = np.average(trainloss)
    return loss


def eval_fn(data_loader, model, device):
    fin_targets = []
    fin_outputs = []
    valloss = []
    valacc = []
    model.eval()
    with torch.no_grad():
        for data in data_loader:
            ids = data['ids']
            masks = data['masks']
            token_type = data['token_type_ids']
            target = data['target']
            ids = ids.to(device, dtype=torch.long)
            masks = masks.to(device, dtype=torch.long)
            token_type = token_type.to(device, dtype=torch.long)
            target = target.to(device, dtype=torch.long)
            preds = model(ids, masks, token_type)
            target = target.cpu().detach()
            preds = preds.cpu().detach()
            # target = target.cpu()
            # preds = preds.cpu()
            fin_preds = torch.argmax(preds, 1)
            fin_preds = fin_preds.cpu().detach()
            # fin_preds = fin_preds.cpu()
            accuracy = metrics.accuracy_score(target, fin_preds)
            loss = loss_fn(preds, target)
            valloss.append(loss.item())
            valacc.append(accuracy)
    accuracy = np.average(valacc)
    loss = np.average(valloss)
    return loss, accuracy  # we can return accuracy, if we want


device = torch.device("cuda")
# device = torch.device("cpu")
model = Bertmodel()
model.to(device)

df = pd.read_csv(config["train_path"]).fillna("none")
column_dict = {"not interested": 0, "maybe": 1, "interested": 2}
df = df.replace({"Label": column_dict})


def train(df):
    df_train, df_valid = train_test_split(df, test_size=0.2, random_state=42, stratify=df.Label.values)
    df_train = df_train.reset_index(drop=True)
    df_valid = df_valid.reset_index(drop=True)
    train_dataset = SentimentDataset(df_train.Message.values, df_train.Label.values)
    valid_dataset = SentimentDataset(df_valid.Message.values, df_valid.Label.values)
    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=config['train_batch'], num_workers=4)
    valid_loader = torch.utils.data.DataLoader(valid_dataset, batch_size=config['valid_batch'], num_workers=4)
    num_train_steps = int(len(df_train) / config['train_batch'] * config['epochs'])

    # child tune
    for p in model.parameters():
        grad = p.grad.data
        ## child-tuning_f begin ##
        reserve_p = 0.2  # hyperparameter: ratio of the gradient that is reserved
        grad_mask = Bernoulli(grad.new_full(size=grad.size(), fill_value=reserve_p))
        grad *= grad_mask.sample() / reserve_p
        ## child-tuning_f end ##

    optimizer = torch.optim.AdamW(model.parameters(), lr=config['lr'])
    scheduler = get_linear_schedule_with_warmup(
        optimizer,
        num_warmup_steps=0,
        num_training_steps=num_train_steps
    )
    for epoch in range(config['epochs']):
        loss_train = train_fn(train_loader, model, optimizer, device, scheduler)
        print(f"Train_Loss-->> {loss_train}")
        loss_eval, acc_eval = eval_fn(valid_loader, model, device)
        print(f"Val_Loss-->> {loss_eval}")
        print(f"Val_Acc-->>{acc_eval}")


train(df)
```

@ptrblck I would really appreciate it if you can help me out.
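In the posted `train()` function, the child-tuning loop runs before any `loss.backward()` call, and `.grad` is only created by `backward()`, so every `p.grad` is still `None` at that point. Below is a minimal sketch that applies the Child-Tuning_F Bernoulli mask after `backward()` and before `optimizer.step()`, i.e. inside the training step (`train_fn` in the posted code). `child_tuning_f_mask` is a hypothetical helper and the `nn.Linear` model is a toy stand-in for the BERT classifier; whether this placement matches the reference Child-Tuning implementation in every detail is an assumption.

```python
import torch
import torch.nn as nn
from torch.distributions import Bernoulli


def child_tuning_f_mask(model: nn.Module, reserve_p: float = 0.2) -> None:
    """Hypothetical helper: mask gradients in place, Child-Tuning_F style.

    Call it after loss.backward() (so .grad exists) and before optimizer.step().
    """
    for p in model.parameters():
        if p.grad is None:  # parameter was not part of this forward pass
            continue
        mask = Bernoulli(torch.full_like(p.grad, reserve_p)).sample()
        p.grad.mul_(mask / reserve_p)  # keep ~reserve_p of the entries, rescaled


# Toy training step with a stand-in model
torch.manual_seed(0)
model = nn.Linear(8, 3)
optimizer = torch.optim.AdamW(model.parameters(), lr=5e-5)
x, target = torch.randn(4, 8), torch.tensor([0, 1, 2, 1])

optimizer.zero_grad()
loss = nn.CrossEntropyLoss()(model(x), target)
loss.backward()                  # populates p.grad
child_tuning_f_mask(model, 0.2)  # the gradients now exist and can be masked
optimizer.step()
```

Dividing by `reserve_p` keeps the expected value of each gradient entry unchanged, which is why the posted snippet rescales the sampled mask the same way.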

Hi ptrblck,

I have run into a similar problem and would really appreciate your help.

I am trying to include `nn.Embedding` in my transformer model, but `x_input.grad` is `None`, so `x_grad = torch.sign(x_input.grad.data)` in my `create_augmented_data` function fails with a `NoneType` error.

Here is the code for my model:

```python
import torch
import torch.nn as nn


class MLP(torch.nn.Module):
    def __init__(self, num_fc_layers, num_fc_units, dropout_rate, dim_num_heads):
        super().__init__()
        if num_fc_units % dim_num_heads != 0:
            num_fc_units = num_fc_units // dim_num_heads * dim_num_heads
        embed_dim = num_fc_units
        self.embedding = nn.Embedding(998, embed_dim).requires_grad_(True)
        self.transformer_encoder_layer = nn.TransformerEncoderLayer(
            d_model=embed_dim,
            nhead=dim_num_heads,  # number of heads in the multi-head attention models
            dim_feedforward=embed_dim,
            dropout=0.1,
            activation='relu'
        )
        self.transformer_encoder = nn.TransformerEncoder(self.transformer_encoder_layer, num_layers=num_fc_layers)
        embed_dim = num_fc_units
        self.layers = nn.ModuleList()
        self.layers.append(nn.Linear(embed_dim, embed_dim))
        self.layers.append(nn.ReLU(True))
        self.layers.append(nn.Dropout(p=dropout_rate))
        for i in range(num_fc_layers):
            self.layers.append(nn.Linear(embed_dim, embed_dim))
            self.layers.append(nn.ReLU(True))
            self.layers.append(nn.Dropout(p=dropout_rate))
        self.output_layer = nn.Linear(embed_dim, 24)

    def forward(self, x):
        x = x.long()
        x = self.embedding(x)
        x = torch.transpose(x, 0, 1)
        x = self.transformer_encoder(x)
        x = torch.transpose(x, 0, 1)
        x = torch.mean(x, dim=1)
        for i in range(len(self.layers)):
            x = self.layers[i](x)
        x = self.output_layer(x)
        return x
```

Code for the `create_augmented_data` function:

```python
def create_augmented_data(x_train, y_train, eps, model):
    x_input = torch.from_numpy(x_train).float()
    y_true = torch.from_numpy(y_train).long()
    x_input = x_input.to(device)
    y_true = y_true.to(device)
    x_input = Variable(x_input, requires_grad=True)
    y_true = Variable(y_true)
    train_outputs = model(x_input)
    for name, param in model.named_parameters():
        if param.grad is None:
            print(name, 'is None')
        else:
            print('param {}: {}'.format(name, param.grad.abs().sum()))
    ad_loss = torch.nn.CrossEntropyLoss()
    loss_cal = ad_loss(train_outputs, y_true)
    loss_cal.backward(retain_graph=True)
    x_grad = torch.sign(x_input.grad.data)
    x_adversarial = x_input + eps * x_grad
    x_aug = torch.cat([x_input, x_adversarial], dim=0)
    y_aug = torch.cat([y_true, y_true], dim=0)
    return x_aug, y_aug
```

Code for training:

```python
model = MLP(num_fc_layers, num_fc_units, dropout_rate, num_heads).to(device)
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, weight_decay=weight_decay)

for epoch in range(2):
    # print("EPOCHS: {0}".format(epoch))
    train_losses = []
    correct = 0
    loss = 0
    total_predictions = 0
    model.train()
    for i, (x, y) in enumerate(train_loader):
        x_input, y_input = x.numpy(), y.numpy()
        x, y = x.to(device), y.to(device)
        optimizer.zero_grad()
        model.eval()
        x_aug, y_aug = create_augmented_data(x_input, y_input, eps=1.0, model=model)
        x_aug, y_aug = x_aug.to(device), y_aug.to(device)
        model.train()
        output = model(x_aug)
        loss = criterion(output, y_aug)
        train_losses.append(loss)
        loss.backward()
        optimizer.step()
        pred = output.max(1, keepdim=True)[1]
        correct += pred.eq(y_aug.view_as(pred)).sum().item()
        total_predictions += y_aug.size(0)
```

Attempts I made to solve the problem include:

- Printing each step to spot where the problem comes from. Everything seems fine up to the line `x_grad = torch.sign(x_input.grad.data)`; `print(x_input.grad)` right before this line outputs `None`:

  ```
  Variable: x_input.shape: torch.Size([32, 150])
  Variable: y_true.shape: torch.Size([32])
  train_outputs.shape: torch.Size([32, 24])
  ad_loss: CrossEntropyLoss()
  loss_cal: tensor(3.1756, grad_fn=<NllLossBackward0>)
  x_input.grad None
  ```

- Printing `.requires_grad` for each model parameter after `train_outputs = model(x_input)`; all parameters' `requires_grad` values are `True`:

  ```python
  for name, param in model.named_parameters():
      print(name, param.requires_grad)
  ```

- Checking whether `x_input` is not a leaf tensor or whether there are any other operations in between:

  ```python
  # included these two lines after loss_cal.backward(retain_graph=True)
  print(x_input.grad_fn)  # returns None
  print(x_input.is_leaf)  # returns True
  ```

- Checking `.grad` for all parameters; every `param.grad` is `None`:

  ```python
  for name, param in model.named_parameters():
      if param.grad is None:
          print(name, 'is None')
      else:
          print('param {}: {}'.format(name, param.grad.abs().sum()))
  ```

Thank you so much for your help!

Transforming a tensor to an integer type will detach it, as integer types are not (usefully) differentiable:

```python
x = x.long()
```

Also, `Variable`s have been deprecated since PyTorch 0.4, so you should remove them and use plain tensors instead.
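A small sketch of both points, assuming NumPy input as in `create_augmented_data` (`x_np` is an illustrative name): a plain tensor with `requires_grad_()` replaces `Variable(..., requires_grad=True)`, and the `.long()` cast returns a tensor that is no longer tracked by autograd.

```python
import numpy as np
import torch

# Plain tensors replace Variable(..., requires_grad=True) since PyTorch 0.4
x_np = np.random.rand(2, 5).astype(np.float32)
x_input = torch.from_numpy(x_np).requires_grad_()
print(x_input.requires_grad, x_input.is_leaf)  # True True

# Casting to an integer dtype cuts the result out of the autograd graph
x_long = x_input.long()
print(x_long.requires_grad, x_long.grad_fn)  # False None
```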

Hi ptrblck,

Thank you so much for your help, I really appreciate it. I have removed the `Variable`s, replaced `x_input = torch.from_numpy(x_train).float()` with `x_input = torch.from_numpy(x_train).int()` in the `create_augmented_data` function, and removed `x = x.long()`, but I got the same error.

I am wondering: if int types are not differentiable but `nn.Embedding` requires int types, what should I do in this situation? I have seen people do something like `x = self.embedding(x.long())`, but I still get the same error.

Thank you!

This is the case, as mentioned before: integer types are not differentiable, since their gradient would be zero almost everywhere and undefined (or inf) at the rounding points. @KFrank explains it in more detail here.
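A quick check of this behavior: PyTorch refuses to let an integer tensor require gradients, while the same values as a floating point tensor work as expected. The variable names are illustrative only.

```python
import torch

try:
    torch.tensor([1, 2, 3], dtype=torch.long, requires_grad=True)
    int_requires_grad_ok = True
except RuntimeError:
    # only floating point (and complex) tensors can require gradients
    int_requires_grad_ok = False

print(int_requires_grad_ok)  # False

# The same values as floats are differentiable: d/dx sum(x**2) = 2x
x_float = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
(x_float ** 2).sum().backward()
print(x_float.grad)  # tensor([2., 4., 6.])
```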

I see. Thank you, ptrblck. So, in this case, would excluding the embedding layer from backpropagation solve the error? If so, how would I go about doing it?

The issue is not caused by the embedding layer, but by trying to access the gradients of an integer input (which is not supported) in:

```python
x_grad = torch.sign(x_input.grad.data)
```
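A minimal sketch of what happens in the posted setup, using a hypothetical stand-in (an `nn.Embedding` plus a linear head instead of the full transformer): the embedding weights receive gradients as usual, but the float input that is cast with `.long()` before the lookup never does, so its `.grad` stays `None`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
embedding = nn.Embedding(998, 8)
head = nn.Linear(8, 24)

# Float "token ids" that require grad, like x_input in create_augmented_data
x_input = torch.randint(0, 998, (4, 150)).float().requires_grad_()
logits = head(embedding(x_input.long()).mean(dim=1))
loss = nn.functional.cross_entropy(logits, torch.randint(0, 24, (4,)))
loss.backward()

print(x_input.grad)                       # None: the .long() cast detached the input
print(embedding.weight.grad is not None)  # True: the embedding weights still train
```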

Hi ptrblck,

I see. Thank you! Do you have any suggestions on how to fix this? I am not sure if there is an easy way. I am thinking about creating the augmented data from the embedding output to avoid accessing the gradients of the integer input.

```python
import torch
import torch.nn as nn


class MLP(torch.nn.Module):
    def __init__(self, num_fc_layers, num_fc_units, dropout_rate, dim_num_heads):
        super().__init__()
        if num_fc_units % dim_num_heads != 0:
            num_fc_units = num_fc_units // dim_num_heads * dim_num_heads
        embed_dim = num_fc_units
        self.embedding = nn.Embedding(998, embed_dim)
        self.transformer_encoder_layer = nn.TransformerEncoderLayer(
            d_model=embed_dim,
            nhead=dim_num_heads,  # number of heads in the multi-head attention models
            dim_feedforward=embed_dim,
            dropout=0.1,
            activation='relu'
        )
        self.transformer_encoder = nn.TransformerEncoder(self.transformer_encoder_layer, num_layers=num_fc_layers)
        embed_dim = num_fc_units
        self.layers = nn.ModuleList()
        self.layers.append(nn.Linear(embed_dim, embed_dim))
        self.layers.append(nn.ReLU(True))
        self.layers.append(nn.Dropout(p=dropout_rate))
        for i in range(num_fc_layers):
            self.layers.append(nn.Linear(embed_dim, embed_dim))
            self.layers.append(nn.ReLU(True))
            self.layers.append(nn.Dropout(p=dropout_rate))
        self.output_layer = nn.Linear(embed_dim, 24)

    def forward(self, x):
        x = self.embedding(x.long())
        if self.training:  # optionally, add noise only during training
            noise = torch.randn_like(x) * 0.01  # adjust the noise level to your requirements
            x += noise
        x = torch.transpose(x, 0, 1)
        x = self.transformer_encoder(x)
        x = torch.transpose(x, 0, 1)
        x = torch.mean(x, dim=1)
        for i in range(len(self.layers)):
            x = self.layers[i](x)
        x = self.output_layer(x)
        return x
```

Is this a reasonable approach? Are there any alternative fixes I could try?
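Adding Gaussian noise to the embeddings, as above, is a different regularizer from the gradient-sign (FGSM-style) perturbation that `create_augmented_data` was trying to compute. If the adversarial version is the goal, one option is to take the gradient with respect to the float embedding output instead of the integer input. A minimal sketch, assuming the model can be split into an embedding stage and a second stage; `TinyTextClassifier`, `forward_from_embeddings`, and `fgsm_embedding_loss` are hypothetical names, and the mean-pooled MLP head stands in for the transformer encoder.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyTextClassifier(nn.Module):
    """Hypothetical stand-in for the posted MLP, split into two stages."""

    def __init__(self, vocab_size=998, embed_dim=16, num_classes=24):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim)
        self.head = nn.Sequential(nn.Linear(embed_dim, embed_dim), nn.ReLU(),
                                  nn.Linear(embed_dim, num_classes))

    def forward_from_embeddings(self, emb):
        # emb: [batch, seq_len, embed_dim]; mean-pool over the sequence
        return self.head(emb.mean(dim=1))

    def forward(self, tokens):
        return self.forward_from_embeddings(self.embedding(tokens.long()))


def fgsm_embedding_loss(model, tokens, targets, eps):
    """Clean loss plus loss on FGSM-perturbed embeddings (float space, not token ids)."""
    emb = model.embedding(tokens.long())
    clean_loss = F.cross_entropy(model.forward_from_embeddings(emb), targets)

    # Gradient w.r.t. the float embedding output; retain the graph for the final backward
    emb_grad, = torch.autograd.grad(clean_loss, emb, retain_graph=True)
    adv_emb = emb + eps * emb_grad.sign()  # emb_grad is a constant here

    adv_loss = F.cross_entropy(model.forward_from_embeddings(adv_emb), targets)
    return clean_loss + adv_loss


torch.manual_seed(0)
model = TinyTextClassifier()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
tokens = torch.randint(0, 998, (32, 150))
targets = torch.randint(0, 24, (32,))

optimizer.zero_grad()
loss = fgsm_embedding_loss(model, tokens, targets, eps=0.01)
loss.backward()  # gradients flow into the embedding and head weights
optimizer.step()
```

Because `eps` is now measured in embedding units rather than raw input units, the `eps=1.0` from the original code would likely need to be retuned; the `0.01` above is only a placeholder, not a tested value.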