I’m trying to feed the input features into a model with 3 parallel branches (2*CNN + transformer encoder):

```python
import torch
import torch.nn as nn
from torchsummary import summary

# change nn.Sequential to take a dict to make it more readable

class parallel_all_you_want(nn.Module):  # base module
    # Define all layers present in the network
    def __init__(self, num_emotions):
        super().__init__()  # initializes internal module state

        ################## TRANSFORMER BLOCK #####################
        # Max-pool the input feature map/tensor before the transformer.
        # A rectangular kernel worked better here for the rectangular input.
        self.transformer_maxpool = nn.MaxPool2d(kernel_size=[1, 4], stride=[1, 4])

        # Define a single transformer encoder layer:
        # self-attention + feedforward network from "Attention Is All You Need".
        # 4-head self-attention, followed by a 40 --> 512 --> 40 feedforward network
        transformer_layer = nn.TransformerEncoderLayer(
            d_model=40,           # input features (frequency) dim; maxpooling shrinks time: 40*259 -> 40*64 (MFCC*time)
            nhead=4,              # 4 attention heads in each multi-head self-attention
            dim_feedforward=512,  # 2 layers in each encoder layer's feedforward network: dim 40 --> 512 --> 40
            dropout=0.4,
            activation='relu',    # ReLU: avoids saturation/vanishing gradients, cheap to compute
        )

        # I'm using 4 instead of the 6 identical stacked encoder layers used in the Attention Is All You Need paper.
        # The complete transformer block contains 4 full encoder layers (each w/ multi-head self-attention + feedforward).
        self.transformer_encoder = nn.TransformerEncoder(transformer_layer, num_layers=4)

        # ======================== 1ST PARALLEL 2D CONVOLUTION BLOCK ===========================
        # 3 sequential Conv2d layers: (1, 40, 259) --> (16, 20, 129) --> (32, 5, 32) --> (64, 1, 8)
        self.conv2Dblock1 = nn.Sequential(
            # 1st 2D convolution layer
            nn.Conv2d(
                in_channels=1,    # input volume depth == input channel dim == 1
                out_channels=16,  # expand output feature map volume's depth to 16
                kernel_size=3,    # 3*3 kernel, stride 1
                stride=1,
                padding=1,
            ),
            nn.BatchNorm2d(16),  # batch normalize the output feature map before activation
            nn.ReLU(),           # feature map --> activation map
            nn.MaxPool2d(kernel_size=2, stride=2),  # typical maxpool kernel size
            nn.Dropout(p=0.3),

            # 2nd 2D convolution layer, identical to the last except output dim
            nn.Conv2d(
                in_channels=16,
                out_channels=32,  # expand output feature map volume's depth to 32
                kernel_size=3,
                stride=1,
                padding=1,
            ),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=4, stride=4),  # increase maxpool kernel size
            nn.Dropout(p=0.3),

            # 3rd 2D convolution layer, identical to the last except output dim
            nn.Conv2d(
                in_channels=32,
                out_channels=64,  # expand output feature map volume's depth to 64
                kernel_size=3,
                stride=1,
                padding=1,
            ),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=4, stride=4),
            nn.Dropout(p=0.3),
        )

        # ======================== 2ND PARALLEL 2D CONVOLUTION BLOCK ===========================
        # 3 sequential Conv2d layers: (1, 40, 259) --> (16, 20, 129) --> (32, 5, 32) --> (64, 1, 8)
        self.conv2Dblock2 = nn.Sequential(
            # 1st 2D convolution layer
            nn.Conv2d(
                in_channels=1,    # input volume depth == input channel dim == 1
                out_channels=16,  # expand output feature map volume's depth to 16
                kernel_size=3,    # 3*3 kernel, stride 1
                stride=1,
                padding=1,
            ),
            nn.BatchNorm2d(16),  # batch normalize the output feature map before activation
            nn.ReLU(),           # feature map --> activation map
            nn.MaxPool2d(kernel_size=2, stride=2),  # typical maxpool kernel size
            nn.Dropout(p=0.3),

            # 2nd 2D convolution layer, identical to the last except output dim
            nn.Conv2d(
                in_channels=16,
                out_channels=32,  # expand output feature map volume's depth to 32
                kernel_size=3,
                stride=1,
                padding=1,
            ),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=4, stride=4),  # increase maxpool kernel size
            nn.Dropout(p=0.3),

            # 3rd 2D convolution layer, identical to the last except output dim
            nn.Conv2d(
                in_channels=32,
                out_channels=64,  # expand output feature map volume's depth to 64
                kernel_size=3,
                stride=1,
                padding=1,
            ),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=4, stride=4),
            nn.Dropout(p=0.3),
        )

        # ====================== FINAL LINEAR BLOCK ==========================
        # Linear layer that takes the final concatenated embedding tensor from the
        # parallel 2D CNNs and the transformer block, and outputs num_emotions (8) logits.
        # The transformer block outputs a time*batch*40 feature map, which we time-average to a 40-dim embedding.
        # 512*2 + 40 == 1064 input features --> 8 output emotions
        self.fc1_linear = nn.Linear(512 * 2 + 40, num_emotions)

        # Softmax layer for the 8 output logits from the final FC linear layer
        self.softmax_out = nn.Softmax(dim=1)  # dim == 1 is the class (emotion) dimension

    # Define one complete parallel forward pass of the input features tensor through 2*conv + 1*transformer blocks
    def forward(self, x):
        # =========== 1st parallel Conv2D block: 3 conv layers =================
        # x: input features, shape N*C*H*W (here N*1*40*259),
        # passed through 3 sequential 2D convolutional layers
        conv2d_embedding1 = self.conv2Dblock1(x)
        # Flatten the final 64*1*8 feature map to a length-512 1D array,
        # skipping the 1st (N/batch) dimension
        conv2d_embedding1 = torch.flatten(conv2d_embedding1, start_dim=1)

        # =========== 2nd parallel Conv2D block: 3 conv layers =================
        conv2d_embedding2 = self.conv2Dblock2(x)
        conv2d_embedding2 = torch.flatten(conv2d_embedding2, start_dim=1)

        # ============ 4-encoder-layer Transformer block =================
        # Maxpool input feature map: N*1*40*259 w/ 1*4 kernel --> N*1*40*64
        x_maxpool = self.transformer_maxpool(x)
        # Remove the channel dim: N*1*40*64 --> N*40*64
        x_maxpool_reduced = torch.squeeze(x_maxpool, 1)
        # Convert the maxpooled feature map: batch * freq * time --> time * batch * freq,
        # because the transformer encoder (batch_first=False by default) expects time * batch * embedding (freq)
        x = x_maxpool_reduced.permute(2, 0, 1)
        # Pass the reduced input feature map into the transformer encoder
        transformer_output = self.transformer_encoder(x)
        # Create the final transformer embedding by averaging over time (now the 0th dim):
        # time*batch*40 --> batch*40
        transformer_embedding = torch.mean(transformer_output, dim=0)

        # ============ concatenate frequency embeddings from conv and transformer blocks ===============
        complete_embedding = torch.cat([conv2d_embedding1, conv2d_embedding2, transformer_embedding], dim=1)

        # ============ final FC linear layer, need logits for loss =================
        output_logits = self.fc1_linear(complete_embedding)

        # ============ final softmax layer: softmax over the logits for prediction ==========
        output_softmax = self.softmax_out(output_logits)

        # Logits are needed to compute cross-entropy loss; softmax probabilities to predict the class
        return output_logits, output_softmax


# need device to instantiate model
device = 'cuda'

# instantiate model (emotions_dict is defined elsewhere in my script)
model = parallel_all_you_want(len(emotions_dict)).to(device)

# include input feature map dims (C, H, W) in call to summary()
summary(model, input_size=(1, 40, 259))
```
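The comment about making `nn.Sequential` take a dict can be handled with `collections.OrderedDict`: `nn.Sequential` accepts one, and each layer then becomes a named attribute instead of an integer index. This is a minimal sketch of only the first conv stage of `conv2Dblock1`; the layer names (`conv1`, `bn1`, ...) are my own illustrative choices, not from the original code.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

# nn.Sequential accepts an OrderedDict, so each sub-layer gets a name
# (accessible as conv_stage1.conv1, conv_stage1.bn1, ...).
# The names below are illustrative only.
conv_stage1 = nn.Sequential(OrderedDict([
    ('conv1', nn.Conv2d(in_channels=1, out_channels=16, kernel_size=3, stride=1, padding=1)),
    ('bn1', nn.BatchNorm2d(16)),
    ('relu1', nn.ReLU()),
    ('pool1', nn.MaxPool2d(kernel_size=2, stride=2)),
    ('drop1', nn.Dropout(p=0.3)),
]))

# Dummy batch of 2 feature maps with the input shape used above: (1, 40, 259)
out = conv_stage1(torch.randn(2, 1, 40, 259))
print(conv_stage1.conv1)
print(out.shape)  # torch.Size([2, 16, 20, 129])
```

The forward pass is unchanged; only the printed module summary and attribute access become more readable.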

Input shapes: `X_train`: (26727, 1, 40, 259), `y_train`: (26727,)
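As a sanity check on the `512 * 2 + 40` input size of `fc1_linear` for this 259-wide input, here is a small pure-Python sketch of PyTorch's output-length formula for conv/max-pool layers, `(L + 2*padding - kernel) // stride + 1` (dilation 1, `ceil_mode=False`). The helper functions are hypothetical and only mirror the layer settings in the model above.

```python
def out_len(length, kernel, stride, padding=0):
    # Output length of Conv2d / MaxPool2d along one axis (dilation=1, ceil_mode=False)
    return (length + 2 * padding - kernel) // stride + 1


def conv_branch_shape(channels, height, width):
    # Mirrors conv2Dblock1/2: three stages of (3x3 conv, stride 1, pad 1) + maxpool,
    # with output channels 16, 32, 64 and pool kernel/stride 2, 4, 4.
    for out_channels, pool in [(16, 2), (32, 4), (64, 4)]:
        height = out_len(height, 3, 1, padding=1)  # padded 3x3 conv keeps the size
        width = out_len(width, 3, 1, padding=1)
        height = out_len(height, pool, pool)
        width = out_len(width, pool, pool)
        channels = out_channels
    return channels, height, width


def transformer_branch_shape(height, width):
    # Mirrors transformer_maxpool (kernel/stride [1, 4]) before the permute
    # to time * batch * freq: returns (time steps, d_model)
    time_steps = out_len(width, 4, 4)
    freq = out_len(height, 1, 1)
    return time_steps, freq


c, h, w = conv_branch_shape(1, 40, 259)
time_steps, d_model = transformer_branch_shape(40, 259)
fc_in = 2 * (c * h * w) + d_model  # two flattened conv embeddings + time-averaged transformer embedding

print((c, h, w))              # (64, 1, 8)
print((time_steps, d_model))  # (64, 40)
print(fc_in)                  # 1064
```

So for a width of 259 each conv branch still ends at 64*1*8 = 512 features, the transformer sees 64 time steps of 40 features, and `fc1_linear` needs 1064 inputs.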

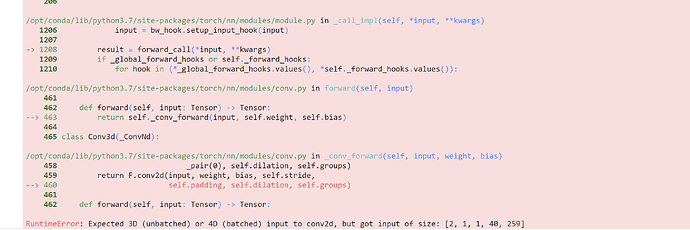

I’m getting this error:

```
AttributeError: 'NoneType' object has no attribute 'size'
```
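One possible cause, which is my assumption rather than something the traceback above confirms: `torchsummary`'s `summary()` registers forward hooks that call `.size()` on every element of a module's tuple output, and `nn.TransformerEncoderLayer` calls its internal `nn.MultiheadAttention` with `need_weights=False`, which returns `(attn_output, None)`. The sketch below shows that `None`, plus a minimal hook-based shape printer (`print_output_shapes` is a hypothetical helper, not a library API) that skips non-tensor outputs.

```python
import torch
import torch.nn as nn

# 1) nn.MultiheadAttention returns (output, None) when need_weights=False
attn = nn.MultiheadAttention(embed_dim=40, num_heads=4)
q = torch.randn(64, 2, 40)  # time * batch * freq
attn_out, attn_weights = attn(q, q, q, need_weights=False)
print(attn_out.shape, attn_weights)  # torch.Size([64, 2, 40]) None


# 2) Hypothetical helper: record output shapes via forward hooks,
#    ignoring None (or any non-tensor) entries in tuple outputs.
def print_output_shapes(model, example_input):
    shapes = []

    def hook(module, inputs, output):
        outs = output if isinstance(output, (tuple, list)) else (output,)
        shapes.append((module.__class__.__name__,
                       [tuple(o.shape) for o in outs if torch.is_tensor(o)]))

    # Hook every submodule except containers and the top-level model itself
    handles = [m.register_forward_hook(hook) for m in model.modules()
               if not isinstance(m, (nn.Sequential, nn.ModuleList)) and m is not model]
    try:
        with torch.no_grad():
            model(example_input)
    finally:
        for handle in handles:
            handle.remove()  # leave the model without extra hooks

    for name, shape in shapes:
        print(f"{name:<28} {shape}")
    return shapes


# Same encoder settings as in the model above, on a dummy time * batch * freq input
encoder = nn.TransformerEncoder(
    nn.TransformerEncoderLayer(d_model=40, nhead=4, dim_feedforward=512, dropout=0.4),
    num_layers=4,
)
shapes = print_output_shapes(encoder, torch.randn(64, 2, 40))
```

Independently of `summary()`, calling the model directly on a dummy batch, e.g. `model(torch.randn(2, 1, 40, 259).to(device))`, is a quick way to check whether the model itself runs.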