Hello. Can you help me understand feature attributions?

I suppose they are connected to feature importance, yes?

I looked through the California Housing example (Captum · Model Interpretability for PyTorch) and the Titanic example (Captum · Model Interpretability for PyTorch). In both, attributions (IntegratedGradients, for example) are calculated for each data sample and then averaged over the whole dataset. If the final value is close to zero, does this mean that the feature is not very important?

But in the Titanic example there is a note that larger-magnitude attributions correspond to the examples with larger Sibsp feature values, suggesting that the feature has a larger impact on the prediction for these examples. (See section In [19]; sorry, I can put only 1 image in this message.)

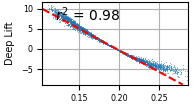

Now I'm looking at my data and see that the attribution depends strongly on the parameter value, but on average it is close to zero. How should I interpret that? Can you give me a clue?

(The x-axis is the parameter value and the y-axis is the DeepLift value here, but IG behaves the same way.)
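To make the question concrete, here is a minimal sketch with synthetic numbers (not my real data and not Captum output). It assumes a toy relationship where the attribution is simply proportional to the feature value; the names `feature_values` and `attributions` and the factor 2.0 are made up for illustration. It shows how the signed mean can be close to zero while the mean absolute attribution is clearly not.

```python
import random
import statistics

random.seed(0)

# Hypothetical feature values, roughly symmetric around zero (synthetic data).
feature_values = [random.uniform(-1.0, 1.0) for _ in range(1000)]

# Assumed toy relationship: the attribution is proportional to the feature value,
# so it is negative for low values and positive for high values.
attributions = [2.0 * x for x in feature_values]

# Signed mean: positive and negative attributions cancel each other out.
mean_signed = statistics.mean(attributions)

# Mean of absolute values: measures magnitude regardless of sign.
mean_abs = statistics.mean([abs(a) for a in attributions])

print(f"mean attribution:          {mean_signed:+.3f}")
print(f"mean absolute attribution: {mean_abs:.3f}")
```

In this toy case the signed average hides the dependence on the feature value because the signs cancel. Is that what is happening in my plot, and would the mean absolute attribution be the better summary here?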