The current implementation of autocast drops its cache between consecutive forward passes during training (for example, when using PyTorch Lightning).

This is because autocast should not be used during the backward pass (see the autocasting doc), so the autocast context is exited, and its cache cleared, after every forward pass.

When all layers in the model are unique, every forward pass calls `aten::to` / `aten::copy_` on all weights.

I was wondering if it would be possible to keep one cache instance during training.

Since the cache holds copies of the weights, I would expect the backward pass not to update these values directly, but would it be theoretically possible to support such a use case?

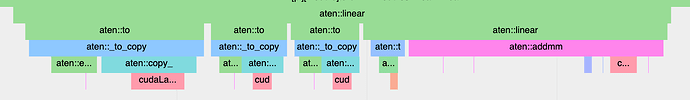

The image shows an example where the linear layer calls `aten::to` three times: once each for its input, weight, and bias.
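
For reference, here is a minimal sketch of how the behavior can be reproduced without Lightning. It assumes CPU autocast with `torch.bfloat16` (the same pattern should apply to CUDA with `float16`), and the helper `count_to_calls` is a hypothetical name introduced only for this example. It counts `aten::to` events with the profiler when the same layer is called twice inside one autocast context, and again when each call gets its own context, as happens across training steps.

```python
import torch
from torch.profiler import ProfilerActivity, profile


def count_to_calls(fn):
    """Run fn under the profiler and return how many aten::to events were recorded."""
    with profile(activities=[ProfilerActivity.CPU]) as prof:
        fn()
    return sum(evt.count for evt in prof.key_averages() if evt.key == "aten::to")


linear = torch.nn.Linear(8, 8)  # float32 leaf parameters with requires_grad=True
x = torch.randn(4, 8)           # float32 input, not eligible for the weight cache


def one_context():
    # Both calls share one autocast context, so the low-precision copies of
    # weight and bias made by the first call can be reused by the second.
    with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
        linear(x)
        linear(x)


def two_contexts():
    # Each call has its own context, mimicking consecutive training steps:
    # the cache is cleared on exit, so weight and bias are cast again.
    for _ in range(2):
        with torch.autocast(device_type="cpu", dtype=torch.bfloat16):
            linear(x)


shared = count_to_calls(one_context)
separate = count_to_calls(two_contexts)
print(f"aten::to calls, one context:  {shared}")
print(f"aten::to calls, two contexts: {separate}")
```

If the cache works as described, the second variant should report more `aten::to` calls than the first, since the weight and bias casts are repeated for every context.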