I was training an autoencoder to reconstruct black and white images of 128x128 pixels.

I used an architecture from a GitHub repository, specifically the encoder and decoder from:

Encoder and Decoder

and the autoencoder in:

RAE-L2

Minor changes had to be made to support 128x128 images.

I present the code below:

Encoder:

    from typing import List

    import torch
    import torch.nn as nn

    # ResBlock and ModelOutput are not defined in this post;
    # presumably they come from the repository referenced above.


    class Encoder(nn.Module):
        def __init__(self, encoded_space_dim):
            super().__init__()
            self.input_dim = (1, 128, 128)
            self.latent_dim = encoded_space_dim
            self.n_channels = 1

            layers = nn.ModuleList()
            # Three stride-2 convolutions: 128x128 -> 64x64 -> 32x32 -> 16x16
            layers.append(nn.Sequential(nn.Conv2d(self.n_channels, 64, 4, 2, padding=1)))
            layers.append(nn.Sequential(nn.Conv2d(64, 128, 4, 2, padding=1)))
            layers.append(nn.Sequential(nn.Conv2d(128, 128, 3, 2, padding=1)))
            layers.append(
                nn.Sequential(
                    ResBlock(in_channels=128, out_channels=32),
                    ResBlock(in_channels=128, out_channels=32),
                )
            )

            self.layers = layers
            self.depth = len(layers)
            # Flattened 128-channel 16x16 feature map -> latent vector
            self.embedding = nn.Linear(128 * 16 * 16, encoded_space_dim)

        def forward(self, x, output_layer_levels: List[int] = None):
            output = ModelOutput()
            max_depth = self.depth

            if output_layer_levels is not None:
                assert all(
                    self.depth >= levels > 0 or levels == -1
                    for levels in output_layer_levels
                ), (
                    f"Cannot output layer deeper than depth ({self.depth}). "
                    f"Got ({output_layer_levels})."
                )
                if -1 in output_layer_levels:
                    max_depth = self.depth
                else:
                    max_depth = max(output_layer_levels)

            out = x
            for i in range(max_depth):
                out = self.layers[i](out)
                if output_layer_levels is not None:
                    if i + 1 in output_layer_levels:
                        output[f"embedding_layer_{i+1}"] = out
                if i + 1 == self.depth:
                    output["embedding"] = self.embedding(out.reshape(x.shape[0], -1))
            return output
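As a sanity check on the 128x128 adaptation, the spatial sizes produced by the encoder's three strided convolutions can be worked out by hand with the standard convolution output-size formula. The sketch below is plain Python (no PyTorch needed); the helper names `conv2d_out` and `encoder_sizes` are hypothetical, and it assumes that `ResBlock` (not shown in the post) leaves the spatial size unchanged.

```python
def conv2d_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one spatial axis of a 2D convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of the three Conv2d layers in the encoder above
ENCODER_CONVS = [(4, 2, 1), (4, 2, 1), (3, 2, 1)]


def encoder_sizes(input_size: int = 128) -> list:
    """Spatial size after each strided convolution, starting from the input."""
    sizes = [input_size]
    for kernel, stride, padding in ENCODER_CONVS:
        sizes.append(conv2d_out(sizes[-1], kernel, stride, padding))
    return sizes


sizes = encoder_sizes(128)
# Assumption: the ResBlocks keep the 16x16 map, so the flattened feature
# count is channels * height * width.
flat_features = 128 * sizes[-1] * sizes[-1]
print(sizes, flat_features)
```

Under that assumption, the final feature map is 16x16 with 128 channels, which agrees with the `128 * 16 * 16` input size of the `embedding` layer.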

Decoder:

    class Decoder(nn.Module):
        def __init__(self, encoded_space_dim):
            super().__init__()
            self.input_dim = (1, 128, 128)
            self.latent_dim = encoded_space_dim
            self.n_channels = 1

            layers = nn.ModuleList()
            # Latent vector -> flattened 128-channel 16x16 feature map
            layers.append(nn.Linear(encoded_space_dim, 128 * 16 * 16))
            # Three stride-2 transposed convolutions: 16x16 -> 32x32 -> 64x64 -> 128x128
            layers.append(nn.ConvTranspose2d(128, 128, 3, 2, padding=1, output_padding=1))
            layers.append(
                nn.Sequential(
                    ResBlock(in_channels=128, out_channels=32),
                    ResBlock(in_channels=128, out_channels=32),
                    nn.ReLU(),
                )
            )
            layers.append(
                nn.Sequential(
                    nn.ConvTranspose2d(128, 64, 3, 2, padding=1, output_padding=1),
                    nn.ReLU(),
                )
            )
            layers.append(
                nn.Sequential(
                    nn.ConvTranspose2d(
                        64, self.n_channels, 3, 2, padding=1, output_padding=1
                    ),
                    nn.Sigmoid(),
                )
            )

            self.layers = layers
            self.depth = len(layers)

        def forward(self, x, output_layer_levels: List[int] = None):
            output = ModelOutput()
            max_depth = self.depth

            if output_layer_levels is not None:
                assert all(
                    self.depth >= levels > 0 or levels == -1
                    for levels in output_layer_levels
                ), (
                    f"Cannot output layer deeper than depth ({self.depth}). "
                    f"Got ({output_layer_levels})."
                )
                if -1 in output_layer_levels:
                    max_depth = self.depth
                else:
                    max_depth = max(output_layer_levels)

            out = x
            for i in range(max_depth):
                out = self.layers[i](out)
                if i == 0:
                    # Un-flatten the output of the linear layer
                    out = out.reshape(x.shape[0], 128, 16, 16)
                if output_layer_levels is not None:
                    if i + 1 in output_layer_levels:
                        output[f"reconstruction_layer_{i+1}"] = out
                if i + 1 == self.depth:
                    output["reconstruction"] = out
            return output
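The decoder's upsampling path can be checked the same way, using the output-size formula for transposed convolutions. This is a minimal plain-Python sketch with hypothetical helper names (`conv_transpose2d_out`, `decoder_sizes`); it again assumes that `ResBlock` does not change the spatial size.

```python
def conv_transpose2d_out(
    size: int, kernel: int, stride: int, padding: int, output_padding: int
) -> int:
    """Output length along one spatial axis of a transposed 2D convolution (dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding


# (kernel, stride, padding, output_padding) of the three ConvTranspose2d layers
DECODER_DECONVS = [(3, 2, 1, 1), (3, 2, 1, 1), (3, 2, 1, 1)]


def decoder_sizes(start_size: int = 16) -> list:
    """Spatial size after each transposed convolution, starting from the reshaped map."""
    sizes = [start_size]
    for kernel, stride, padding, output_padding in DECODER_DECONVS:
        sizes.append(
            conv_transpose2d_out(sizes[-1], kernel, stride, padding, output_padding)
        )
    return sizes


print(decoder_sizes(16))
```

Each transposed convolution doubles the spatial size, so the 16x16 map produced by the reshape ends up at 128x128, matching the input images.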

Autoencoder:

    class RAE_L2(nn.Module):
        def __init__(self, latent_dim=16):
            super(RAE_L2, self).__init__()
            self.latent_dim = latent_dim
            self.encoder = Encoder(latent_dim)
            self.decoder = Decoder(latent_dim)
            self.model_name = "RAE_L2"

        def forward(self, x, **kwargs):
            z = self.encoder(x).embedding
            recon_x = self.decoder(z)["reconstruction"]
            loss, recon_loss, embedding_loss = self.loss_function(recon_x, x, z)
            return recon_x, loss

        def loss_function(self, recon_x, x, z):
            recon_loss = torch.nn.functional.mse_loss(
                recon_x.reshape(x.shape[0], -1), x.reshape(x.shape[0], -1), reduction="none"
            ).sum(dim=-1)
            embedding_loss = 0.5 * torch.linalg.norm(z, dim=-1) ** 2
            return (
                (recon_loss + 1e-2 * embedding_loss).mean(dim=0),
                (recon_loss).mean(dim=0),
                (embedding_loss).mean(dim=0),
            )

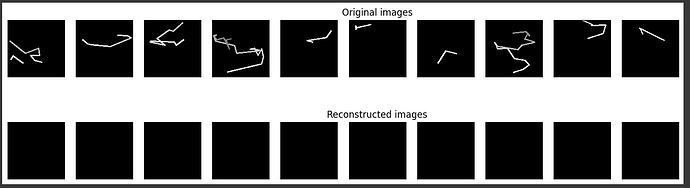

However after training the results were below expectations, at the end of 30 epochs the resulting loss was 16214.4103 and the reconstructions were something like:

Is there something missing from the autoencoder architecture?
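For context on the magnitude of the reported loss: `loss_function` sums the squared error over all pixels of an image before averaging over the batch, so the value scales with image size. The rough sketch below divides the reported number by the pixel count. It assumes the reported 16214.4103 is the `loss` value returned by `forward`; the helper name `per_pixel_upper_bound` is hypothetical.

```python
REPORTED_LOSS = 16214.4103        # loss reported after 30 epochs
PIXELS_PER_IMAGE = 1 * 128 * 128  # channels * height * width


def per_pixel_upper_bound(total_loss: float, n_pixels: int) -> float:
    """Upper bound on the mean per-pixel squared error.

    The total also contains the non-negative 1e-2 * embedding_loss term,
    so the true per-pixel reconstruction error can only be smaller.
    """
    return total_loss / n_pixels


bound = per_pixel_upper_bound(REPORTED_LOSS, PIXELS_PER_IMAGE)
print(f"per-pixel squared error is at most {bound:.4f}")
```

Because this is only an upper bound, it does not say which term dominates. `loss_function` already returns `recon_loss` and `embedding_loss` separately, so logging those two values would show how much of the total comes from reconstruction and how much from the latent penalty.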