Hi everyone,

I asked this question on social media:

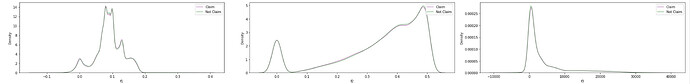

I am working on dimensionality reduction techniques and chose a denoising autoencoder (DAE) as one of them, because it works with both linear and non-linear data. I have 120 features and almost one million records. The DAE gives me a latent space of 80 features, i.e. I reduced the features from 120 to 80. The 80 is arbitrary; I also tried other sizes picked at random. Is there a systematic approach to choosing the size of the latent space?

The real problem is binary classification (predict 1 or 0). When I feed the 80-feature latent space into the downstream algorithm, LightGBM, I don't see any improvement in model accuracy. Plain LightGBM on the original 120 features gives me 80% accuracy; LightGBM on the 80 latent features gives me 55%, which with a balanced target is close to random guessing. The target classes are balanced 50/50. My reason for reducing features is to avoid overfitting and get a simpler model.

I also ran feature selection, and almost every feature contributes fairly, in combination with other features, to predicting the 1/0 target. That is why I chose a DAE. I also checked that every feature's distribution overlaps completely between target classes 1 and 0, as shown in the attachment.

Any idea how I can reduce these features from 120 to 80 or fewer? PCA crashes because it needs more memory than I have at this stage. An autoencoder seems promising in terms of speed and memory, and it can capture non-linear as well as linear structure, while PCA only captures linear structure. Can anyone suggest why the autoencoder is not converging well? I tried different numbers of layers and neurons with no luck. Please share any ideas about autoencoders. Thanks!
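On choosing the latent size: one common heuristic (not from the original post) is to look at how much variance the first k principal components explain and pick the smallest k that reaches a threshold. The memory problem may be avoidable, because PCA only needs the 120 × 120 covariance matrix, which can be accumulated over chunks of rows instead of loading all one million records at once. Below is a minimal NumPy sketch, assuming the data can be streamed in chunks; the function names `streaming_covariance` and `choose_n_components` and the 95% threshold are hypothetical choices. It measures linear structure only, so for an autoencoder it is a rough starting point rather than a rule.

```python
import numpy as np


def streaming_covariance(chunks):
    """Accumulate the feature covariance matrix one chunk of rows at a time.

    Only running sums are kept, so memory is O(d^2) for d features,
    no matter how many rows are streamed.
    """
    n = 0
    total = None  # running sum of rows, shape (d,)
    outer = None  # running sum of x x^T, shape (d, d)
    for chunk in chunks:
        chunk = np.asarray(chunk, dtype=np.float64)
        if total is None:
            total = np.zeros(chunk.shape[1])
            outer = np.zeros((chunk.shape[1], chunk.shape[1]))
        n += chunk.shape[0]
        total += chunk.sum(axis=0)
        outer += chunk.T @ chunk
    mean = total / n
    # sum((x - mean)(x - mean)^T) = sum(x x^T) - n * mean mean^T
    return (outer - n * np.outer(mean, mean)) / (n - 1)


def choose_n_components(chunks, threshold=0.95):
    """Smallest k whose top-k principal components explain >= threshold of the variance."""
    cov = streaming_covariance(chunks)
    # eigvalsh returns ascending eigenvalues; reverse to descending, clip round-off negatives
    eigvals = np.clip(np.linalg.eigvalsh(cov)[::-1], 0.0, None)
    ratio = np.cumsum(eigvals) / eigvals.sum()
    k = int(np.searchsorted(ratio, threshold) + 1)
    return k, ratio


# Synthetic stand-in: 120 features driven by 10 hidden factors plus a little noise.
rng = np.random.default_rng(0)
factors = rng.normal(size=(5000, 10))
mixing = rng.normal(size=(10, 120))
X = factors @ mixing + 0.01 * rng.normal(size=(5000, 120))

chunks = (X[i:i + 1000] for i in range(0, len(X), 1000))
k, ratio = choose_n_components(chunks)
print(f"{k} components explain {ratio[k - 1]:.1%} of the variance")
```

On real data the chunks could come from reading the file in pieces (for example `pandas.read_csv(..., chunksize=100_000)`, with the target column dropped). If k comes out well below 80, that suggests much of the 120-dimensional space is redundant, at least linearly.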

Someone replied:

Add a separate classification head to your decoder and train on a combined loss (say 50% reconstruction loss + 50% classification loss)
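Written out, the suggested objective would look like this, assuming mean squared error for the reconstruction term and binary cross-entropy for the 0/1 target (the reply does not name the losses). Here $x_i$ is record $i$ with $D = 120$ features, $\hat{x}_i$ its reconstruction, $y_i \in \{0, 1\}$ its label, $\hat{y}_i$ the classification head's predicted probability, $N$ the number of records, and $\alpha = 0.5$ gives the 50/50 split:

$$
\mathcal{L} = \alpha \underbrace{\frac{1}{ND}\sum_{i=1}^{N}\lVert \hat{x}_i - x_i \rVert^2}_{\text{reconstruction}} + (1-\alpha)\underbrace{\left(-\frac{1}{N}\sum_{i=1}^{N}\Big[y_i \log \hat{y}_i + (1-y_i)\log(1-\hat{y}_i)\Big]\right)}_{\text{classification}}, \qquad \alpha = 0.5
$$

Gradients from both terms flow back into the shared encoder, so the latent features are pushed to be useful for reconstructing the inputs and for predicting the target at the same time.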

I didn't understand it, so I asked the same person what they meant by a classification head, and how I can add a separate classification head to the decoder and train on a combined loss (say 50% reconstruction loss + 50% classification loss).

Does anybody have an idea? Please give me some dummy code, and explain why we need it.
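Why it may help: an autoencoder trained only on reconstruction keeps whatever explains the most variation in the 120 inputs, which need not be what separates target 1 from target 0. That is one plausible reason the 80 latent features score near chance in LightGBM. A classification head is just a small extra output layer that predicts the target from the network's internal representation; adding its loss forces the latent code to keep label-relevant information. Below is a minimal sketch in plain NumPy so it runs without a deep-learning framework (PyTorch or Keras would normally compute the gradients for you). Assumptions not in the original: one tanh encoder layer, Gaussian input noise, MSE plus binary cross-entropy, and the head attached to the latent code z, which is the usual reading of this suggestion even though the reply says "decoder". The class name `DAEWithClassifier` and all data are hypothetical.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


class DAEWithClassifier:
    """Denoising autoencoder whose latent code also feeds a binary classification head.

    encoder: z     = tanh(x_noisy @ W1 + b1)   (n_features -> n_latent)
    decoder: x_hat = z @ W2 + b2               (n_latent -> n_features)
    head:    p     = sigmoid(z @ w3 + b3)      (n_latent -> 1)
    """

    def __init__(self, n_features, n_latent, alpha=0.5, noise_std=0.1, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W1 = self.rng.normal(0, 1 / np.sqrt(n_features), (n_features, n_latent))
        self.b1 = np.zeros(n_latent)
        self.W2 = self.rng.normal(0, 1 / np.sqrt(n_latent), (n_latent, n_features))
        self.b2 = np.zeros(n_features)
        self.w3 = self.rng.normal(0, 1 / np.sqrt(n_latent), (n_latent, 1))
        self.b3 = np.zeros(1)
        self.alpha = alpha          # weight on the reconstruction loss
        self.noise_std = noise_std  # std of the Gaussian input corruption

    def encode(self, x):
        # At inference time, encode the clean (uncorrupted) inputs.
        return np.tanh(x @ self.W1 + self.b1)

    def train_step(self, x, y, lr=0.1):
        """One full-batch gradient-descent step on the combined loss."""
        n, d = x.shape
        y = y.reshape(-1, 1).astype(np.float64)
        # Denoising: corrupt the input, but reconstruct the clean x.
        x_noisy = x + self.rng.normal(0, self.noise_std, x.shape)
        z = np.tanh(x_noisy @ self.W1 + self.b1)
        x_hat = z @ self.W2 + self.b2
        p = sigmoid(z @ self.w3 + self.b3)

        eps = 1e-12
        rec_loss = np.mean((x_hat - x) ** 2)
        cls_loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
        loss = self.alpha * rec_loss + (1 - self.alpha) * cls_loss

        # Backpropagate both terms; they meet in the shared latent code z.
        d_xhat = self.alpha * 2.0 * (x_hat - x) / (n * d)
        d_logit = (1 - self.alpha) * (p - y) / n
        d_z = d_xhat @ self.W2.T + d_logit @ self.w3.T
        d_pre = d_z * (1 - z ** 2)  # derivative of tanh

        grads = {
            "W2": z.T @ d_xhat, "b2": d_xhat.sum(axis=0),
            "w3": z.T @ d_logit, "b3": d_logit.sum(axis=0),
            "W1": x_noisy.T @ d_pre, "b1": d_pre.sum(axis=0),
        }
        for name, g in grads.items():
            setattr(self, name, getattr(self, name) - lr * g)
        return loss, rec_loss, cls_loss


# Synthetic stand-in data: 120 features and a roughly balanced 0/1 target.
rng = np.random.default_rng(1)
X = rng.normal(size=(2000, 120))
y = (X[:, :5].sum(axis=1) > 0).astype(int)

model = DAEWithClassifier(n_features=120, n_latent=80, alpha=0.5)
first_loss = model.train_step(X, y)[0]
for _ in range(300):
    last_loss, rec_loss, cls_loss = model.train_step(X, y)
print(f"combined loss: {first_loss:.3f} -> {last_loss:.3f}")

# These 80 columns are what would be passed to LightGBM instead of the raw 120.
Z = model.encode(X)
```

The 50/50 weighting is only a starting point: the two losses live on different scales, so `alpha` is worth tuning. Train the autoencoder (including its head) on the training split only; otherwise the latent features carry target information into the validation data and the LightGBM accuracy will look better than it really is.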