Introduction:

I am trying to train an autoencoder on 32 features (position, velocity, etc.) sampled over 32 time steps, so each sample is a 32x32 ‘image’.

For this I built a simple model of linear layers, with a Tanh activation after every layer and an encoder and decoder that are symmetric.
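A minimal sketch of the kind of model described above, for readers who want something concrete to reproduce with. The class name `SymmetricAutoencoder` and the hidden sizes `(256, 64)` are assumptions for illustration, not the values from the notebook.

```python
import torch
from torch import nn


class SymmetricAutoencoder(nn.Module):
    """Fully connected autoencoder with a mirrored decoder and Tanh after every layer."""

    def __init__(self, input_dim=32 * 32, hidden_dims=(256, 64)):  # sizes are assumed
        super().__init__()
        dims = (input_dim, *hidden_dims)
        encoder_layers = []
        for d_in, d_out in zip(dims[:-1], dims[1:]):
            encoder_layers += [nn.Linear(d_in, d_out), nn.Tanh()]
        # The decoder walks the same sizes in reverse order.
        reversed_dims = dims[::-1]
        decoder_layers = []
        for d_in, d_out in zip(reversed_dims[:-1], reversed_dims[1:]):
            decoder_layers += [nn.Linear(d_in, d_out), nn.Tanh()]
        self.encoder = nn.Sequential(*encoder_layers)
        self.decoder = nn.Sequential(*decoder_layers)

    def forward(self, x):
        flat = x.flatten(start_dim=1)          # (batch, 32, 32) -> (batch, 1024)
        recon = self.decoder(self.encoder(flat))
        return recon.view_as(x)                # back to (batch, 32, 32)
```

Because Tanh is also applied to the last decoder layer, the reconstruction is bounded to (-1, 1), so this sketch only makes sense if the inputs are scaled to that range.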

During training I added my own version of dropout, applied to the input only. (In the future I will use nn.Dropout.)
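For reference, input-only dropout can be expressed with `nn.Dropout` roughly as follows. This is a sketch, not the custom version used in the notebook; the wrapper name `InputDropout` and the rate `p=0.1` are assumptions.

```python
import torch
from torch import nn


class InputDropout(nn.Module):
    """Applies dropout to the input only, then runs the wrapped model."""

    def __init__(self, model, p=0.1):  # p is an assumed value
        super().__init__()
        self.drop = nn.Dropout(p)
        self.model = model

    def forward(self, x):
        # nn.Dropout zeroes entries and rescales the rest by 1/(1-p) in train mode;
        # in eval mode it is the identity, so evaluation sees the clean input.
        return self.model(self.drop(x))
```

Note that `nn.Dropout` rescales the surviving entries, which a hand-written mask may or may not do, so the two are not necessarily equivalent.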

Problem:

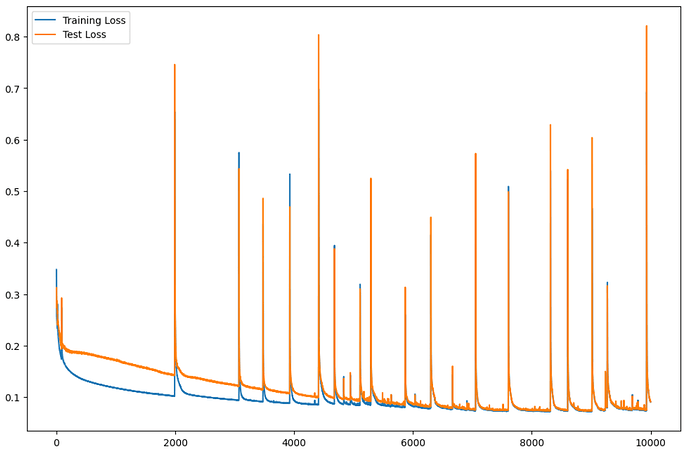

I get large spikes in the loss function, sqrt(MSE), at irregular intervals (Batch_Size = 6000).
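The loss referred to here can be sketched as below. The function name `rmse_loss` and the `eps` term are assumptions; the notebook may compute sqrt(MSE) without any epsilon. The epsilon is included only because the derivative of the square root grows without bound as the MSE approaches zero, and this is not claimed to be the cause of the spikes.

```python
import torch
from torch import nn


def rmse_loss(pred, target, eps=1e-8):
    """sqrt(MSE) with a small assumed epsilon to keep the gradient finite at MSE = 0."""
    mse = nn.functional.mse_loss(pred, target)
    return torch.sqrt(mse + eps)
```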

What I have tried: (small test, 1000 epochs max)

- clip_grad_norm_(model.parameters(), max_norm = 0.5).

- ReLU and ELU activation functions

- Batch = N / 2 (I wanted to use N, but my GPU did not have enough memory)

- Not adding noise or dropout (I think the noise/dropout helps, but it does not solve the problem)
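To make the gradient clipping attempt in the list above concrete, a single training step might look like this. It is a sketch under assumptions: the helper name `train_step` is hypothetical, and the optimizer is left to the caller because the post does not say which one is used.

```python
import torch
from torch import nn


def train_step(model, optimizer, batch, max_norm=0.5):
    """One autoencoder step with sqrt(MSE) loss and gradient-norm clipping."""
    optimizer.zero_grad()
    recon = model(batch)
    loss = torch.sqrt(nn.functional.mse_loss(recon, batch))
    loss.backward()
    # Clipping has to happen after backward() and before step(),
    # otherwise the optimizer applies the unclipped gradients.
    torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=max_norm)
    optimizer.step()
    return loss.item()
```

Clipping bounds the size of the gradient, but with an adaptive optimizer the size of the resulting parameter update also depends on the optimizer state.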

Nothing has worked.

Can someone explain to me why this happens and how to fix it?

Thanks a lot in advance, I really appreciate it.

Jupyter notebook:

Edit: Added code for plotting the gradient norm over epochs (without noise/dropout).
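The gradient norm mentioned in the edit can be computed along these lines. This is a sketch, not the notebook's code; `global_grad_norm` is a hypothetical helper name, and it is meant to be called after `loss.backward()` so the values can be appended to a list and plotted per epoch.

```python
import torch
from torch import nn


def global_grad_norm(model):
    """L2 norm over all parameter gradients, or 0.0 if no gradients exist yet."""
    norms = [p.grad.detach().norm(2) for p in model.parameters() if p.grad is not None]
    if not norms:
        return 0.0
    # The norm of the per-parameter norms equals the norm of all gradients concatenated.
    return torch.norm(torch.stack(norms), 2).item()
```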