Hi,

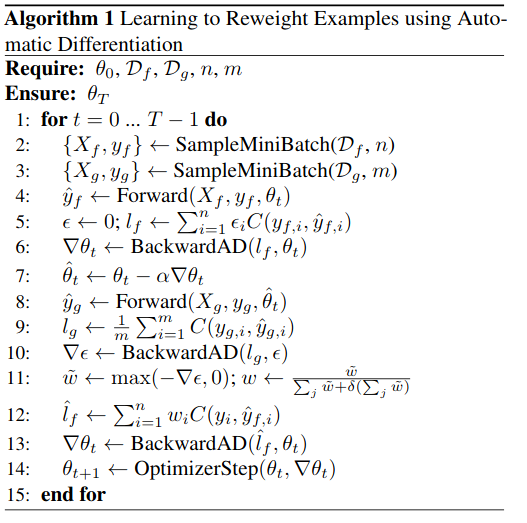

I’m trying to implement the algorithm described here.

A short description of the related part is as follows.

My attempt is as follows.

# 1. Forward-backward pass on training data

_, (inputs, labels) = next(enumerate(train_loader))

inputs, labels = inputs.to(device=args.device, non_blocking=True),\

labels.to(device=args.device, non_blocking=True)

meta_model.load_state_dict(model.state_dict())

y_hat_f = meta_model(inputs)

criterion.reduction = 'none'

l_f = criterion(y_hat_f, labels)

eps = torch.rand(l_f.size(), requires_grad=False, device=args.device).div(1e6)

eps.requires_grad = True

l_f = torch.sum(eps * l_f)

# 2. Compute grads wrt model and update its params

l_f.backward(retain_graph=True)

meta_optimizer.step()

# 3. Forward-backward pass on meta data with updated model

_, (inputs, labels) = next(enumerate(meta_loader))

inputs, labels = inputs.to(device=args.device, non_blocking=True),\

labels.to(device=args.device, non_blocking=True)

y_hat_g = model(inputs)

criterion.reduction = 'mean'

l_g = criterion(y_hat_g, labels)

# 4. Compute grads wrt eps and update weights

eps_grads = torch.autograd.grad(l_g, eps)

.....

At this line:

eps_grads = torch.autograd.grad(l_g, eps)

I get an error saying,

RuntimeError: One of the differentiated Tensors appears to not have been used in the graph. Set allow_unused=True if this is the desired behavior.

If I set allow_used=True, it returns None to eps_grad.

As far as I can tell, autograd loses computation graph for some reason and doesn’t retain the information that eps was used in the computation of l_f, which in turn used in updating parameters of the model. So, l_g should be differentiable wrt eps but it doesn’t work here.

How can I solve this ?