Hi,

I use PyTorch’s automatic gradient function to compute the Jacobian and supply it to IPOPT to solve an NLP problem.

Now I want to take the Jacobian of the following equation:

def eval_g(x):

""" The system of non-linear equilibrium conditions

x[0]: Capital stock in the next period

"""

assert len(x) == nvar

# Consumption today

con = a * k**alpha * ls**(1-alpha) - x[0]

# Labor supply tomorrow

# ls_plus = np.empty(x5.shape)

ls_plus = torch.empty(x5.shape)

for aplus_idx, aplus_val in enumerate(aplus):

ls_plus[aplus_idx] = ls_compute(

k=x[0], A=aplus_val, alpha=alpha, psi=psi, theta=theta)

# Capital stock tomorrow

k_plusplus = torch.empty(aplus.shape)

for aplus_idx, aplus_val in enumerate(aplus):

state_plus = torch.tensor([x[0], aplus_val])[None, :].float()

observed_pred = likelihood(gp_kplus(state_plus))

# k_plusplus[aplus_idx] = observed_pred.mean

k_plusplus[aplus_idx] = torch.tensor(k)

# Consumption tomorrow

con_plus = aplus * x[0]**alpha * ls_plus**(1-alpha) - k_plusplus

# ------------------------------------------------------------------- #

# Euler equation

# ------------------------------------------------------------------- #

g0 = 1 / con - beta * alpha * torch.sum(omega5 * (

1 / con_plus * aplus * x[0]**(alpha-1) * ls_plus**(1-alpha)))

return np.array([g0])

Note that the equation that I want to compute the Jacobian is g0.

Then I compute the Jacobian of the above function with the following syntax:

def eval_jac_g(x, flag):

""" Numerical approximation of the Jacobian of the system of

non-linear equilibrium conditions

Jacobian is computed by the automatic gradient of PyTorch """

assert len(x) == nvar

"""

Big skip

"""

else:

# Automatic gradient by PyTorch

assert len(x) == nvar

_eval_jac_g = np.empty(nnzj)

for i in range(ncon):

x_tensor = torch.tensor(x, requires_grad=True)

eval_g_tensor = eval_g(x_tensor)[i]

grads = torch.autograd.grad(eval_g_tensor, x_tensor, create_graph=True)

for j in range(nvar):

_eval_jac_g[j + i*nvar] =grads[0][j].detach().numpy()

return _eval_jac_g

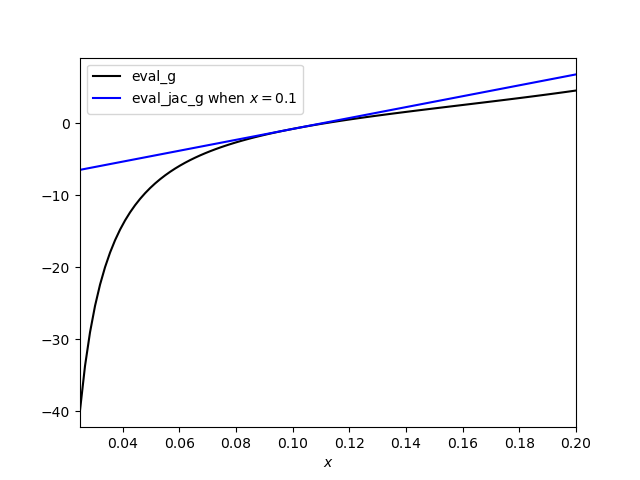

In my previous projects, I can compute the correct Jacobian, but without success in this time.

When I remove the items started from torch.sum(), it returns the correct Jacobian; therefore, I strongly suspect that I did something wrong in torch.sum().

How should I modify the code with torch.sum() to compute the correct Jacobian?

Any comments are highly appreciated.

Thank you in advance.

Takafumi