I am trying to manually derive and update the gradients for a simple neural network.

The input size is 12×12. I apply a 10×3×3 kernel, so the conv layer's output features are 10×10×10. I flatten them to a 1×1000 vector, which connects to a fully connected (FC) layer with a 1000×2 weight matrix. I can update the FC layer's weights, but how do I carry the update from the 1000×2 weight matrix back to the 10×3×3 kernel?
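Here is a minimal NumPy sketch of the full forward and backward pass for this setup. It assumes a single-channel 12×12 input, a valid cross-correlation (stride 1, no padding), no biases, and a mean-squared-error loss, because the actual loss is not stated here. The function names `conv2d_valid` and `forward_backward` are hypothetical. The step that reaches the kernel is this: the gradient with respect to the flattened 1000-vector is reshaped back to 10×10×10. Each kernel weight then sums the contributions from every output position it touched.

```python
import numpy as np

def conv2d_valid(x, k):
    """Cross-correlate a single-channel image x (H, W) with kernels k (F, kh, kw)."""
    F, kh, kw = k.shape
    H, W = x.shape
    out = np.zeros((F, H - kh + 1, W - kw + 1))
    for f in range(F):
        for p in range(H - kh + 1):
            for q in range(W - kw + 1):
                out[f, p, q] = np.sum(x[p:p + kh, q:q + kw] * k[f])
    return out

def forward_backward(x, k, W_fc, target):
    # Forward pass
    z = conv2d_valid(x, k)              # conv output, (10, 10, 10)
    h = z.reshape(-1)                   # flattened, (1000,)
    y = h @ W_fc                        # FC output, (2,)
    loss = np.mean((y - target) ** 2)   # assumed MSE loss

    # Backward pass, layer by layer (chain rule)
    dy = 2.0 * (y - target) / y.size    # dL/dy, (2,)
    dW_fc = np.outer(h, dy)             # dL/dW_fc, (1000, 2)
    dh = W_fc @ dy                      # dL/dh, (1000,)
    dz = dh.reshape(z.shape)            # undo the flatten, (10, 10, 10)
    dk = np.zeros_like(k)               # dL/dk, (10, 3, 3)
    F, kh, kw = k.shape
    for f in range(F):
        for i in range(kh):
            for j in range(kw):
                # k[f, i, j] multiplied x[p + i, q + j] for every output (p, q)
                dk[f, i, j] = np.sum(dz[f] * x[i:i + z.shape[1], j:j + z.shape[2]])
    return loss, dW_fc, dk

rng = np.random.default_rng(0)
x = rng.standard_normal((12, 12))
k = rng.standard_normal((10, 3, 3)) * 0.1
W_fc = rng.standard_normal((1000, 2)) * 0.01
target = np.array([1.0, 0.0])

loss, dW_fc, dk = forward_backward(x, k, W_fc, target)
lr = 0.01
W_fc -= lr * dW_fc   # update the FC weights
k -= lr * dk         # update the conv kernels
```

Both gradients come from one backward pass. The kernel gradient does not use the *updated* FC weights. It uses the gradient that flows through them.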

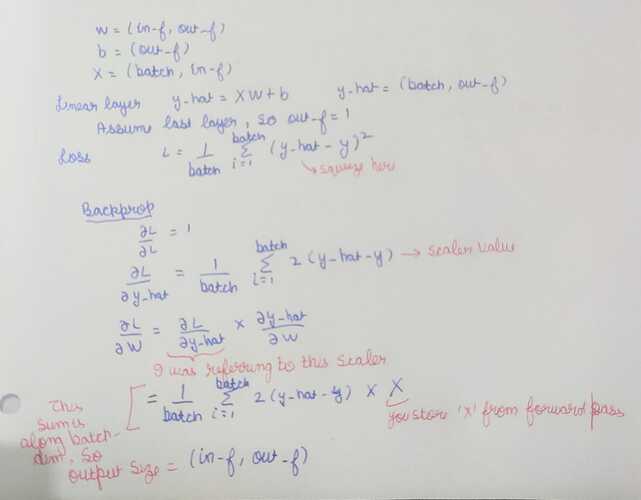

Your loss function contains an average. When you backprop from the loss to the FC layer, you get a scalar value (a vector in the case of batching) because of the average. When calculating the gradients of the conv layer, you multiply this scalar by the conv layer's gradients.
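The following small sketch shows where the averaging factor comes from. It assumes an MSE loss averaged over all N = B × 2 output elements of a batch, with B = 4 chosen arbitrarily. The gradient of the mean loss with respect to the FC outputs equals the un-averaged gradient times 1/N. A central finite difference checks this below.

```python
import numpy as np

rng = np.random.default_rng(0)
B, n_out = 4, 2
y = rng.standard_normal((B, n_out))   # FC outputs for a batch of B samples
t = rng.standard_normal((B, n_out))   # targets
N = y.size                            # number of averaged terms

def mean_loss(y):
    # assumed loss: squared error averaged over every element
    return np.mean((y - t) ** 2)

dy_sum = 2.0 * (y - t)   # gradient of the summed (un-averaged) loss
dy_mean = dy_sum / N     # the average contributes a constant factor 1/N

# Central finite-difference check of one entry of dy_mean
eps = 1e-6
yp, ym = y.copy(), y.copy()
yp[1, 0] += eps
ym[1, 0] -= eps
numeric = (mean_loss(yp) - mean_loss(ym)) / (2 * eps)
print(numeric, dy_mean[1, 0])
```

Every later gradient (with respect to the FC weights, the flattened features, and the kernels) is obtained by multiplying by `dy_mean`. That 1/N factor is therefore carried all the way back to the conv layer.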

If you derive the equations, it will become clearer. If something needs more clarification, feel free to ask.
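Here is one way to write out those equations for the setup in the question. It assumes a single-channel input $x \in \mathbb{R}^{12\times 12}$, ten $3\times 3$ kernels $k_f$ applied as a valid cross-correlation, no biases, row-major flattening, FC weights $W \in \mathbb{R}^{1000\times 2}$, and an MSE loss averaged over the $N = 2$ outputs with targets $t$. The loss choice is an assumption. All indices start at 0.

$$
\begin{aligned}
z_{f,p,q} &= \sum_{i=0}^{2}\sum_{j=0}^{2} x_{p+i,\,q+j}\, k_{f,i,j}, \qquad f, p, q = 0,\dots,9 \\
h_{100f + 10p + q} &= z_{f,p,q}, \qquad y_m = \sum_{n=0}^{999} W_{n,m}\, h_n, \qquad L = \frac{1}{N}\sum_{m=0}^{1} (y_m - t_m)^2 \\
\frac{\partial L}{\partial y_m} &= \frac{2}{N}\,(y_m - t_m) \\
\frac{\partial L}{\partial W_{n,m}} &= h_n\, \frac{\partial L}{\partial y_m} \\
\frac{\partial L}{\partial h_n} &= \sum_{m=0}^{1} W_{n,m}\, \frac{\partial L}{\partial y_m} \\
\frac{\partial L}{\partial z_{f,p,q}} &= \frac{\partial L}{\partial h_{100f + 10p + q}} \\
\frac{\partial L}{\partial k_{f,i,j}} &= \sum_{p=0}^{9}\sum_{q=0}^{9} \frac{\partial L}{\partial z_{f,p,q}}\, x_{p+i,\,q+j}
\end{aligned}
$$

The factor $2/N$ from the average appears in $\partial L/\partial y_m$. Every gradient below it inherits that factor.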

Hi kushaj, thank you for your answer, but I am still confused. If we backpropagate from the loss to the FC layer weights, which here are 1000×2, how do we get a scalar? Shouldn't it still be a 1000×2 weight matrix with all the weight values updated?
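This short sketch lays out the shapes involved. It assumes a single sample, an MSE loss averaged over the two outputs, a hypothetical target, and random placeholder values for the flattened features `h` and the weights `W`. The weight gradient `dW` has the same 1000×2 shape as `W` and is what updates it. What flows back to the conv layer is a different quantity: the gradient with respect to the FC input, `dh = W @ dy`. This is a 1000-vector that reshapes to 10×10×10. The upstream value from the loss, `dy`, has only two entries here.

```python
import numpy as np

rng = np.random.default_rng(0)
h = rng.standard_normal(1000)               # flattened conv output (10*10*10), placeholder
W = rng.standard_normal((1000, 2)) * 0.01   # FC weights, placeholder
t = np.array([1.0, 0.0])                    # hypothetical target

y = h @ W                        # FC output, shape (2,)
dy = 2.0 * (y - t) / y.size      # dL/dy for the assumed averaged MSE loss, shape (2,)

dW = np.outer(h, dy)             # dL/dW: same shape as W, used to update W
dh = W @ dy                      # dL/dh: passed back towards the conv layer
dz = dh.reshape(10, 10, 10)      # one gradient value per conv output element

lr = 0.01
W_new = W - lr * dW              # update W only after dh has been computed

print(dy.shape, dW.shape, dh.shape, dz.shape)
# (2,) (1000, 2) (1000,) (10, 10, 10)
```

In this sketch, `dh` is computed with the weights from before the update. That is the usual order in gradient descent: first compute all gradients at the current parameters, then update.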