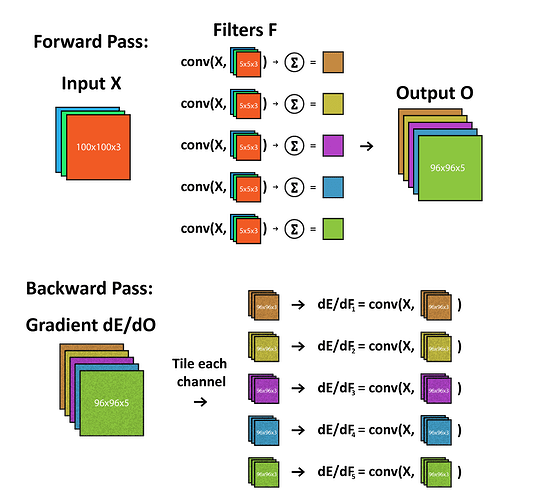

Let's say a convolutional layer takes an input $X$ of shape 5x100x100 and applies 10 filters $F$ of shape 5x5x5, producing an output $O$ of 10 feature maps of size 96x96.
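These shapes follow from a stride-1 convolution without padding: each spatial side shrinks by the kernel size minus one, so 100 - 5 + 1 = 96. Below is a minimal NumPy sketch of that forward pass. It assumes no bias and the cross-correlation convention that `torch.nn.Conv2d` uses. `conv_forward` is a hypothetical helper name, not a library function, and the data are random.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_forward(X, F):
    """Valid cross-correlation, stride 1, no padding, no bias (illustrative helper).

    X: (C, H, W) input, F: (K, C, kh, kw) filters -> O: (K, H-kh+1, W-kw+1)
    """
    # Every kh x kw window of every channel: shape (C, H-kh+1, W-kw+1, kh, kw)
    windows = sliding_window_view(X, F.shape[2:], axis=(1, 2))
    # Sum over input channels c and kernel offsets (u, v) for each filter k
    return np.einsum("cijuv,kcuv->kij", windows, F)


rng = np.random.default_rng(0)
X = rng.standard_normal((5, 100, 100))    # 5 channels, 100x100
F = rng.standard_normal((10, 5, 5, 5))    # 10 filters, each 5x5x5
O = conv_forward(X, F)
print(O.shape)  # (10, 96, 96)
```

Each of the 10 filters spans all 5 input channels, so the channel axis is summed away and every filter produces one 96x96 map.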

During backpropagation, the layer receives $dE/dO$ of shape 10x96x96.

My question is: how do I compute $dE/dF$?

According to that article (*Forward And Backpropagation in Convolutional Neural Network* by Sujit Rai, on Medium), $dE/dF$ can be calculated as a convolution between $X$ and $dE/dO$.
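For one input channel and one filter, this claim follows from the chain rule. The derivation below is a sketch that assumes a valid cross-correlation with stride 1 and no padding (what `torch.nn.Conv2d` computes). Indices $u, v$ run over the kernel and $i, j$ over the output:

$$O_{i,j} = \sum_{u,v} F_{u,v}\, X_{i+u,\,j+v}$$

$$\frac{dE}{dF_{u,v}} = \sum_{i,j} \frac{dE}{dO_{i,j}}\,\frac{dO_{i,j}}{dF_{u,v}} = \sum_{i,j} \frac{dE}{dO_{i,j}}\, X_{i+u,\,j+v}$$

The last sum slides $dE/dO$ over $X$, so it is a valid cross-correlation of $X$ with $dE/dO$. Its result has the kernel's size: $(100 - 96 + 1) \times (100 - 96 + 1) = 5 \times 5$.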

Unfortunately, the article does not cover the case with multiple filters and multiple input channels.

Since $X$ has shape 5x100x100 and $dE/dO$ has shape 10x96x96, the depth of $X$ is 5 while the depth of $dE/dO$ is 10, so the depth dimensions do not match. How do I compute the convolution in that case?
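With several channels and filters, the single-channel argument applies to each (filter, channel) pair separately. Output map $k$ depends on input channel $c$ only through the slice $F_{k,c}$, so $dE/dF_{k,c} = X_c \star dE/dO_k$, a valid 2-D cross-correlation. No single correlation ever mixes the 5 input channels with the 10 output maps, so the depths never need to match. The 10 x 5 results stack into a 10x5x5x5 gradient, the same shape as $F$.

The NumPy sketch below assumes stride 1, no padding, and no bias. The helpers `conv_forward` and `conv_weight_grad` are hypothetical names, and the random `dE_dO` stands in for a real upstream gradient. It also checks one entry with a finite difference:

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_forward(X, F):
    """Valid cross-correlation, stride 1, no padding, no bias.
    X: (C, H, W), F: (K, C, kh, kw) -> O: (K, H-kh+1, W-kw+1)"""
    windows = sliding_window_view(X, F.shape[2:], axis=(1, 2))  # (C, H', W', kh, kw)
    return np.einsum("cijuv,kcuv->kij", windows, F)


def conv_weight_grad(X, dE_dO):
    """dE/dF[k, c] = valid cross-correlation of X[c] with dE/dO[k].
    X: (C, H, W), dE_dO: (K, H', W') -> dE/dF: (K, C, H-H'+1, W-W'+1)"""
    windows = sliding_window_view(X, dE_dO.shape[1:], axis=(1, 2))  # (C, kh, kw, H', W')
    # Sum over output positions (i, j): one 2-D correlation per (k, c) pair
    return np.einsum("cuvij,kij->kcuv", windows, dE_dO)


rng = np.random.default_rng(0)
X = rng.standard_normal((5, 100, 100))
F = rng.standard_normal((10, 5, 5, 5))
dE_dO = rng.standard_normal((10, 96, 96))  # stand-in for the upstream gradient

dE_dF = conv_weight_grad(X, dE_dO)
print(dE_dF.shape)  # (10, 5, 5, 5), the same shape as F


def E(F_):
    # A loss whose gradient w.r.t. O is exactly dE_dO: E = sum(dE_dO * O)
    return np.sum(dE_dO * conv_forward(X, F_))


# E is linear in F, so a finite difference on one weight recovers its gradient
k, c, u, v = 7, 2, 1, 4
eps = 1e-3
F_shift = F.copy()
F_shift[k, c, u, v] += eps
fd = (E(F_shift) - E(F)) / eps
print(np.isclose(fd, dE_dF[k, c, u, v]))  # True
```

Because this $E$ is linear in $F$, the finite difference is exact up to floating-point rounding, which makes it a convenient check.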

Link to the question on Data Science Stack Exchange: *An error with respect to filter weights in CNN during the backpropagation*

The author posted a solution to this problem, shown in the image above. However, that solution implies that the gradient of each filter is the same across all of its channels, which I could not reproduce with my code.

Is the method wrong, or is something wrong with my code?
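Under the per-pair formula $dE/dF_{k,c} = X_c \star dE/dO_k$, the channel slices of one filter's gradient can only coincide when the input channels $X_c$ themselves coincide. The NumPy sketch below contrasts three distinct random channels with one gray channel copied three times. The helper name `conv_weight_grad`, the sizes, and the data are all hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_weight_grad(X, dE_dO):
    # dE/dF[k, c] = valid cross-correlation of X[c] with dE/dO[k]
    windows = sliding_window_view(X, dE_dO.shape[1:], axis=(1, 2))
    return np.einsum("cuvij,kij->kcuv", windows, dE_dO)


rng = np.random.default_rng(1)
dE_dO = rng.standard_normal((2, 28, 28))  # 2 filters, 28x28 output maps

# Case 1: three different input channels
X_rgb = rng.standard_normal((3, 32, 32))
g_rgb = conv_weight_grad(X_rgb, dE_dO)    # (2, 3, 5, 5)

# Case 2: one gray channel repeated three times
gray = rng.standard_normal((32, 32))
X_gray = np.stack([gray, gray, gray])
g_gray = conv_weight_grad(X_gray, dE_dO)

print(np.allclose(g_rgb[0, 0], g_rgb[0, 1]))    # False: channels differ
print(np.allclose(g_gray[0, 0], g_gray[0, 1]))  # True: channels are identical
```

One possibility worth checking, offered as an assumption rather than a diagnosis: if `trial_5.jpg` is a grayscale image, `cv2.imread` in its default color mode returns three identical channels, so the per-channel gradients would be identical too. For a genuine color photo the channels are usually strongly correlated, so the slices can look alike without being equal.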

```python
import cv2
import matplotlib.pyplot as plt
import numpy as np
import torch
import torch.nn as nn

# Reference target: trial_5.jpg read as grayscale and resized to 225x225, the output
# size of a 32x32 kernel with stride 1 on a 256x256 input (256 - 32 + 1 = 225).
ref_tensor1 = torch.from_numpy(
    cv2.resize(cv2.imread("./trial_5.jpg", cv2.IMREAD_GRAYSCALE).astype(np.float32), dsize=(225, 225))
)
ref_tensor1 = ref_tensor1.unsqueeze(0).unsqueeze(0)  # (1, 1, 225, 225)
print(ref_tensor1.shape)

# Color inputs: cv2 returns H x W x C (BGR order); move channels first to get C x H x W.
image1 = cv2.imread("./trial_5.jpg").astype(np.float32)
image1 = cv2.resize(image1, dsize=(256, 256))
image1 = np.moveaxis(image1, -1, 0)

image2 = cv2.imread("./trial_8.jpg").astype(np.float32)
image2 = cv2.resize(image2, dsize=(256, 256))
image2 = np.moveaxis(image2, -1, 0)

img_tensor1 = torch.from_numpy(image1).unsqueeze(0)     # (1, 3, 256, 256)
img_tensor2 = torch.from_numpy(image2).unsqueeze(0)
img_tensors = torch.cat((img_tensor1, img_tensor2), 0)  # (2, 3, 256, 256)
print("Input_image_shape:", img_tensors.shape)


class torch_model1(nn.Module):
    """A single Conv2d layer: ic input channels, oc filters, ks x ks kernels."""

    def __init__(self, ic, oc, ks):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels=ic, out_channels=oc, kernel_size=ks, stride=1)

    def forward(self, x):
        return self.conv1(x)


###1,3###
model1 = torch_model1(3, 3, 32)
op1 = model1(img_tensor1)  # (1, 3, 225, 225)
print(op1.shape)

# ref_tensor1 (1, 1, 225, 225) broadcasts against all 3 output channels of op1.
loss = torch.abs(op1 - ref_tensor1).mean()
print(loss)

# The weight gradient has the weight's shape: (filters, input channels, kh, kw).
print("gradient_shape:", model1.conv1.weight.shape)
print("Before backprop:", model1.conv1.weight.grad)  # None until backward() runs
loss.backward()
print("after backprop:", model1.conv1.weight.grad.shape)
print(model1.conv1.weight.grad)

# RESULT (1, 3, x, y): changes if the seed is not set, and the gradient is the same for all channels.
# Plot the gradient of filter 0 for each of its 3 input channels.
grad = model1.conv1.weight.grad.numpy()
for ch in range(3):
    plt.subplot(1, 3, ch + 1)
    plt.imshow(grad[0, ch])
plt.show()
```
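To check the per-pair formula against PyTorch's own autograd, here is a small sketch on random data with made-up sizes (not the images from the question). It swaps the batch and channel axes so that a single `torch.nn.functional.conv2d` call computes every $X_c \star dE/dO_k$ pair and sums over the batch. That swap is a choice of this sketch, not something taken from the linked answer.

```python
import torch
import torch.nn.functional as nnf

torch.manual_seed(0)
N, C, K, ks = 2, 3, 4, 5                        # batch, input channels, filters, kernel size
x = torch.randn(N, C, 20, 20)
conv = torch.nn.Conv2d(C, K, kernel_size=ks, bias=False)

out = conv(x)                                   # (N, K, 16, 16)
grad_out = torch.randn_like(out)                # stand-in for dE/dO
out.backward(grad_out)                          # autograd fills conv.weight.grad

# Manual dE/dF: input (C, N, 20, 20) correlated with "weights" (K, N, 16, 16)
# gives (C, K, ks, ks), summing over the batch; transpose back to (K, C, ks, ks).
manual = nnf.conv2d(
    x.transpose(0, 1).contiguous(), grad_out.transpose(0, 1).contiguous()
).transpose(0, 1)

print(manual.shape)  # torch.Size([4, 3, 5, 5])
print(torch.allclose(manual, conv.weight.grad, atol=1e-4, rtol=1e-4))
```

If the two agree, the formula matches what `Conv2d` computes. Any remaining equality across channels in the plots then comes from the input channels themselves, not from the backward pass.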