Dear all,

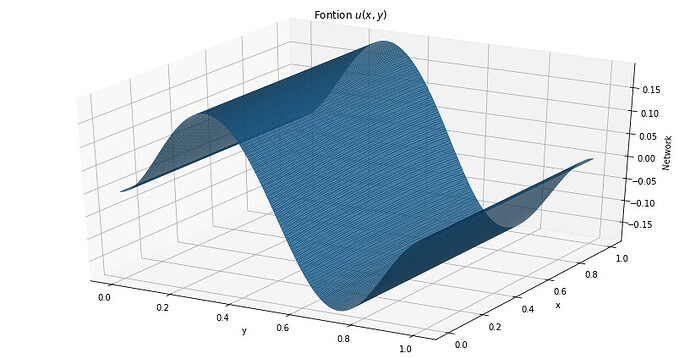

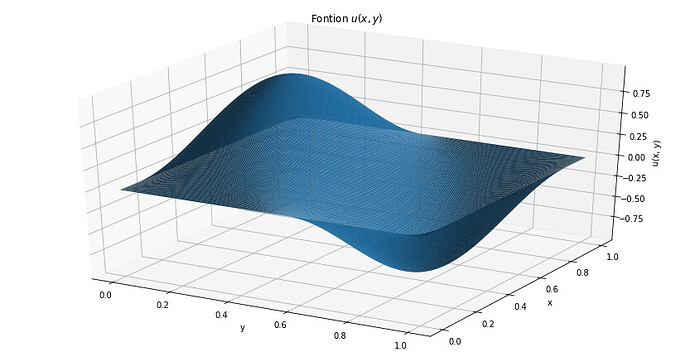

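I have the following code, which trains a neural network to approximate a function u(x, y). I am trying to obtain u by minimizing the loss (-Δu(x, y) - f(x, y))², where Δu = ∂²u/∂x² + ∂²u/∂y² is the Laplacian of u and f is a given right-hand side. In the code, f is chosen so that the exact solution is U(x, y) = sin(πx) sin(2πy), since -ΔU = 5π²U.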
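For reference, the per-sample Laplacian can be obtained with `torch.autograd.grad` by differentiating each first-derivative column separately and keeping only the matching diagonal entry. Differentiating the sum u_x + u_y in one call would instead also pick up the mixed term 2∂²u/∂x∂y. The sketch below checks this against the exact solution U; the helper name `laplacian` is hypothetical.

```python
import math
import torch

torch.manual_seed(0)

def laplacian(u_fn, xy):
    """Per-sample Laplacian u_xx + u_yy of a scalar field u_fn at points xy of shape (n, 2)."""
    xy = xy.requires_grad_(True)
    u = u_fn(xy)  # shape (n, 1)
    # First derivatives: grad[:, 0] = u_x, grad[:, 1] = u_y
    grad = torch.autograd.grad(u, xy, grad_outputs=torch.ones_like(u), create_graph=True)[0]
    lap = torch.zeros_like(u)
    for i in range(xy.shape[1]):
        # Differentiate the i-th first derivative and keep only its i-th component (u_ii)
        second = torch.autograd.grad(grad[:, i], xy,
                                     grad_outputs=torch.ones_like(grad[:, i]),
                                     create_graph=True)[0]
        lap = lap + second[:, i:i + 1]
    return lap

# Exact solution U(x, y) = sin(pi x) sin(2 pi y), whose Laplacian is -5 pi^2 U
def U(xy):
    return torch.sin(math.pi * xy[:, 0:1]) * torch.sin(2 * math.pi * xy[:, 1:2])

xy = torch.rand(100, 2, dtype=torch.float64)
lap = laplacian(U, xy)
print(torch.allclose(lap, -5 * math.pi**2 * U(xy)))  # True
```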

Everything runs without errors, but `backward()` does not seem to have any effect: the loss stays at exactly the same value in every epoch, so the network is not learning.
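A loss that never changes usually means it is no longer connected to the model parameters in the autograd graph. Building a new tensor from an intermediate result with `torch.tensor(...)` copies its value but drops its history, so `backward()` still runs (as long as the new tensor itself requires grad) while the parameters receive no gradient and the optimizer has nothing to apply. A small sketch with a hypothetical one-layer model standing in for `Net`:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(2, 1)  # hypothetical toy model standing in for Net
inputs = torch.rand(8, 2)

# Rebuilding the loss with torch.tensor(...) copies its value into a new leaf
# tensor with no autograd history, so backward() never reaches the model.
out = model(inputs).sum()
detached = torch.tensor([out.item()], requires_grad=True)
detached.sum().backward()
grad_after_detached = model.weight.grad
print(grad_after_detached)  # None

# Keeping every step as a torch operation on graph tensors lets gradients flow.
out = model(inputs).sum()
out.backward()
print(model.weight.grad is not None)  # True
```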

Can someone help?

Thanks in advance

```python
import torch
import torch.nn as nn
import numpy as np

learning_rate = 0.01
num_epochs = 15
N = 15

# Exact solution and right-hand side (f = -Laplacian of U)
U = lambda x: torch.sin(torch.pi * x[0]) * torch.sin(2 * torch.pi * x[1])
f = lambda x, u: (5 * torch.pi**2 * torch.sin(torch.pi * x[0]) * torch.sin(2 * torch.pi * x[1]))

# N x N interior grid points of the unit square
x = np.arange(0, 1.1, 1/(N+1))[1:N+1]
p = np.array([])
for i in range(N):
    p = np.append(p, x)
q = np.sort(p)
z = np.column_stack((p, q))

input = torch.tensor(z).to(dtype = torch.float32).requires_grad_(True)

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.fc = nn.Sequential(nn.Linear(2, 64), nn.Tanh(), nn.Linear(64, 1, bias = False))

    def forward(self, input):
        x = input[0]
        y = input[1]
        input = self.fc(input)
        return input * x * (1-x) * y * (1-y)

model = Net()
gradient = torch.optim.Adam(model.parameters(), lr = learning_rate)

def loss(x, U, f):
    nablaU = torch.tensor([0]).to(dtype = torch.float32).requires_grad_(True)
    output = model.forward(x)
    # First derivatives of the network output w.r.t. the inputs
    Uneuronal_d = torch.autograd.grad(output, x, grad_outputs = torch.ones_like(output), allow_unused=True, retain_graph = True, create_graph=True)
    nabla_x = torch.tensor([0])
    for grad in Uneuronal_d:
        # Second derivatives
        grads_inner = torch.autograd.grad(grad, x, grad_outputs = torch.ones_like(x), create_graph=True, retain_graph=True, allow_unused=True)[0]
        nabla_x = torch.tensor([torch.sum(nabla_x + grads_inner)])
        nablaU = torch.cat((nablaU , torch.tensor([nabla_x])), dim=0)
    return(torch.mean(nablaU[1:]))

for i in range(num_epochs):
    gradient.zero_grad()
    l = loss(input, U, f)
    l.backward()
    print(l)
    gradient.step()
```
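For comparison, a minimal sketch of the same setup with the loss kept entirely inside the autograd graph. It assumes the intended loss is the mean of (-Δu - f)² over the interior grid points (the `loss` function above never uses `f`). Two further changes: the input columns are sliced as `inp[:, 0:1]` and `inp[:, 1:2]`, because `input[0]` and `input[1]` in the code above select the first two *rows* (two grid points), not the x and y coordinates; and Δu is assembled one coordinate at a time. The names `pde_loss`, `points`, `losses` and `optimizer`, and the epoch count of 1000, are my own illustrative choices.

```python
import math
import numpy as np
import torch
import torch.nn as nn

torch.manual_seed(0)

learning_rate = 0.01
num_epochs = 1000  # illustrative; tune as needed
N = 15

# Right-hand side f = -Laplacian of U for U(x, y) = sin(pi x) sin(2 pi y)
def f(xy):
    return 5 * math.pi**2 * torch.sin(math.pi * xy[:, 0:1]) * torch.sin(2 * math.pi * xy[:, 1:2])

# Same N x N interior grid as above (x varies fastest), shape (N*N, 2)
x = np.linspace(0, 1, N + 2)[1:-1]
X, Y = np.meshgrid(x, x)
points = torch.tensor(np.column_stack((X.ravel(), Y.ravel())),
                      dtype=torch.float32, requires_grad=True)

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Sequential(nn.Linear(2, 64), nn.Tanh(), nn.Linear(64, 1, bias=False))

    def forward(self, inp):
        x = inp[:, 0:1]  # x coordinates, shape (n, 1)
        y = inp[:, 1:2]  # y coordinates, shape (n, 1)
        # The factor x(1-x)y(1-y) makes u vanish on the boundary of the unit square
        return self.fc(inp) * x * (1 - x) * y * (1 - y)

def pde_loss(model, xy):
    u = model(xy)
    grad = torch.autograd.grad(u, xy, grad_outputs=torch.ones_like(u), create_graph=True)[0]
    lap = torch.zeros_like(u)
    for i in range(2):
        second = torch.autograd.grad(grad[:, i], xy,
                                     grad_outputs=torch.ones_like(grad[:, i]),
                                     create_graph=True)[0]
        lap = lap + second[:, i:i + 1]  # adds u_xx, then u_yy
    residual = -lap - f(xy)
    return torch.mean(residual**2)

model = Net()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)
losses = []

for epoch in range(num_epochs):
    optimizer.zero_grad()
    loss = pde_loss(model, points)
    loss.backward()  # gradients now reach the network weights
    optimizer.step()
    losses.append(loss.item())
    if epoch % 200 == 0:
        print(epoch, loss.item())
```

Every quantity in `pde_loss` is produced by torch operations on graph tensors, so nothing is re-wrapped with `torch.tensor(...)` between the network output and the returned loss.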