Hi,

I am running a PyTorch module that involves multiple `Conv1d` layers, `ConvTranspose1d` layers, and a `ModuleList` of linear layers. Each batch contains on the order of 10 subjects (100 samples in total).
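For anyone trying to reproduce this, a model of the kind described might look roughly like the following minimal sketch. The class name `ConvModel`, the channel counts, kernel sizes, sequence length and number of linear heads are all hypothetical; only the layer types come from the description above.

```python
import torch
import torch.nn as nn


class ConvModel(nn.Module):
    """Hypothetical model: Conv1d encoder, ConvTranspose1d decoder, ModuleList of Linear heads."""

    def __init__(self, in_channels=4, hidden=16, seq_len=32, n_heads=3):
        super().__init__()
        # kernel_size=3 with padding=1 keeps the sequence length unchanged.
        self.encoder = nn.Sequential(
            nn.Conv1d(in_channels, hidden, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.Conv1d(hidden, hidden, kernel_size=3, padding=1),
            nn.ReLU(),
        )
        # With stride=1, kernel_size=3, padding=1 the output length equals the input length.
        self.decoder = nn.ConvTranspose1d(hidden, in_channels, kernel_size=3, padding=1)
        # One small linear head per output, held in a ModuleList so its parameters are registered.
        self.heads = nn.ModuleList(
            [nn.Linear(in_channels * seq_len, 1) for _ in range(n_heads)]
        )

    def forward(self, x):
        h = self.decoder(self.encoder(x))  # (batch, in_channels, seq_len)
        flat = h.flatten(start_dim=1)      # (batch, in_channels * seq_len)
        return torch.cat([head(flat) for head in self.heads], dim=1)  # (batch, n_heads)
```

With a batch of 10 subjects, `ConvModel()(torch.randn(10, 4, 32))` returns a tensor of shape `(10, 3)`, one column per linear head.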

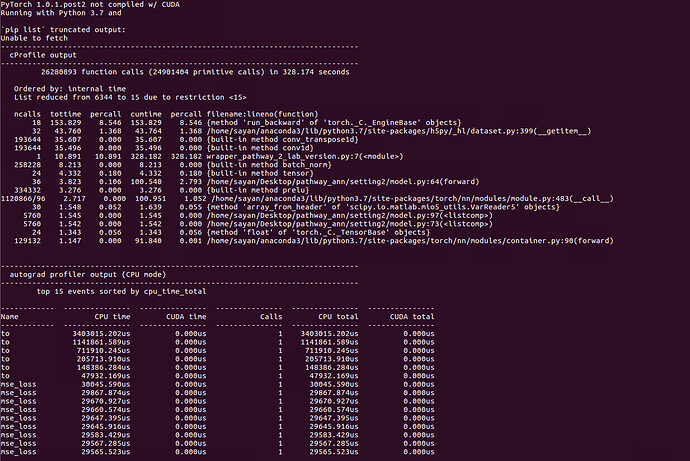

Each epoch takes an extremely long time. When I profiled the code with `torch.autograd.profiler`, I got the following result.
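A minimal sketch of collecting such a profile with `torch.autograd.profiler`, assuming CPU execution. The small `nn.Sequential` model and the input shape are hypothetical stand-ins for the real module, and the label `"forward_backward"` is an arbitrary name. `key_averages()` groups the recorded events by operator name, and sorting by total CPU time lists the most expensive operators first.

```python
import torch
import torch.nn as nn
from torch.autograd import profiler

# Hypothetical stand-in for the real model.
model = nn.Sequential(
    nn.Conv1d(4, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.ConvTranspose1d(16, 4, kernel_size=3, padding=1),
)
x = torch.randn(10, 4, 32)  # batch of 10 subjects, as in the post

with profiler.profile(record_shapes=True) as prof:
    # record_function adds a named range so the whole step is easy to find in the output.
    with profiler.record_function("forward_backward"):
        loss = model(x).pow(2).mean()
        loss.backward()

# Aggregate events by operator name and show the ten most expensive ones.
table = prof.key_averages().table(sort_by="cpu_time_total", row_limit=10)
print(table)
```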

I'm not sure what's going wrong. I also don't understand the operation named `to` in the autograd profiler output. Any help is really appreciated.
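The `to` entry most likely refers to `aten::to`, the operator behind `Tensor.to()`, which changes a tensor's dtype and/or moves it to another device (for example from CPU to GPU). A minimal sketch, with arbitrary tensor sizes and loop count, showing that explicit conversions inside a loop are recorded under that name:

```python
import torch
from torch.autograd import profiler

x = torch.randn(10, 4, 32)

with profiler.profile() as prof:
    for _ in range(5):
        # Each call converts float32 -> float64, allocating and copying a new tensor.
        y = x.to(torch.float64)

# Operator names as they appear in the aggregated profiler output.
names = [evt.key for evt in prof.key_averages()]
print(prof.key_averages().table(sort_by="cpu_time_total", row_limit=5))
```

If `to` accounts for a large share of the time, it may be worth checking whether data or parameters are being converted or moved on every iteration rather than once before training.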

Thank you.