Hi,

I’ve been playing around with bits and pieces of nn code, trying to understand how to build neural nets from scratch. (Gone through the fast ai course; now really trying to understand torch and building from ground up)

There’s a really great channel on Youtube, that’s making a neural net library from scratch, and I’m trying to port over the example set up into torch, but I’m stumbling a bit.

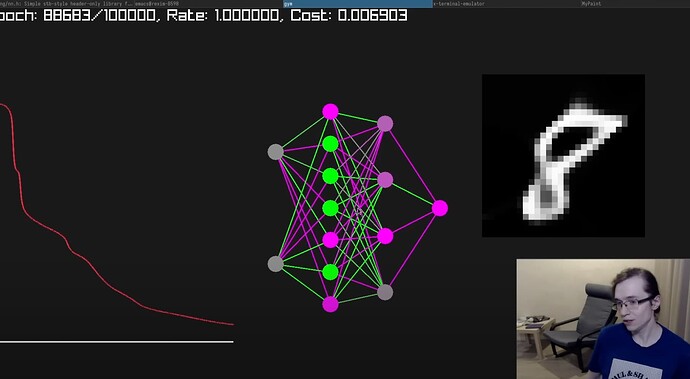

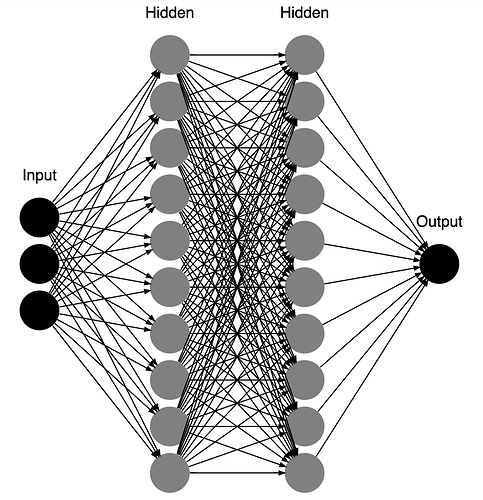

The idea is to take 2 of the mnist digits and a using a set of linear layers encode the images using sigmoid activations. End result would be so you can interpolate between the two images.

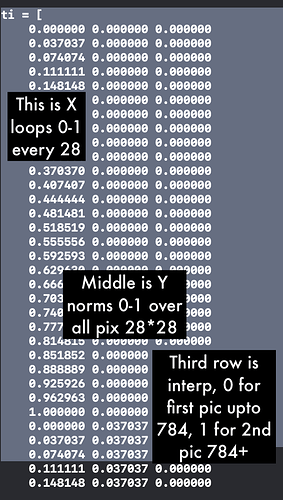

In the example he has on the yt channel, he uses three neurons in the first layer, 1st for X, 2nd for Y, and 3rd Neuron is the interpolator. The images are given markers 0 and 1, and the third neuron during inference controls the interpolation, so 0 = image 1, and 1 = image 2; 0.5 being a midway between shapes.

This is where I’ve got stuck trying to figure out, as using Torch we’re not encoding x and y into separate neurons. And i’m not entirely sure how I’d add this interpolation neuron

Has anyone got any idea or points in the right direction? And it seems like it works, but I don’t really get it, as during inference I just pass in the same data it was trained with. Or is that what an autoencoder is, same data in, same data out?

If anyone has literally any insights I’d be really greatful, as even this simple set up is kinda confusing to me.

This is my code so far:

from torch import nn

from torch.optim import Adam

from torchvision import datasets

from torchvision.transforms import ToTensor

import torch.nn.functional as F

import torch

from PIL import Image

from torchvision.transforms import ToPILImage

train = datasets.MNIST(root='data', download=True, train=True, transform=ToTensor())

im1 = train[0][0]

im2 = train[1][0]

img1 = ToPILImage()(im1)

img2 = ToPILImage()(im2)

img1.show()

img2.show()

totens = ToTensor()

img1tensor = totens(img1)

class NeuralNetwork(nn.Module):

def __init__(self):

super().__init__()

self.flatten = nn.Flatten()

self.linear_relu_stack = nn.Sequential(

nn.Linear(28*28, 10),

nn.Sigmoid(),

# nn.ReLU(),

nn.Linear(10, 20),

nn.Sigmoid(),

# nn.ReLU(),

nn.Linear(20, 20),

nn.Sigmoid(),

# nn.ReLU(),

nn.Linear(20, 28*28),

)

def forward(self, x):

x = self.flatten(x)

logits = self.linear_relu_stack(x)

return logits

# random_data = torch.rand((1, 1, 28, 28))

data = img1tensor

model = NeuralNetwork()

result = model(data) # run data through model to see if it works just passing through

print(result.shape)

result = result.reshape(28,28)

img = ToPILImage()(result)

img.show()

model = NeuralNetwork().to('cpu') # change to cuda if you want

print(model)

optimizer = Adam(model.parameters(), lr = 1e-1)

loss_func = nn.MSELoss()

loss = 0

for epoch in range(100):

optimizer.zero_grad() # reset the gradients back to zero

# compute reconstructions

outputs = model(data)

# print(outputs.shape, data.shape)

# compute training reconstruction loss

train_loss = loss_func(outputs, data.reshape(1,784))

# compute accumulated gradients

train_loss.backward()

# perform parameter update based on current gradients

optimizer.step()

# add the mini-batch training loss to epoch loss

loss += train_loss.item()

print(train_loss)

result = model(data) # run data through model to see if it works just passing through

result = result.reshape(28,28)

img = ToPILImage()(result)

img.show()

And this is the video I’m trying to port from : tsoding daily - nn from scratch