I realize there are similar questions particularly this one: Could someone explain batch_first=True in LSTM

However my question pertains to a specific tutorial I found online: Building RNNs is Fun with PyTorch and Google Colab | by elvis | DAIR.AI | Medium

I do not understand why they are not using batch_first=true.

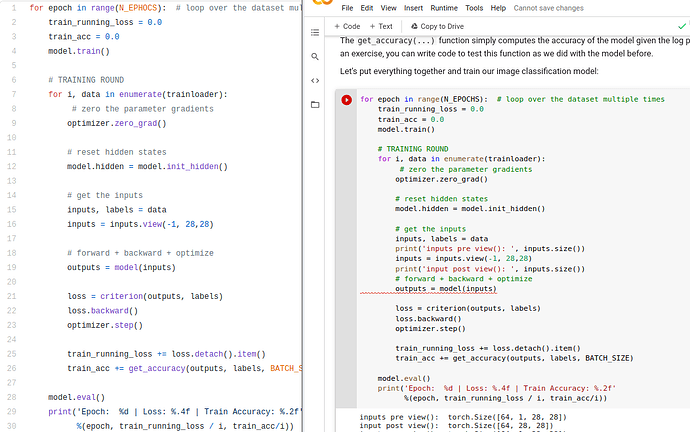

I realized that the data being fed to the model is of the form [64, 28, 28].

Because they are working with MNIST data and they specified a batch size of 64

-64 is batch size

-28 (number of rows) is seq_len

-28 (number of cols) is features

Could someone please explain why they are not using batch_first=True?

Thank you very much for your help.

edit: removed a part of question for simplicity