Hi,

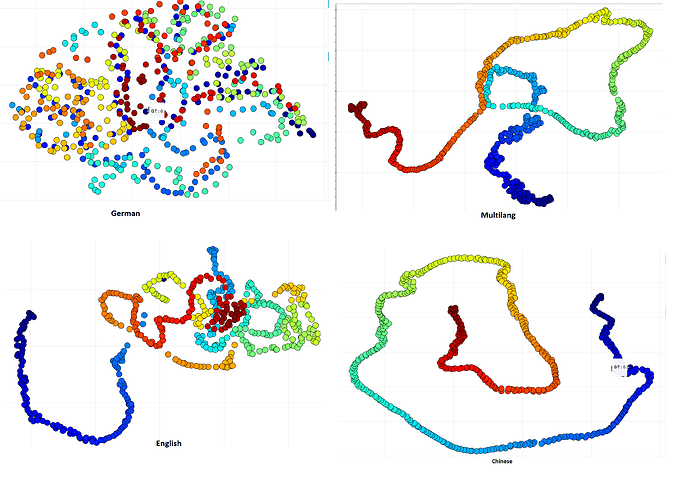

I projected the BERT positional embeddings into 2D (with UMAP) for several BERT models trained on different languages (I use “pytorch_transformers”).
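A minimal sketch of this kind of extraction and projection follows. It assumes that `pytorch_transformers`' `BertModel` exposes the learned tables as `model.embeddings.position_embeddings` and `model.embeddings.word_embeddings`, and that UMAP comes from the `umap-learn` package. The helper names are hypothetical. `adjacent_cosine` is an extra illustrative check, not part of the plot: for well-structured positional embeddings, neighbouring positions usually have fairly similar vectors.

```python
import numpy as np


def embedding_matrix(model_name, table="position_embeddings"):
    """Return a learned embedding table of a BERT model as a NumPy array.

    `table` is "position_embeddings" (max_positions x hidden) or
    "word_embeddings" (vocab_size x hidden).
    """
    # Imported lazily so the NumPy helper below works without the library installed.
    from pytorch_transformers import BertModel

    model = BertModel.from_pretrained(model_name)
    # BertEmbeddings keeps each table as an nn.Embedding; .weight holds the vectors.
    return getattr(model.embeddings, table).weight.detach().cpu().numpy()


def project_2d(weights, seed=42):
    """Map each row (one position or token) to 2D with UMAP (needs `umap-learn`)."""
    import umap

    return umap.UMAP(n_components=2, random_state=seed).fit_transform(weights)


def adjacent_cosine(weights):
    """Cosine similarity between the vectors of rows i and i+1, for every i."""
    normed = weights / np.linalg.norm(weights, axis=1, keepdims=True)
    return np.sum(normed[:-1] * normed[1:], axis=1)
```

For example, `project_2d(embedding_matrix("bert-base-german-cased"))` would give one 2D point per position (512 for the base models), which can be scatter-plotted and coloured by position index. The model name here is only an example and should be replaced with the checkpoints actually being compared.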

The projected positional embeddings of the German model are clearly far less structured than those of the other language models. Why is that? Is the German model simply not as well trained?

I also checked the word embeddings, and those look fine.