```python
import torch.nn as nn
from transformers import BertModel
from data_generator import *
import torch
import math
import torch.nn.functional as F

# Fix the random seeds and make cuDNN deterministic for reproducible runs
SEED = 0
torch.manual_seed(SEED)
torch.cuda.manual_seed_all(SEED)
torch.backends.cudnn.deterministic = True
torch.backends.cudnn.benchmark = False


class Residual(nn.Module):

    def __init__(self, d, fn):
        super(Residual, self).__init__()
        self.fn = fn
        # d -> d projection with the activation fn in between
        self.projection = nn.Sequential(nn.Linear(d, d), fn, nn.Linear(d, d))

    def forward(self, x):
        # Add the projection to the input (skip connection), then apply fn to the sum
        return self.fn(x + self.projection(x))
```
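A quick sanity check for `Residual`: both linear layers map `d` to `d`, so the output must have the same shape as the input, and with `nn.Tanh()` as `fn` every output value lies in [-1, 1]. This is a minimal sketch assuming only PyTorch. The class is repeated so the snippet runs on its own, and the batch of 4 random vectors is illustrative (`d = 300` matches the value `Net` passes in).

```python
import torch
import torch.nn as nn


class Residual(nn.Module):
    # Same structure as the block above, repeated so this snippet is self-contained
    def __init__(self, d, fn):
        super().__init__()
        self.fn = fn
        self.projection = nn.Sequential(nn.Linear(d, d), fn, nn.Linear(d, d))

    def forward(self, x):
        return self.fn(x + self.projection(x))


torch.manual_seed(0)
block = Residual(300, nn.Tanh())
x = torch.randn(4, 300)             # illustrative batch of 4 feature vectors
out = block(x)
print(out.shape)                    # torch.Size([4, 300])
print(bool(out.abs().max() <= 1))   # True: tanh bounds every output value
```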

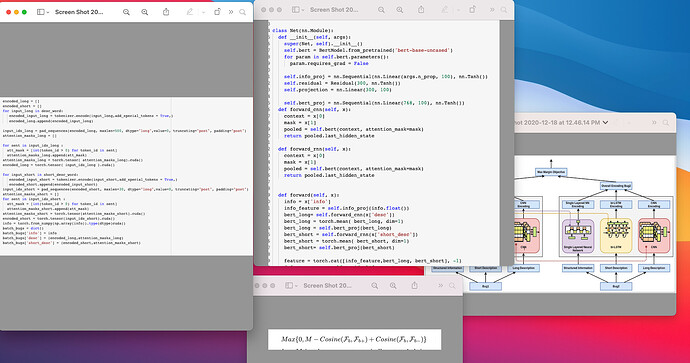

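```python
class Net(nn.Module):

    def __init__(self, args):
        super(Net, self).__init__()
        # Pretrained BERT encoder, frozen: only the layers defined below are trained
        self.bert = BertModel.from_pretrained('bert-base-uncased')
        for param in self.bert.parameters():
            param.requires_grad = False
        # Categorical bug metadata (length args.n_prop) -> 100-d feature
        self.info_proj = nn.Sequential(nn.Linear(args.n_prop, 100), nn.Tanh())
        # Only used by the commented-out line in forward()
        self.residual = Residual(300, nn.Tanh())
        # Three concatenated 100-d features (300) -> 100-d output
        self.projection = nn.Linear(300, 100)
        # BERT hidden size 768 -> 100-d, shared by the long and short descriptions
        self.bert_proj = nn.Sequential(nn.Linear(768, 100), nn.Tanh())

    def forward_cnn(self, x):
        # x = (input_ids, attention_mask); returns BERT token embeddings (batch, seq_len, 768)
        context = x[0]
        mask = x[1]
        pooled = self.bert(context, attention_mask=mask)
        return pooled.last_hidden_state

    def forward_rnn(self, x):
        # Same computation as forward_cnn (both only run BERT)
        context = x[0]
        mask = x[1]
        pooled = self.bert(context, attention_mask=mask)
        return pooled.last_hidden_state

    def forward(self, x):
        info = x['info']
        info_feature = self.info_proj(info.float())

        # Average over the token dimension (padding positions are included), then project
        bert_long = self.forward_cnn(x['desc'])
        bert_long = torch.mean(bert_long, dim=1)
        bert_long = self.bert_proj(bert_long)

        bert_short = self.forward_rnn(x['short_desc'])
        bert_short = torch.mean(bert_short, dim=1)
        bert_short = self.bert_proj(bert_short)

        feature = torch.cat([info_feature, bert_long, bert_short], -1)  # (batch, 300)
        # feature_res = self.residual(feature)
        return self.projection(feature)
```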
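In `forward`, `torch.mean(..., dim=1)` averages over every position, including the padding tokens added to reach 500 (or 30) IDs. If padding should be ignored, one common alternative is masked mean pooling, which weights each token by its attention mask. This is a sketch of that alternative, not what the code above does; `masked_mean` is a hypothetical helper, and the small tensors stand in for `last_hidden_state` and the attention mask.

```python
import torch


def masked_mean(hidden, mask):
    # hidden: (batch, seq_len, dim); mask: (batch, seq_len), 1 = real token, 0 = padding
    mask = mask.unsqueeze(-1).to(hidden.dtype)   # (batch, seq_len, 1)
    summed = (hidden * mask).sum(dim=1)          # sum over real tokens only
    counts = mask.sum(dim=1).clamp(min=1)        # number of real tokens, never 0
    return summed / counts                       # (batch, dim)


# One sequence of three tokens; the last position is padding
hidden = torch.tensor([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])
mask = torch.tensor([[1, 1, 0]])
print(masked_mean(hidden, mask))   # tensor([[2., 3.]])
print(torch.mean(hidden, dim=1))   # plain mean is pulled towards the padding row
```

With an all-ones mask the two pooling methods agree, so the difference only shows up for sequences shorter than `maxlen`.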

The next part loads each bug report and generates the input IDs and attention masks:

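```python
import os
import pickle
import numpy as np

# Fragment of the batch-loading function: batch_bugs (list of bug IDs), the lists desc_word,
# short_desc_word and info, and info_dict / to_one_hot are defined elsewhere (not shown).
for bug_id in batch_bugs:
    path = os.path.join('/content/drive/My Drive/DuplicateBugFinder/openOffice/bugs',
                        '{}.pkl'.format(bug_id))
    with open(path, 'rb') as f:
        bug = pickle.load(f)
    desc_word.append(bug['description_long'][0:500])
    short_desc_word.append(bug['description_short'])
    # One one-hot vector per categorical field, concatenated into one metadata vector
    info_ = np.concatenate((
        to_one_hot(bug['bug_severity'], info_dict['bug_severity']),
        to_one_hot(bug['bug_status'], info_dict['bug_status']),
        to_one_hot(bug['component'], info_dict['component']),
        to_one_hot(bug['priority'], info_dict['priority']),
        to_one_hot(bug['product'], info_dict['product']),
        to_one_hot(bug['version'], info_dict['version'])))
    info.append(info_)
```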
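`to_one_hot` and `info_dict` come from `data_generator`, which is not shown. A plausible minimal version, assuming `info_dict[field]` is the list of all values that field can take, returns a vector with a single 1 at the value's index. Concatenating one such vector per field gives the `info_` row, whose total length must equal `args.n_prop` for `info_proj` to accept it. The helper, the two field vocabularies and the sample bug below are all hypothetical.

```python
import numpy as np


def to_one_hot(value, vocabulary):
    # Hypothetical stand-in for data_generator.to_one_hot:
    # len(vocabulary) zeros with a 1 at the position of `value`
    vec = np.zeros(len(vocabulary), dtype=np.float32)
    vec[vocabulary.index(value)] = 1.0
    return vec


# Hypothetical field vocabularies (the real ones come from info_dict)
info_dict = {
    'bug_severity': ['trivial', 'minor', 'normal', 'major', 'critical'],
    'priority': ['P1', 'P2', 'P3', 'P4', 'P5'],
}
bug = {'bug_severity': 'major', 'priority': 'P2'}

info_ = np.concatenate((
    to_one_hot(bug['bug_severity'], info_dict['bug_severity']),
    to_one_hot(bug['priority'], info_dict['priority']),
))
print(info_)        # [0. 0. 0. 1. 0. 0. 1. 0. 0. 0.]
print(info_.shape)  # (10,)
```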

```python
# tokenizer and pad_sequences are defined elsewhere (not shown)
encoded_long = []
encoded_short = []

# Long descriptions: encode to token IDs, then pad/truncate at the end to 500 IDs
for input_long in desc_word:
    encoded_input_long = tokenizer.encode(input_long, add_special_tokens=True)
    encoded_long.append(encoded_input_long)
input_ids_long = pad_sequences(encoded_long, maxlen=500, dtype="long", value=0,
                               truncating="post", padding="post")

# Attention mask: 1 for real tokens, 0 for padding (padding ID is 0)
attention_masks_long = []
for sent in input_ids_long:
    att_mask = [int(token_id > 0) for token_id in sent]
    attention_masks_long.append(att_mask)
attention_masks_long = torch.tensor(attention_masks_long).cuda()
encoded_long = torch.tensor(input_ids_long).cuda()

# Short descriptions: same procedure with maxlen=30
for input_short in short_desc_word:
    encoded_input_short = tokenizer.encode(input_short, add_special_tokens=True)
    encoded_short.append(encoded_input_short)
input_ids_short = pad_sequences(encoded_short, maxlen=30, dtype="long", value=0,
                                truncating="post", padding="post")

attention_masks_short = []
for sent in input_ids_short:
    att_mask = [int(token_id > 0) for token_id in sent]
    attention_masks_short.append(att_mask)
attention_masks_short = torch.tensor(attention_masks_short).cuda()
encoded_short = torch.tensor(input_ids_short).cuda()
```
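What `pad_sequences(..., value=0, truncating="post", padding="post")` and the `token_id > 0` mask loop produce can be reproduced with NumPy alone, which may help when checking shapes without Keras installed. This sketch assumes padding ID 0 (the `[PAD]` token in `bert-base-uncased`); `pad_post` is a hypothetical helper and the token IDs are illustrative. One side effect worth knowing: post-truncation drops the final `[SEP]` ID (102 in `bert-base-uncased`) from any sequence longer than `maxlen`, as in the second row.

```python
import numpy as np


def pad_post(sequences, maxlen, value=0):
    # Hypothetical helper mirroring padding="post", truncating="post":
    # keep the first `maxlen` IDs of each sequence, then fill the tail with `value`
    out = np.full((len(sequences), maxlen), value, dtype=np.int64)
    for i, seq in enumerate(sequences):
        trunc = seq[:maxlen]
        out[i, :len(trunc)] = trunc
    return out


encoded = [[101, 7592, 102], [101, 2023, 2003, 1037, 2146, 102]]  # illustrative IDs
input_ids = pad_post(encoded, maxlen=5)
attention_mask = (input_ids > 0).astype(np.int64)  # 1 = real token, 0 = padding
print(input_ids.tolist())       # [[101, 7592, 102, 0, 0], [101, 2023, 2003, 1037, 2146]]
print(attention_mask.tolist())  # [[1, 1, 1, 0, 0], [1, 1, 1, 1, 1]]
```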

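```python
# Stack the metadata vectors into one tensor; dtype is defined elsewhere (not shown)
info = torch.from_numpy(np.array(info)).type(dtype).cuda()

# Keys match what Net.forward reads
batch_bugs = dict()
batch_bugs['info'] = info
batch_bugs['desc'] = (encoded_long, attention_masks_long)
batch_bugs['short_desc'] = (encoded_short, attention_masks_short)
return batch_bugs
```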
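The dictionary returned here is what `Net.forward` consumes: `'info'` of shape `(batch, n_prop)` and two `(input_ids, attention_mask)` pairs. To check the shapes end to end without downloading BERT, one option is to swap the encoder for a small stand-in that, like `last_hidden_state`, returns a `(batch, seq_len, 768)` tensor. Everything below is a hypothetical test harness: `StubEncoder`, `TinyNet`, `n_prop = 10` and the random batch are assumptions, and the tensors stay on the CPU instead of calling `.cuda()`.

```python
import torch
import torch.nn as nn


class StubEncoder(nn.Module):
    # Hypothetical stand-in for BertModel: embeds IDs into 768-d vectors
    def __init__(self, vocab_size=30522, hidden=768):
        super().__init__()
        self.emb = nn.Embedding(vocab_size, hidden)

    def forward(self, ids, attention_mask=None):
        return self.emb(ids)  # (batch, seq_len, 768), like last_hidden_state


class TinyNet(nn.Module):
    # Same head as Net: three 100-d features -> concat (300) -> 100
    def __init__(self, n_prop):
        super().__init__()
        self.encoder = StubEncoder()
        self.info_proj = nn.Sequential(nn.Linear(n_prop, 100), nn.Tanh())
        self.bert_proj = nn.Sequential(nn.Linear(768, 100), nn.Tanh())
        self.projection = nn.Linear(300, 100)

    def encode(self, pair):
        ids, mask = pair
        return self.bert_proj(self.encoder(ids, attention_mask=mask).mean(dim=1))

    def forward(self, x):
        info_feature = self.info_proj(x['info'].float())
        feature = torch.cat([info_feature, self.encode(x['desc']),
                             self.encode(x['short_desc'])], -1)
        return self.projection(feature)


batch_size, n_prop = 4, 10
batch = {
    'info': torch.zeros(batch_size, n_prop),
    'desc': (torch.randint(0, 30522, (batch_size, 500)),
             torch.ones(batch_size, 500, dtype=torch.long)),
    'short_desc': (torch.randint(0, 30522, (batch_size, 30)),
                   torch.ones(batch_size, 30, dtype=torch.long)),
}
out = TinyNet(n_prop)(batch)
print(out.shape)  # torch.Size([4, 100])
```

Replacing BERT with `nn.Embedding` keeps the check fast and offline; the feature values differ from the real model, but the tensor shapes at each step match those in `Net.forward`.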