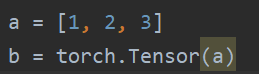

Say a = [1, 2, 3]. When I write b = torch.Tensor(a), PyCharm highlights it with a yellow background, as in the screenshot above.

Is there an elegant way to convert a list to a tensor, or is this my IDE’s fault?

Convert the list to a tensor like this:

a = [1, 2, 3]

b = torch.FloatTensor(a)

Your method should also work, but you should cast your data type to float so you can use it in a neural net.

Hi,

First of all, PyCharm and most other IDEs cannot fully analyze libraries like PyTorch, which has a C++ backend and a Python frontend, so it is normal to get warnings or false errors even though your code works fine.

But about your question:

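torch.Tensor() converts your data to the default floating-point type, whatever the type of the input. In fact, torch.Tensor is an alias for the default tensor type, which is torch.FloatTensor unless you change it, so both do the same thing.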

But I think a better way is to use torch.tensor() (note the lowercase ‘t’). It converts your data to a tensor but retains the data type, which is crucial for some methods. As you may know, PyTorch tensors and NumPy arrays convert to each other easily, so if your array is int, your tensor should be int too unless you explicitly change the type.

On top of all this, torch.tensor is the convention because it lets you set the following arguments: device, dtype, requires_grad, etc.

Note: torch.tensor() always allocates new memory and copies the data. If you want to avoid the copy, use torch.as_tensor(numpy_ndarray).
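To make the differences above concrete, here is a minimal sketch. It assumes the default dtype has not been changed from float32; the variable names are only for illustration.

```python
import numpy as np
import torch

a = [1, 2, 3]

# torch.Tensor (capital T) builds a tensor of the default dtype (float32 unless changed)
b_legacy = torch.Tensor(a)

# torch.tensor (lowercase t) infers the dtype from the data: Python ints become int64
b_int = torch.tensor(a)

# dtype (like device and requires_grad) can be set explicitly when floats are needed
b_float = torch.tensor(a, dtype=torch.float32)

print(b_legacy.dtype, b_int.dtype, b_float.dtype)

# torch.tensor copies the data; torch.as_tensor reuses the NumPy buffer when it can
arr = np.array([1, 2, 3])
copied = torch.tensor(arr)
shared = torch.as_tensor(arr)
arr[0] = 100  # modify the NumPy array after conversion
print(copied[0].item(), shared[0].item())  # only the shared tensor sees the change
```

Because `shared` and `arr` use the same memory, writing to one changes the other, which is the price of avoiding the copy.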

Bests

Nik

1000 thx! Your reply really helped me a lot!

You are welcome mate!

About @zimmer550’s answer that you need to convert to float to use your tensors in a NN: that is a rule of thumb. In some cases, such as some methods in the functional package, you need the Long data type instead. So the best approach is to retain the data type as it is and change it explicitly when you need to, which lets you debug much faster when a data type inconsistency exists.
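As one illustration of a functional method that wants Long rather than float, here is a small sketch using torch.nn.functional.cross_entropy with class-index targets. The shapes and label values are made up for the example.

```python
import torch
import torch.nn.functional as F

logits = torch.randn(4, 3)            # 4 samples, 3 classes (made-up sizes)
labels = torch.tensor([0, 2, 1, 2])   # dtype is inferred as int64 (Long)
print(labels.dtype)

# class-index targets are expected to be Long, so this works directly
loss = F.cross_entropy(logits, labels)

# labels built with torch.Tensor are float32 and need an explicit cast first
float_labels = torch.Tensor([0, 2, 1, 2])
loss_after_cast = F.cross_entropy(logits, float_labels.long())

print(loss.item(), loss_after_cast.item())
```

Keeping the original dtype and casting only where needed makes it obvious at which line the type changes.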

In PyCharm, Ctrl+Q (I use my config from 2017, so it may have changed) shows the documentation within your editor, and I always recommend reading it fully. There are many notes that can save you a lot of memory or runtime computation just by using an argument or calling a function.

I am doing something very similar but I have a (nested) list of tensors. The simplest example I have is the following:

import torch

# trying to convert a list of tensors to a torch.tensor

x = torch.randn(3, 1)

xs = [x, x]

# xs = torch.tensor(xs)

xs = torch.as_tensor(xs)

but I get the following error:

Traceback (most recent call last):
  File "/Users/brando/anaconda3/envs/automl-meta-learning/lib/python3.8/site-packages/IPython/core/interactiveshell.py", line 3343, in run_code
    exec(code_obj, self.user_global_ns, self.user_ns)
  File "<ipython-input-30-f846b7bfae21>", line 4, in <module>
    xs = torch.as_tensor(xs)
ValueError: only one element tensors can be converted to Python scalars

Any ideas what is going on? Btw, both torch.tensor and torch.as_tensor give the same error.

I guess the following works but I am unsure what is wrong with this solution:

# %%

import torch

# trying to convert a list of tensors to a torch.tensor

x = torch.randn(3)

xs = [x.numpy(), x.numpy()]

# xs = torch.tensor(xs)

xs = torch.as_tensor(xs)

print(xs)

print(xs.size())

# %%

import torch

# trying to convert a list of tensors to a torch.tensor

x = torch.randn(3)

xs = [x.numpy(), x.numpy(), x.numpy()]

xs = [xs, xs]

# xs = torch.tensor(xs)

xs = torch.as_tensor(xs)

print(xs)

print(xs.size())

output:

tensor([[[0.3423, 1.6793, 0.0863],
         [0.3423, 1.6793, 0.0863],
         [0.3423, 1.6793, 0.0863]],

        [[0.3423, 1.6793, 0.0863],
         [0.3423, 1.6793, 0.0863],
         [0.3423, 1.6793, 0.0863]]])
torch.Size([2, 3, 3])

Hi,

I think the PyTorch 1.7.0 documentation pages for torch.tensor and torch.as_tensor explain the difference clearly, but in summary, torch.tensor always copies the data while torch.as_tensor tries to avoid that! Neither of them accepts a sequence of tensors.

The more intuitive way is stacking in a given dimension which you can find here: How to turn a list of tensor to tensor? - PyTorch Forums

The problem with your approach is that when you convert your tensors to numpy, you lose the grads and break the computational graph, whereas stacking preserves it.
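A minimal sketch of the difference, assuming a small tensor that requires gradients (the shapes and the factor of 2 are arbitrary):

```python
import numpy as np
import torch

x = torch.randn(3, 1, requires_grad=True)
xs = [x, x * 2]

# stacking adds a new leading dimension and keeps the computational graph
stacked = torch.stack(xs)
print(stacked.size(), stacked.requires_grad)

# gradients flow back to x through both entries of the list: 1 + 2 = 3 per element
stacked.sum().backward()
print(x.grad)

# going through numpy needs detach() first, and the result is cut off from the graph
via_numpy = torch.as_tensor(np.stack([t.detach().numpy() for t in xs]))
print(via_numpy.size(), via_numpy.requires_grad)
```

Both routes give the same values and shape, but only the stacked tensor can be used in further computations that need to backpropagate to x.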

Actually, you have posted an answer to a similar issue too! How to turn a list of tensor to tensor? - #10 by Brando_Miranda

hahaha, yeah, I see I didn’t form a very solid memory/understanding when I wrote that last year (or has it been too long since?). Perhaps I can finally sort this out in my head, though in my defence, there do seem to be a lot of discussions surrounding this topic (which doesn’t make it easier to digest):

- How to convert array to tensor? - #25 by mehran2020

- How to turn a list of tensor to tensor? - #9 by Nithin_Vasisth

- How to turn a list of tensor to tensor? - #10 by Brando_Miranda

- Converting list to tensor - #8 by Brando_Miranda

- python - What's the difference between torch.stack() and torch.cat() functions? - Stack Overflow

- How to make really empty tensor? - #3 by 1414b35e42c77e0a57dd

It seems that the most idiomatic PyTorch solution comes from knowing either torch.cat or torch.stack. In my use case I generate tensors and conceptually need to nest them in lists and eventually convert that to a final tensor (e.g. of size [d1, d2, d3]). I think the easiest solution to my problem is to append things to a list, give it to torch.stack to form a new tensor, append that to a new list, and then convert that to a tensor by using torch.stack again, recursively.

For a non-recursive example, I think this works… I will update with a better example in a bit:

# %%

import torch

# stack vs cat

# cat "extends" a list in the given dimension e.g. adds more rows or columns

x = torch.randn(2, 3)

print(f'{x.size()}')

# add more rows (thus increasing the dimensionality of the column space to 2 -> 6)

xnew_from_cat = torch.cat((x, x, x), 0)

print(f'{xnew_from_cat.size()}')

# add more columns (thus increasing the dimensionality of the row space to 3 -> 9)

xnew_from_cat = torch.cat((x, x, x), 1)

print(f'{xnew_from_cat.size()}')

print()

# stack serves the same role as append in lists, i.e. it doesn't change the original

# vector space but instead adds a new index to the new tensor, so you retain the ability

# to get the original tensor you added to the list by indexing in the new dimension

xnew_from_stack = torch.stack((x, x, x, x), 0)

print(f'{xnew_from_stack.size()}')

xnew_from_stack = torch.stack((x, x, x, x), 1)

print(f'{xnew_from_stack.size()}')

xnew_from_stack = torch.stack((x, x, x, x), 2)

print(f'{xnew_from_stack.size()}')

# default appends at the front

xnew_from_stack = torch.stack((x, x, x, x))

print(f'{xnew_from_stack.size()}')

print('I like to think of xnew_from_stack as a \"tensor list\" that you can pop from the front')

print()

lst = []

print(f'{x.size()}')

for i in range(10):
    # say we do something with x at iteration i (out of place, so each list entry is a distinct tensor)
    x = x + i
    lst.append(x)

# lstt = torch.stack([x for _ in range(10)])

lstt = torch.stack(lst)

print(lstt.size())

print()

Update: With nested list of the same size

def tensorify(lst):
    """
    Convert a nested list of tensors (or numbers) into a single tensor.

    The list must not have varying lengths within a dimension.
    Nested list of lengths [D1, D2, ..., DN] -> tensor of size [D1, D2, ..., DN].

    :return: the stacked tensor
    """
    # already a tensor: nothing to do
    if isinstance(lst, torch.Tensor):
        return lst
    # base case: the current list is not nested anymore, so make it into a tensor
    if not isinstance(lst[0], list):
        if isinstance(lst[0], torch.Tensor):
            return torch.stack(lst, dim=0)
        # the elements of lst are floats or something like that
        return torch.tensor(lst)
    # recursive case: tensorify each sub-list, then stack along a new leading dimension
    # (a new list is built so the caller's list is not modified)
    return torch.stack([tensorify(sub) for sub in lst], dim=0)

Here are a few unit tests (I didn’t write more tests, but it worked with my real code, so I trust it’s fine. Feel free to help me by adding more tests if you want):

def test_tensorify():
    t = [1, 2, 3]
    print(tensorify(t).size())
    tt = [t, t, t]
    print(tensorify(tt))
    ttt = [tt, tt, tt]
    print(tensorify(ttt))

if __name__ == '__main__':
    test_tensorify()
    print('Done\a')

When nested lists are variable length

related for variable length:

- python - converting list of tensors to tensors pytorch - Stack Overflow

- Nested list of variable length to a tensor

I didn’t read those very carefully, but I assume they must be padding somehow (my guess is that you need to calculate the largest length/dimension to pad to and then do some sort of recursion like I did above).
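For a single level of nesting, one way to do such padding is torch.nn.utils.rnn.pad_sequence. The sketch below is an assumption about what padding could look like, not a summary of the linked threads, and the example sequences are made up.

```python
import torch
from torch.nn.utils.rnn import pad_sequence

# three 1-D tensors of different lengths (made-up data)
seqs = [torch.tensor([1, 2, 3, 4]), torch.tensor([5, 6]), torch.tensor([7])]

# pad every sequence with zeros up to the longest length and stack them
padded = pad_sequence(seqs, batch_first=True, padding_value=0)

# keep the true lengths around so the padding can be ignored later
lengths = torch.tensor([len(s) for s in seqs])

print(padded)
print(padded.size(), lengths)
```

Deeper nesting with variable lengths at several levels would still need something recursive on top of this.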

If someone is looking into the performance aspects of this, I’ve done a small experiment. In my case, I needed to convert a list of scalar tensors into a single tensor.

import torch

torch.__version__ # 1.10.2

x = [torch.randn(1) for _ in range(10000)]

torch.cat(x).shape, torch.stack(x).shape # torch.Size([10000]), torch.Size([10000, 1])

%timeit torch.cat(x) # 1.5 ms ± 476 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

%timeit torch.cat(x).reshape(-1,1) # 1.95 ms ± 534 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

%timeit torch.stack(x) # 5.36 ms ± 643 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

My conclusion is that even if you want to have the additional dimension of torch.stack, using torch.cat and then reshape is better.
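For anyone who wants to repeat this outside IPython, here is a rough sketch using the standard timeit module instead of %timeit. The number of repetitions is an arbitrary choice, and the timings will differ by machine and PyTorch version, so none are shown.

```python
import timeit

import torch

x = [torch.randn(1) for _ in range(10000)]

# both routes produce the same [10000, 1] tensor for one-element inputs
via_stack = torch.stack(x)
via_cat = torch.cat(x).reshape(-1, 1)
print(via_stack.size(), torch.equal(via_stack, via_cat))

candidates = [
    ("cat + reshape", lambda: torch.cat(x).reshape(-1, 1)),
    ("stack", lambda: torch.stack(x)),
]
runs = 100  # arbitrary number of repetitions
for name, fn in candidates:
    seconds = timeit.timeit(fn, number=runs)
    print(f"{name}: {seconds / runs * 1e3:.2f} ms per call")
```

Note that the equivalence relies on every tensor in the list having exactly one element; for other shapes the reshape target has to be worked out separately.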

Works, but in many other cases torch.FloatTensor(a) produces

TypeError: expected TensorOptions(dtype=float, device=cpu ...

Therefore, often Nikronic’s answer is better: torch.tensor()

Sometimes: torch.from_numpy