Hi, how do we check for graph breaks when using torch.compile()? I used tlparse to get the logs, but the output ended with "Metrics were missing". Training runs fine with torch.compile(model, fullgraph=True). Does this mean there are no graph breaks?

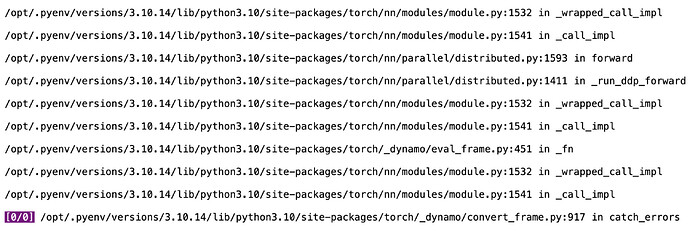

Hmm, this usually means that compile crashed midway through, before it finished dumping logs to tlparse.

A few questions:

(1) Does your program crash (with a stacktrace) when you run it?

(2) I think tlparse is generally great for debugging. Another, lighter-weight option specifically for graph breaks is running with TORCH_LOGS=graph_breaks. Does that tell you anything?

(3) Finally, if you think your error is easy to reproduce (e.g. by cloning a GitHub repo and installing dependencies), you can file an issue on the PyTorch GitHub and I or someone else can take a look.

- The program doesn't crash with any errors. I do get decent performance gains from torch.compile(model, fullgraph=True).

tlparse /tmp/tracedir/dedicated_log_torch_trace_rank_0_jk_xflq1.log -o ~/tracedir-out/

Detected rank: Some(0)

[00:00:00] [########################################] 6.94 MiB/6.94 MiB [192.78 MiB/s] (0s)

Stats { ok: 759, other_rank: 0, fail_glog: 0, fail_json: 0, fail_payload_md5: 0, fail_dynamo_guards_json: 0, fail_parser: 0, unknown: 0 }

Stats { ok: 760, other_rank: 0, fail_glog: 0, fail_json: 0, fail_payload_md5: 0, fail_dynamo_guards_json: 0, fail_parser: 0, unknown: 0 }

TORCH_LOGS=graph_breaks doesn't give anything informative. The only logs I get are the following:

[rank1]:W0321 23:10:13.467000 457758 site-packages/torch/_dynamo/backends/distributed.py:89] [0/0] Some buckets were extended beyond their requested parameter capacities in order to ensure each subgraph has an output node, required for fx graph partitioning. This can be the case when a subgraph would have only contained nodes performing inplace mutation, and returning no logical outputs. This should not be a problem, unless it results in too few graph partitions for optimal DDP performance.

[rank0]:W0321 23:10:13.493000 457757 site-packages/torch/_dynamo/backends/distributed.py:89] [0/0] Some buckets were extended beyond their requested parameter capacities in order to ensure each subgraph has an output node, required for fx graph partitioning. This can be the case when a subgraph would have only contained nodes performing inplace mutation, and returning no logical outputs. This should not be a problem, unless it results in too few graph partitions for optimal DDP performance.

[rank1]:W0321 23:10:13.558000 457758 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] DDPOptimizer extended these buckets to ensure per-subgraph output nodes:

[rank1]:W0321 23:10:13.558000 457758 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] ┌─────────┬─────────────┬────────────────────────┐

[rank1]:W0321 23:10:13.558000 457758 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] │ Index │ Extra Ops │ Extra Param Size (b) │

[rank1]:W0321 23:10:13.558000 457758 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] ├─────────┼─────────────┼────────────────────────┤

[rank1]:W0321 23:10:13.558000 457758 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] │ 1 │ 1 │ 0 │

[rank1]:W0321 23:10:13.558000 457758 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] └─────────┴─────────────┴────────────────────────┘

[rank0]:W0321 23:10:13.574000 457757 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] DDPOptimizer extended these buckets to ensure per-subgraph output nodes:

[rank0]:W0321 23:10:13.574000 457757 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] ┌─────────┬─────────────┬────────────────────────┐

[rank0]:W0321 23:10:13.574000 457757 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] │ Index │ Extra Ops │ Extra Param Size (b) │

[rank0]:W0321 23:10:13.574000 457757 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] ├─────────┼─────────────┼────────────────────────┤

[rank0]:W0321 23:10:13.574000 457757 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] │ 1 │ 1 │ 0 │

[rank0]:W0321 23:10:13.574000 457757 site-packages/torch/_dynamo/backends/distributed.py:106] [0/0] └─────────┴─────────────┴────────────────────────┘

/opt/.pyenv/versions/3.10.14/lib/python3.10/site-packages/torch/_inductor/lowering.py:1713: UserWarning: Torchinductor does not support code generation for complex operators. Performance may be worse than eager.

warnings.warn(

/opt/.pyenv/versions/3.10.14/lib/python3.10/site-packages/torch/_inductor/lowering.py:1713: UserWarning: Torchinductor does not support code generation for complex operators. Performance may be worse than eager.

warnings.warn(

- I do have a complex codebase. I could try the minifier tool, but from past PyTorch issues it seems like it doesn't work well.

If it is compiling and running successfully with fullgraph=True, then there aren't any graph breaks?

@jjjj It does compile successfully with fullgraph=True.

jjjj is right: this means that your code is compiling without any graph breaks. Is there another question here?
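To see why fullgraph=True is enough, here is a minimal sketch that compiles a function which traces into one graph next to a hypothetical one with a forced break. backend="eager" is an assumption made only so the check skips Inductor code generation. Graph breaks are detected by Dynamo before any backend runs, so the behaviour should be the same with the default backend.

```python
import torch
import torch._dynamo


def breaks_graph(x):
    # Hypothetical function with a deliberate graph break (illustration only).
    y = torch.sin(x)
    torch._dynamo.graph_break()
    return torch.cos(y)


def no_break(x):
    # Everything here is traceable, so Dynamo captures a single graph.
    return torch.cos(torch.sin(x))


x = torch.randn(8)

# fullgraph=True succeeds when the whole function fits in one graph.
ok = torch.compile(no_break, backend="eager", fullgraph=True)
ok(x)

# fullgraph=True turns the graph break into an exception instead of a
# silent fallback to eager execution.
strict = torch.compile(breaks_graph, backend="eager", fullgraph=True)
try:
    strict(x)
    raised = False
except Exception as err:  # typically torch._dynamo.exc.Unsupported
    raised = True
    print("graph break detected:", type(err).__name__)
```

Every (re)compilation of a function compiled with fullgraph=True is held to the same rule. A training run that finishes without such an exception therefore only compiled break-free graphs. As far as I know, the bucket splits reported by DDPOptimizer in the log above are a separate, intentional partitioning of the captured graph and are not graph breaks.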