Hello!

Disclaimer: my first post on Stack Overflow comes to mind, where I got flamed for poor formatting, so I apologize in advance if I have broken a formatting rule here.

I am creating a neural network model to mimic three tasks used for clinical assessments in stroke survivors. The inputs to the model are word embeddings from GloVe's wiki-gigaword vectors, and the outputs are sequences of numbers representing the letters needed to spell the word that corresponds to each vector.
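
As a rough illustration of the embedding side, here is a minimal sketch of reading GloVe's plain-text format (one token per line, followed by its vector components) and keeping only the words of interest. The function name `load_glove`, the `wanted` argument, and the three-dimensional sample lines are made up for the example; real GloVe vectors are much longer (300 values in my case).

```python
import io

def load_glove(handle, wanted):
    """Parse GloVe's text format: a token, then its space-separated vector values."""
    vocab, vectors = [], []
    for line in handle:
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        if word in wanted:
            vocab.append(word)
            vectors.append([float(v) for v in values])
    return vocab, vectors

# Tiny made-up stand-in for a real GloVe file.
sample = io.StringIO("cat 0.1 0.2 0.3\ndog 0.4 0.5 0.6\nthe 0.0 0.0 0.1\n")
vocab, vectors = load_glove(sample, wanted={"cat", "dog"})
print(vocab)       # ['cat', 'dog']
print(vectors[1])  # [0.4, 0.5, 0.6]
```

The resulting list of vectors could then be converted with `torch.tensor(vectors)` and used as the pretrained weight matrix.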

The three tasks I am modeling are a meaning-to-word task, a word repetition task, and a word-to-meaning task (the reverse of the first task). Note that I am NOT using audio files or images. I have previously created this model with LENS (the Light, Efficient Network Simulator), but I am transitioning to Python for the ease of data prep, implementation, etc.
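
To make the three tasks concrete, here is a minimal sketch of how each one could pair an input with a target for a single word. Everything named here is hypothetical: the plain a-z symbol inventory (a stand-in for whatever symbol set the network actually uses), the padding index 0, the helper names `encode_word` and `make_examples`, and the short made-up embedding.

```python
# Hypothetical symbol inventory; index 0 is reserved for padding.
SYMBOLS = "abcdefghijklmnopqrstuvwxyz"
SYMBOL_TO_INDEX = {ch: i + 1 for i, ch in enumerate(SYMBOLS)}

def encode_word(word, max_len=8):
    """Turn a word into a fixed-length list of symbol indices, padded with 0."""
    indices = [SYMBOL_TO_INDEX[ch] for ch in word[:max_len]]
    return indices + [0] * (max_len - len(indices))

def make_examples(word, embedding):
    """Return an (input, target) pair per task for one word."""
    form = encode_word(word)
    return {
        "meaning_to_word": (embedding, form),   # embedding in, spelling out
        "repetition": (form, form),             # spelling in, same spelling out
        "word_to_meaning": (form, embedding),   # spelling in, embedding out
    }

examples = make_examples("cat", [0.1, 0.2, 0.3])
print(examples["repetition"][0])  # [3, 1, 20, 0, 0, 0, 0, 0]
```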

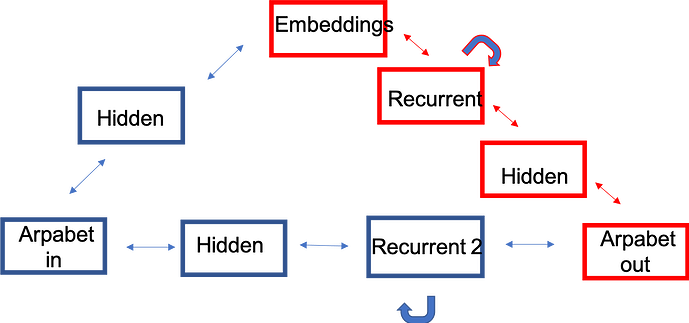

I am providing a picture of the network I am trying to create. The layers I have labeled arpabet_in/out represent the letter/number sequences that are used to represent each word, and embeddings are the word embeddings from GloVe.

The code I am providing reflects the part of the model I am trying to create that is colored in red in the image. It works! My question: can I add the remaining structure (colored in blue) of my intended network when I define the bidirectional network? If so, can anyone offer guidance on how to do this, or point me toward some code resources that would help?

    import torch
    import torch.nn as nn

    class BiDir(nn.Module):
        def __init__(self, weights, emb_dim, hid_dim, rnn_num_layers=2):
            super().__init__()
            # Embedding layer using GloVe as the pretrained weights
            self.embedding = nn.Embedding.from_pretrained(weights)
            # Bidirectional GRU with rnn_num_layers stacked layers
            self.rnn = nn.GRU(emb_dim, hid_dim, bidirectional=True, num_layers=rnn_num_layers)
            # l1 takes one final hidden state per layer and direction, concatenated
            self.l1 = nn.Linear(hid_dim * 2 * rnn_num_layers, 256)
            self.l2 = nn.Linear(256, 2)

        def forward(self, samples):
            # Forward pass
            embedded = self.embedding(samples)
            _, last_hidden = self.rnn(embedded)
            # last_hidden has shape (num_layers * 2, batch, hid_dim)
            hidden_list = [last_hidden[i, :, :] for i in range(last_hidden.shape[0])]
            # Concatenate the final hidden states into one vector per sample
            encoded = torch.cat(hidden_list, dim=1)
            # ReLU and sigmoid activation functions
            encoded = torch.relu(self.l1(encoded))
            encoded = torch.sigmoid(self.l2(encoded))
            return encoded

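    # weights = pretrained GloVe embeddings of length 300
    # 1392 words in the current model
    model = BiDir(weights, 300, 1392, rnn_num_layers=2)
    # MultiLabelSoftMarginLoss applies a sigmoid internally to its input
    criterion = torch.nn.MultiLabelSoftMarginLoss()
    optimizer = torch.optim.Adam(model.parameters())

    # Inspect the vocabulary mappings (string-to-index and index-to-string)
    TEXT.vocab.stoi
    TEXT.vocab.itos[50]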

    # Training loop; range(1) runs a single epoch, use range(20) for 20 epochs
    for epoch in range(1):
        for batch in dataset_iter:
            optimizer.zero_grad()
            output = model(batch.text)
            loss = criterion(output, batch.label)
            loss.backward()
            optimizer.step()

            with torch.no_grad():
                # Element-wise absolute error between outputs and labels
                acc = torch.abs(output - batch.label).view(-1)
                # acc = acc.sum() / acc.size()[0] * 100.
                # Calculating the accuracy as 100 * (1 - mean absolute error)
                acc = (1. - acc.sum() / acc.size()[0]) * 100.

        print(f'Epoch({epoch+1}) loss: {loss.item()}, accuracy: {acc:.1f}%')
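
On the question of adding the remaining pathways, one possible layout is sketched here. It is only an illustration under assumptions: since the figure is not reproduced in text, the two input pathways, the shared hidden layer, the two output heads, the class name `ThreeTaskNet`, the task names, and all layer sizes are hypothetical rather than a description of the intended network.

```python
import torch
import torch.nn as nn

class ThreeTaskNet(nn.Module):
    """Hypothetical layout: two input pathways, a shared hidden layer, two output heads."""

    def __init__(self, emb_dim=300, n_symbols=40, max_len=10, hid_dim=128):
        super().__init__()
        self.max_len = max_len
        self.n_symbols = n_symbols
        # Meaning input pathway: word embedding -> hidden
        self.meaning_in = nn.Linear(emb_dim, hid_dim)
        # Form input pathway: symbol indices -> bidirectional GRU -> hidden
        self.symbol_emb = nn.Embedding(n_symbols, 32)
        self.form_in = nn.GRU(32, hid_dim, bidirectional=True)
        self.form_proj = nn.Linear(2 * hid_dim, hid_dim)
        # Hidden layer shared by all three tasks
        self.shared = nn.Linear(hid_dim, hid_dim)
        # Output heads: one score per symbol per position, or an embedding
        self.form_out = nn.Linear(hid_dim, max_len * n_symbols)
        self.meaning_out = nn.Linear(hid_dim, emb_dim)

    def forward(self, x, task):
        if task == "meaning_to_word":
            h = self.meaning_in(x)                       # x: (batch, emb_dim)
        else:
            # x: (seq_len, batch) tensor of symbol indices
            _, last = self.form_in(self.symbol_emb(x))   # last: (2, batch, hid_dim)
            h = self.form_proj(torch.cat([last[0], last[1]], dim=1))
        h = torch.relu(self.shared(h))
        if task == "word_to_meaning":
            return self.meaning_out(h)                   # (batch, emb_dim)
        # "meaning_to_word" and "repetition" both produce symbol scores
        return self.form_out(h).view(-1, self.max_len, self.n_symbols)

net = ThreeTaskNet()
symbols = torch.randint(0, 40, (10, 4))  # random stand-in for encoded words
print(net(torch.randn(4, 300), "meaning_to_word").shape)
print(net(symbols, "word_to_meaning").shape)
```

Routing on a `task` argument keeps all pathways in one module, so a single optimizer can update the shared layer regardless of which task a batch belongs to.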