Binary classification using LSTM, loss keeps going up

The inputs have the following shapes: x_train is 2931×14×1, x_test is 231×14×1, y_train is 2931×1, and y_test is 231×1. x_train holds 14 technical indicators (MA, MACD, KDJ, and others) for a single stock. I use the LSTM model below for binary classification, but the loss keeps going up no matter what learning rate I set. What is wrong?
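As a sanity check on the shapes, here is a minimal sketch using random placeholder tensors. It assumes the sizes implied by the comments in the training loop (each `x_train[i]` is `[14, 1]`, each `y_train[i]` is `[1]`); the values are made up and only the shapes matter. It shows what the reshape in the training loop produces for a single sample.

```python
import torch

# Placeholder data: random values, only the shapes mirror the post (assumed).
x_train = torch.randn(2931, 14, 1)                 # 2931 samples, 14 indicators
y_train = torch.randint(0, 2, (2931, 1)).float()   # 0/1 labels

sample = x_train[0]                      # shape [14, 1]
x = sample.view(1, 14).unsqueeze(1)      # shape [1, 1, 14]: (seq_len, batch, features)
target = y_train[0].unsqueeze(1)         # shape [1, 1], same shape as the model output

# forward() iterates with `for i in range(len(x))`, so this is the sequence length.
seq_len = len(x)
print(x.shape, target.shape, seq_len)
```

With this reshape the 14 indicators are treated as 14 features of a single time step, so the loop in `forward` runs exactly once per sample.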

    import torch
    import torch.nn as nn
    import numpy as np
    import matplotlib.pyplot as plt
    import csv
    import pandas as pd
    from torch.utils.data import TensorDataset, DataLoader
    from sklearn.preprocessing import StandardScaler

    class LSTM(nn.Module):
        def __init__(self, timesteps, batch_size, input_size, hidden_size, output_size):
            super(LSTM, self).__init__()
            self.timesteps = timesteps
            self.batch_size = batch_size
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.output_size = output_size
            self.Wfh, self.Wfx, self.bf = self.Weight_bias(self.input_size, self.hidden_size)
            self.Wih, self.Wix, self.bi = self.Weight_bias(self.input_size, self.hidden_size)
            self.Woh, self.Wox, self.bo = self.Weight_bias(self.input_size, self.hidden_size)
            self.Wch, self.Wcx, self.bc = self.Weight_bias(self.input_size, self.hidden_size)
            self.Wp = torch.randn(self.output_size, self.hidden_size)
            self.bp = torch.randn(self.output_size)
            self.f = torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)
            self.i = torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)
            self.o = torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)
            self.ct = torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)
            self.h = torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)
            self.c = torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)
            self.fList = []
            self.iList = []
            self.oList = []
            self.ctList = []
            self.hList = []
            self.cList = []
            self.preList = []
            self.fList.append(self.f)
            self.iList.append(self.i)
            self.oList.append(self.o)
            self.ctList.append(self.ct)
            self.hList.append(self.h)
            self.cList.append(self.c)
            self.prediction = nn.Linear(hidden_size, output_size)
            self.sigmoid = nn.Sigmoid()

        def Weight_bias(self, input_size, hidden_size):
            return (
                nn.Parameter(torch.randn(hidden_size, hidden_size) * 0.01),
                nn.Parameter(torch.randn(input_size, hidden_size) * 0.01),
                nn.Parameter(torch.randn(hidden_size) * 0.01),
            )

        def forward(self, x):
            for i in range(len(x)):
                self.f = torch.sigmoid(self.hList[-1] @ self.Wfh + x[i] @ self.Wfx + self.bf)
                self.i = torch.sigmoid(self.hList[-1] @ self.Wih + x[i] @ self.Wix + self.bi)
                self.o = torch.sigmoid(self.hList[-1] @ self.Woh + x[i] @ self.Wox + self.bo)
                self.ct = torch.tanh(self.hList[-1] @ self.Wch + x[i] @ self.Wcx + self.bc)
                self.c = self.f * self.cList[-1] + self.i * self.ct
                self.h = self.o * torch.tanh(self.c)
                self.fList.append(self.f)
                self.iList.append(self.i)
                self.oList.append(self.o)
                self.ctList.append(self.ct)
                self.hList.append(self.h)
                self.cList.append(self.c)
            # sigmoid
            return self.sigmoid(self.prediction(self.h))

        def reset(self):
            self.hList = [torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)]
            self.fList = [torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)]
            self.iList = [torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)]
            self.oList = [torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)]
            self.ctList = [torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)]
            self.cList = [torch.zeros(self.batch_size, self.hidden_size, requires_grad=True)]
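Written out, the `forward` method above is meant to implement the standard LSTM cell update. In the row-vector convention the code uses (the input multiplies the weight matrix from the left), and with $\odot$ denoting the elementwise product:

$$
\begin{aligned}
f_t &= \sigma(h_{t-1} W_{fh} + x_t W_{fx} + b_f) \\
i_t &= \sigma(h_{t-1} W_{ih} + x_t W_{ix} + b_i) \\
o_t &= \sigma(h_{t-1} W_{oh} + x_t W_{ox} + b_o) \\
\tilde{c}_t &= \tanh(h_{t-1} W_{ch} + x_t W_{cx} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

Here $\tilde{c}_t$ corresponds to `self.ct`, and the returned prediction is the sigmoid of `self.prediction` applied to the last hidden state. In the training loop, where the sequence length is 1 and `reset()` is called after every sample, $h_{t-1}$ and $c_{t-1}$ are always the zero initial states.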

    def LSTMNeural(x_train, y_train, x_test, y_test):
        timesteps = 1
        L = 1
        batch_size = 1
        Epoch = 50

        # train
        l = LSTM(timesteps, batch_size, 14, 1, 1)
        lossList = []
        # Binary problem, so BCELoss. CrossEntropyLoss is for multi-class problems
        # and raises "IndexError: Target 1 is out of bounds" here.
        criterion = nn.BCELoss()
        optimizer = torch.optim.SGD(l.parameters(), lr=1e-4)
        for epoch in range(Epoch):
            for i in range(len(x_train)):
                # print("x_train[i].shape:", x_train[i].shape)  # torch.Size([14, 1])
                # print("y_train[i].shape:", y_train[i].shape)  # torch.Size([1])
                x = x_train[i].view(1, 14).unsqueeze(1)
                out = l(x)
                loss = criterion(out, y_train[i].unsqueeze(1))
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                l.reset()
            lossList.append(loss.item())
            if epoch % 10 == 0:
                print(f"Epoch {epoch},loss:{loss.item()}")

        plt.plot(lossList)
        plt.title("Loss")
        plt.xlabel("Epoch")
        plt.ylabel("loss")
        plt.show()

        resultList = []
        for i in range(len(x_test)):
            x = x_test[i].view(1, 14).unsqueeze(1)
            # forward propagation
            pre = l.forward(x)
            resultList.append(pre)

        # Forecast results showcase
        result = []
        for i in range(len(resultList)):
            result.append(torch.squeeze(resultList[i], dim=0).detach().numpy())
        threshold = 0.5
        result_binary = [1 if item > threshold else 0 for item in result]
        test_data = [item.numpy() for item in y_test]
        plt.plot(np.array(test_data), label="Ground Truth", marker='o')
        plt.plot(np.array(result_binary), label="Predictions", marker='s')
        plt.legend()
        plt.show()
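One thing that may be worth ruling out: if `lossList` records only the loss of the last sample in each epoch (as the listing above appears to do), then with `batch_size = 1` that single value can rise even while the average loss over the epoch falls. The sketch below illustrates this with made-up probabilities; `bce` and `epoch_summary` are hypothetical helper names, not part of the code above.

```python
import math

def bce(p, y, eps=1e-7):
    """Binary cross-entropy for one predicted probability p and a 0/1 label y."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

def epoch_summary(probs, labels):
    """Return (mean loss over the epoch, loss of the last sample only)."""
    losses = [bce(p, y) for p, y in zip(probs, labels)]
    return sum(losses) / len(losses), losses[-1]

# Made-up predictions for two epochs: the model improves on average,
# but the last sample happens to get worse.
labels = [1, 0, 1, 1]
epoch_1 = [0.5, 0.5, 0.5, 0.6]
epoch_2 = [0.8, 0.2, 0.8, 0.4]

mean_1, last_1 = epoch_summary(epoch_1, labels)
mean_2, last_2 = epoch_summary(epoch_2, labels)
print(f"mean loss: {mean_1:.3f} -> {mean_2:.3f}")
print(f"last-sample loss: {last_1:.3f} -> {last_2:.3f}")
```

In the training loop, the equivalent would be to accumulate `loss.item()` over all samples and append the average to `lossList` once per epoch, which gives a curve that reflects the whole training set.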

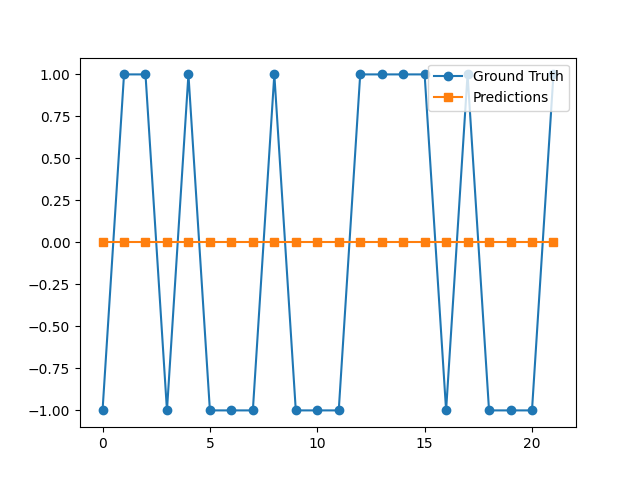

The prediction results in a straight line: