=== Software ===

python : 3.7.6

fastai : 1.0.60

fastprogress : 0.2.2

torch : 1.4.0

torch cuda : 10.1

Hi all,

I’m a beginner and I’ve run into a problem.

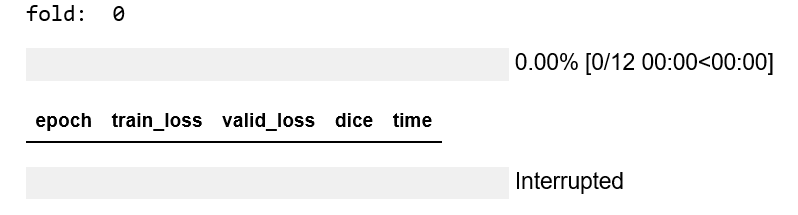

Problem: running the 12th cell (`In [12]` in a Jupyter notebook) raises `BrokenPipeError: [Errno 32] Broken pipe`.

The error is raised by this line of the cell:

```python
learn.fit_one_cycle(12, lr, callbacks = [AccumulateStep(learn,n_acc)])
```

The full code of `In [12]`:

```python
scores, best_thrs = [],[]

for fold in range(nfolds):
    print('fold: ', fold)
    data = get_data(fold)
    learn = unet_learner(data, models.resnet34, metrics=[dice])
    learn.clip_grad(1.0);
    set_BN_momentum(learn.model)

    #fit the decoder part of the model keeping the encoder frozen
    lr = 1e-3
    learn.fit_one_cycle(12, lr, callbacks = [AccumulateStep(learn,n_acc)])

    #fit entire model with saving on the best epoch
    learn.unfreeze()
    learn.fit_one_cycle(12, slice(lr/80, lr/2), callbacks=[AccumulateStep(learn,n_acc)])
    learn.save('fold'+str(fold));

    #prediction on val and test sets
    preds, ys = pred_with_flip(learn)
    pt, _ = pred_with_flip(learn,DatasetType.Test)
    if fold == 0: preds_test = pt
    else: preds_test += pt

    #convert predictions to byte type and save
    preds_save = (preds*255.0).byte()
    torch.save(preds_save, 'preds_fold'+str(fold)+'.pt')
    np.save('items_fold'+str(fold), data.valid_ds.items)

    #remove noise
    preds[preds.view(preds.shape[0],-1).sum(-1) < noise_th,...] = 0.0

    #optimal threshold
    #The best way would be collecting all oof predictions followed by a single threshold
    #calculation. However, it requires too much RAM for high image resolution
    dices = []
    thrs = np.arange(0.01, 1, 0.01)
    for th in progress_bar(thrs):
        preds_m = (preds>th).long()
        dices.append(dice_overall(preds_m, ys).mean())
    dices = np.array(dices)
    scores.append(dices.max())
    best_thrs.append(thrs[dices.argmax()])

    if fold != nfolds-1: del preds, ys, preds_save
    gc.collect()
    torch.cuda.empty_cache()

preds_test /= nfolds
```

After running it, I get the following traceback:

```
BrokenPipeError                           Traceback (most recent call last)
<ipython-input-12-13acb8b35833> in <module>
     10     #fit the decoder part of the model keeping the encode frozen
     11     lr = 1e-3
---> 12     learn.fit_one_cycle(12, lr, callbacks = [AccumulateStep(learn,n_acc)])
     13 
     14     #fit entire model with saving on the best epoch

~\anaconda3\lib\site-packages\fastai\train.py in fit_one_cycle(learn, cyc_len, max_lr, moms, div_factor, pct_start, final_div, wd, callbacks, tot_epochs, start_epoch)
     21     callbacks.append(OneCycleScheduler(learn, max_lr, moms=moms, div_factor=div_factor, pct_start=pct_start,
     22                                        final_div=final_div, tot_epochs=tot_epochs, start_epoch=start_epoch))
---> 23     learn.fit(cyc_len, max_lr, wd=wd, callbacks=callbacks)
     24 
     25 def fit_fc(learn:Learner, tot_epochs:int=1, lr:float=defaults.lr, moms:Tuple[float,float]=(0.95,0.85), start_pct:float=0.72,

~\anaconda3\lib\site-packages\fastai\basic_train.py in fit(self, epochs, lr, wd, callbacks)
    198         else: self.opt.lr,self.opt.wd = lr,wd
    199         callbacks = [cb(self) for cb in self.callback_fns + listify(defaults.extra_callback_fns)] + listify(callbacks)
--> 200         fit(epochs, self, metrics=self.metrics, callbacks=self.callbacks+callbacks)
    201 
    202     def create_opt(self, lr:Floats, wd:Floats=0.)->None:

~\anaconda3\lib\site-packages\fastai\basic_train.py in fit(epochs, learn, callbacks, metrics)
     97             cb_handler.set_dl(learn.data.train_dl)
     98             cb_handler.on_epoch_begin()
---> 99             for xb,yb in progress_bar(learn.data.train_dl, parent=pbar):
    100                 xb, yb = cb_handler.on_batch_begin(xb, yb)
    101                 loss = loss_batch(learn.model, xb, yb, learn.loss_func, learn.opt, cb_handler)

~\anaconda3\lib\site-packages\fastprogress\fastprogress.py in __iter__(self)
     45         except Exception as e:
     46             self.on_interrupt()
---> 47             raise e
     48 
     49     def update(self, val):

~\anaconda3\lib\site-packages\fastprogress\fastprogress.py in __iter__(self)
     39         if self.total != 0: self.update(0)
     40         try:
---> 41             for i,o in enumerate(self.gen):
     42                 if i >= self.total: break
     43                 yield o

~\anaconda3\lib\site-packages\fastai\basic_data.py in __iter__(self)
     73     def __iter__(self):
     74         "Process and returns items from `DataLoader`."
---> 75         for b in self.dl: yield self.proc_batch(b)
     76 
     77     @classmethod

~\anaconda3\lib\site-packages\torch\utils\data\dataloader.py in __iter__(self)
    277             return _SingleProcessDataLoaderIter(self)
    278         else:
--> 279             return _MultiProcessingDataLoaderIter(self)
    280 
    281     @property

~\anaconda3\lib\site-packages\torch\utils\data\dataloader.py in __init__(self, loader)
    717             #     before it starts, and __del__ tries to join but will get:
    718             #     AssertionError: can only join a started process.
--> 719             w.start()
    720             self._index_queues.append(index_queue)
    721             self._workers.append(w)

~\anaconda3\lib\multiprocessing\process.py in start(self)
    110                'daemonic processes are not allowed to have children'
    111         _cleanup()
--> 112         self._popen = self._Popen(self)
    113         self._sentinel = self._popen.sentinel
    114         # Avoid a refcycle if the target function holds an indirect

~\anaconda3\lib\multiprocessing\context.py in _Popen(process_obj)
    221     @staticmethod
    222     def _Popen(process_obj):
--> 223         return _default_context.get_context().Process._Popen(process_obj)
    224 
    225 class DefaultContext(BaseContext):

~\anaconda3\lib\multiprocessing\context.py in _Popen(process_obj)
    320         def _Popen(process_obj):
    321             from .popen_spawn_win32 import Popen
--> 322             return Popen(process_obj)
    323 
    324     class SpawnContext(BaseContext):

~\anaconda3\lib\multiprocessing\popen_spawn_win32.py in __init__(self, process_obj)
     87             try:
     88                 reduction.dump(prep_data, to_child)
---> 89                 reduction.dump(process_obj, to_child)
     90             finally:
     91                 set_spawning_popen(None)

~\anaconda3\lib\multiprocessing\reduction.py in dump(obj, file, protocol)
     58 def dump(obj, file, protocol=None):
     59     '''Replacement for pickle.dump() using ForkingPickler.'''
---> 60     ForkingPickler(file, protocol).dump(obj)
     61 
     62 #

BrokenPipeError: [Errno 32] Broken pipe
```
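From the last frames, the error happens while PyTorch's `DataLoader` starts its worker processes: on Windows, `multiprocessing` uses the spawn method (`popen_spawn_win32`) and serializes the worker with `ForkingPickler`. The same `dataloader.py` frame shows that the single-process path (`_SingleProcessDataLoaderIter`) is taken instead when no worker processes are used. As a rough diagnostic, here is a minimal sketch, assuming the failure comes from something in the data pipeline that cannot be pickled. The helper name `is_picklable` is my own (hypothetical), not part of fastai or PyTorch:

```python
from multiprocessing.reduction import ForkingPickler


def is_picklable(obj):
    """Hypothetical helper: return True if `obj` can be serialized with
    ForkingPickler, the pickler that the spawn start method uses to send
    the worker process object to the child process."""
    try:
        ForkingPickler.dumps(obj)
    except Exception:
        # Pickling failures, e.g. for lambdas or generator objects
        return False
    return True


if __name__ == "__main__":
    print(is_picklable([1, 2, 3]))             # True
    print(is_picklable(lambda x: x))           # False
    print(is_picklable(x for x in range(3)))   # False
```

In the notebook one could try something like `is_picklable(data.train_ds)`. A `False` suggests that object cannot be sent to a spawned worker. A `True` does not rule out other causes: for example, a function defined in the notebook pickles by reference but may not be importable in the spawned child.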

Source code: the Kaggle kernel "Hypercolumns pneumothorax fastai [0.831 LB]".

Thanks all.