Should the predictions and targets passed to `F.ctc_loss` be on the CPU or the GPU?

Here is my implementation:

```python
import torch
import torch.nn.functional as F


def pytorch_ctc_loss(pre, gt, gtlen):
    """
    Input:
        pre   : (T, B, C), FloatTensor
        gt    : (sum(target_lengths)), IntTensor
        gtlen : B, IntTensor
    """
    pre = F.log_softmax(pre, dim=-1)
    # Every sequence in the batch uses all T frames.
    prlen = torch.IntTensor([pre.size(0)] * pre.size(1))
    return F.ctc_loss(pre, gt.cpu(), prlen, gtlen.cpu(), blank=0)
```

Here `pre` is on CUDA.
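For comparison, here is a minimal sketch of a variant that moves every argument to the same device as `pre`. The error below names `ctc_loss_gpu`, PyTorch's native CUDA kernel, which expects GPU targets, so keeping everything on one device avoids the mismatch regardless of which path is taken. The helper name `pytorch_ctc_loss_same_device` is hypothetical. It also casts the integer arguments to `torch.long`; since the `CTCLoss` docs list `int32` as a cuDNN requirement, this assumes giving up cuDNN in favour of always using the native kernel is acceptable.

```python
import torch
import torch.nn.functional as F


def pytorch_ctc_loss_same_device(pre, gt, gtlen):
    """CTC loss with all arguments on pre's device (hypothetical helper).

    pre   : (T, B, C) float tensor of unnormalised scores
    gt    : (sum(target_lengths),) int tensor of concatenated targets
    gtlen : (B,) int tensor of target lengths
    """
    device = pre.device
    log_probs = F.log_softmax(pre, dim=-1)
    T, B = log_probs.shape[:2]
    # Every sequence uses all T frames; build the lengths directly on `device`.
    input_lengths = torch.full((B,), T, dtype=torch.long, device=device)
    return F.ctc_loss(
        log_probs,
        gt.to(device=device, dtype=torch.long),
        input_lengths,
        gtlen.to(device=device, dtype=torch.long),
        blank=0,
    )


# Example: CPU int32 targets, scores on the GPU if one is available.
torch.manual_seed(0)
device = "cuda" if torch.cuda.is_available() else "cpu"
pre = torch.randn(14, 2, 95, device=device)
gt = torch.randint(1, 95, (8,), dtype=torch.int32)  # labels 1..94, 0 is blank
gtlen = torch.tensor([4, 4], dtype=torch.int32)
loss = pytorch_ctc_loss_same_device(pre, gt, gtlen)
print(loss.item())
```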

Most of the time this function works fine, but SOMETIMES it throws:

```
RuntimeError: Tensor for argument #2 'targets' is on CPU, but expected it to be on GPU (while checking arguments for ctc_loss_gpu)
```

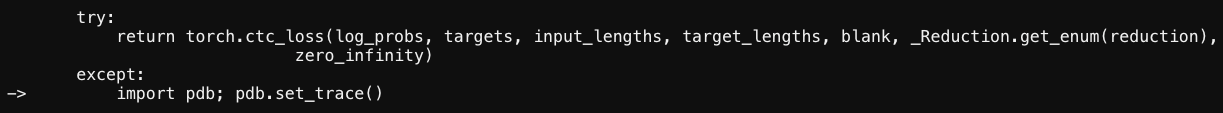
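The error mentions `ctc_loss_gpu`, the native CUDA kernel, not the cuDNN one. As I understand it, `F.ctc_loss` only takes the cuDNN path (the one that has been accepting the CPU targets here) when the conditions in the `torch.nn.CTCLoss` docs hold: concatenated targets, every input length equal to T, `blank=0`, target lengths at most 256, and `int32` integer arguments. As far as I can tell, recent PyTorch sources also skip cuDNN when a target is longer than its input. Otherwise PyTorch falls back to the native kernel, which wants GPU targets. The sketch below lists which conditions fail for a batch. `cudnn_ctc_blockers` is a hypothetical helper, not a PyTorch API, and the exact conditions may differ between PyTorch versions.

```python
import torch


def cudnn_ctc_blockers(log_probs, targets, input_lengths, target_lengths, blank=0):
    """Return reasons why cuDNN CTC would likely be skipped (hypothetical helper).

    An empty list means cuDNN should be eligible, provided PyTorch was built
    with cuDNN and torch.backends.cudnn.enabled is True.
    """
    reasons = []
    T = log_probs.size(0)
    if not log_probs.is_cuda:
        reasons.append("log_probs is not on CUDA")
    if log_probs.dtype != torch.float32:
        reasons.append("log_probs is not float32")
    if blank != 0:
        reasons.append(f"blank = {blank}, not 0")
    if targets.dim() != 1:
        reasons.append("targets are not in concatenated (1-D) format")
    if targets.dtype != torch.int32:
        reasons.append(f"targets dtype is {targets.dtype}, not torch.int32")
    for b, (il, tl) in enumerate(zip(input_lengths.tolist(), target_lengths.tolist())):
        if il != T:
            reasons.append(f"input_lengths[{b}] = {il} != T = {T}")
        if tl > 256:  # documented limit
            reasons.append(f"target_lengths[{b}] = {tl} > 256")
        if tl > il:  # extra check in recent PyTorch sources (assumption)
            reasons.append(f"target_lengths[{b}] = {tl} > input_lengths[{b}] = {il}")
    return reasons


# Shapes and lengths taken from the pdb session of the failing call.
device = "cuda" if torch.cuda.is_available() else "cpu"
log_probs = torch.randn(14, 2, 95, device=device).log_softmax(-1)
targets = torch.randint(1, 95, (20,), dtype=torch.int32)
input_lengths = torch.tensor([14, 14], dtype=torch.int32)
target_lengths = torch.tensor([16, 4], dtype=torch.int32)
reasons = cudnn_ctc_blockers(log_probs, targets, input_lengths, target_lengths)
for reason in reasons:
    print(reason)
```

If this reading is right, the failing batch (target length 16 vs. input length 14) is exactly the kind of batch that would silently switch to the native kernel.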

When it does fail, I inspected the arguments with pdb and found:

```
(Pdb) p log_probs.shape
torch.Size([14, 2, 95])
(Pdb) p targets.shape
torch.Size([20])
(Pdb) p input_lengths
tensor([14, 14], dtype=torch.int32)
(Pdb) p target_lengths
tensor([16, 4], dtype=torch.int32)
(Pdb) p targets
tensor([41, 78, 84, 69, 82, 67, 79, 77, 77, 85, 78, 73, 67, 65, 84, 69, 16, 24,
        18, 17], dtype=torch.int32)
(Pdb) p log_probs
tensor([[[-1.6399, -5.8873, -5.7742, ..., -5.3770, -6.8403, -5.3823],
         [-2.8857, -5.2404, -5.5605, ..., -4.9667, -5.4475, -5.8055]],

        [[-2.0398, -5.3816, -4.9946, ..., -5.6343, -6.3017, -5.2780],
         [-2.8713, -4.9202, -4.8566, ..., -4.7667, -5.6632, -5.3870]],

        [[-3.1458, -5.6386, -5.0675, ..., -5.0270, -5.4826, -5.4561],
         [-3.0797, -5.2278, -4.9986, ..., -4.5412, -5.1524, -5.6291]],

        ...,

        [[-3.0120, -5.4773, -5.3341, ..., -4.6658, -5.0991, -5.6653],
         [-1.7960, -6.1763, -6.0949, ..., -4.8088, -6.0141, -5.6578]],

        [[-1.9499, -5.7898, -5.2879, ..., -4.7644, -5.2784, -5.6835],
         [-0.8562, -6.6550, -5.9762, ..., -4.9032, -6.6171, -5.8010]],

        [[-1.2654, -6.1024, -5.7864, ..., -4.8103, -5.6454, -6.5198],
         [-0.7837, -6.3323, -5.9075, ..., -5.6405, -6.6671, -6.6456]]],
       device='cuda:0', grad_fn=<LogSoftmaxBackward>)
```

I can't see any problem here.
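One thing in the pdb output may be worth checking: CTC needs at least one frame per label, plus one blank frame between each pair of identical adjacent labels, so a target of length L with r adjacent repeats needs T ≥ L + r frames. The first target has 16 labels including the adjacent pair `77, 77`, so it needs 17 frames, but only 14 are available; no valid alignment exists and its CTC loss is infinite (`F.ctc_loss` has a `zero_infinity` flag for this case). A small sketch for flagging such samples, using plain Python lists (call `.tolist()` on tensors first); `min_ctc_frames` and `infeasible_samples` are hypothetical helpers:

```python
def min_ctc_frames(labels):
    """Minimum number of frames CTC needs to emit `labels` (hypothetical helper).

    One frame per label, plus one blank frame between each pair of identical
    adjacent labels (otherwise CTC would merge them into one).
    """
    repeats = sum(1 for a, b in zip(labels, labels[1:]) if a == b)
    return len(labels) + repeats


def infeasible_samples(targets, input_lengths, target_lengths):
    """Return (index, frames_needed, frames_available) for samples that cannot fit."""
    bad = []
    offset = 0
    for b, (il, tl) in enumerate(zip(input_lengths, target_lengths)):
        labels = targets[offset:offset + tl]  # slice this sample out of the concatenated targets
        offset += tl
        needed = min_ctc_frames(labels)
        if needed > il:
            bad.append((b, needed, il))
    return bad


# Values from the pdb session above.
targets = [41, 78, 84, 69, 82, 67, 79, 77, 77, 85, 78, 73, 67, 65, 84, 69, 16, 24, 18, 17]
print(infeasible_samples(targets, [14, 14], [16, 4]))  # [(0, 17, 14)]
```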