Do we always need to calculate this 64*4*4 manually using the formula? I think there might be a better way of finding the number of features passed on to the fully connected layers; otherwise it could become quite cumbersome to calculate for thousands of layers.

Right now I'm doing it manually for every layer: first calculating the dimensions of the image, then the output of the convolution layer, then the max-pool layer, and so on, to find the final number of features.
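For reference, the manual calculation described here follows the standard output-size formula for convolution and pooling layers. Below is a minimal sketch, assuming a 32x32 input and three stages of conv (kernel 3, padding 1) followed by 2x2 max pooling. That architecture is only a guess at what yields 64*4*4, and `conv2d_out` is a hypothetical helper name.

```python
def conv2d_out(size, kernel, stride=1, padding=0, dilation=1):
    """Output size along one spatial dimension for Conv2d / MaxPool2d (floor mode)."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# Assumed network: 32x32 input, three conv + pool stages, 64 channels at the end
size = 32
for _ in range(3):
    size = conv2d_out(size, kernel=3, padding=1)  # 3x3 conv with padding 1 keeps the size
    size = conv2d_out(size, kernel=2, stride=2)   # 2x2 max pool halves it

flat_features = 64 * size * size  # channels * height * width
print(size, flat_features)
```

Doing this by hand for every layer is exactly the tedious part, which is why the replies below let PyTorch report the shape instead.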

Please guide me towards a better way.

Hi,

Let’s say you have already implemented your model, but you do not know the proper number of input features for the linear layer.

The manual approach would be like this:

You can run your model and add a print(x.shape) in the forward function right after self.pool. It will print the final shape, something like [batch, channel, height, width]. You then multiply channel, height, and width (everything except the batch dimension) to get the number of inputs.

For an automatic way, you can do this:

    class Net(nn.Module):
        def __init__(self):
            ...

        def forward(self, x):
            ...
            x = ...  # let's say x is the output of self.pool
            # flatten everything except the batch dimension and read off the feature count
            self.linear_input_size = x.view(x.size(0), -1).shape[1]
            ...
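Since the linear layer has to be created in `__init__`, one common variant is to push a dummy tensor through the convolutional part there and use the measured size directly. This is only a sketch under assumptions: the single conv layer, the `input_shape` argument, and the `_features` helper are made up for illustration and are not taken from the original model.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self, input_shape=(3, 32, 32)):  # assumed example input shape
        super().__init__()
        self.conv = nn.Conv2d(input_shape[0], 64, 3, padding=1)
        self.pool = nn.MaxPool2d(2, 2)
        # Run one dummy tensor through the conv part to measure its flattened size
        with torch.no_grad():
            dummy = torch.zeros(1, *input_shape)
            linear_input_size = self._features(dummy).flatten(1).shape[1]
        self.fc = nn.Linear(linear_input_size, 10)

    def _features(self, x):
        # hypothetical helper: everything that comes before the linear layer
        return self.pool(F.relu(self.conv(x)))

    def forward(self, x):
        x = self._features(x).flatten(1)  # keep the batch dimension, flatten the rest
        return self.fc(x)


model = Net()
print(model.fc.in_features)
```

The measured value is only valid for the input size used for the dummy tensor, so this fits the case where all images share one size.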

This thread may be able to help.

bests

Thank you very much @Nikronic, this helps a lot. It took me almost 2 days to find a solution like this.

You are welcome! I hope you solve other possible issues much faster.

Good luck

Thanks @Nikronic. I also wanted to ask: can I use nn.AdaptiveAvgPool1d(argument) as an alternative to it? I'm not sure if I can, although I will use what you suggested.

Thank You.

You are absolutely right, that is another possible solution!

However, since the model in your post contains an nn.MaxPool2d, you may want to use the same kind of pooling.

On top of that, the model is still working with images before the Linear layer, so 2d pooling is needed.

So, the alternative is nn.AdaptiveMaxPool2d. See its documentation.

The benefit of an adaptive pooling layer is that you explicitly define the desired output size, so no matter what the input size is, the model always produces tensors with an identical shape. Adaptive pooling (the average variant, nn.AdaptiveAvgPool2d) is also used in the official PyTorch implementation of the ResNet models right before the Linear layer. Please see this post.
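A quick way to see this is to feed feature maps of different spatial sizes through the same adaptive layer. This is just an illustrative sketch; the 64 channels and the two input sizes are arbitrary choices.

```python
import torch
import torch.nn as nn

pool = nn.AdaptiveMaxPool2d((4, 4))  # requested output height and width

pooled_shapes = []
for height, width in [(32, 32), (50, 70)]:
    feature_map = torch.randn(1, 64, height, width)
    pooled = pool(feature_map)
    pooled_shapes.append(tuple(pooled.shape))
    print((height, width), "->", tuple(pooled.shape))
```

Both inputs end up with the same shape, so the Linear layer that follows can be sized from the pooling output alone.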

Thanks @Nikronic, brother, you are such an awesome person, I must say. You helped me in a great way and you don’t know how much respect I have for you.

Please bear with me on this PyTorch journey.

Thank You.

Thank you so much! I really did not expect that. I really appreciate it.

Actually, I am trying to do (at least) the same thing that other great members/developers of PyTorch have done for me.

Feel free to ask your questions whenever you have any issues!

Bests

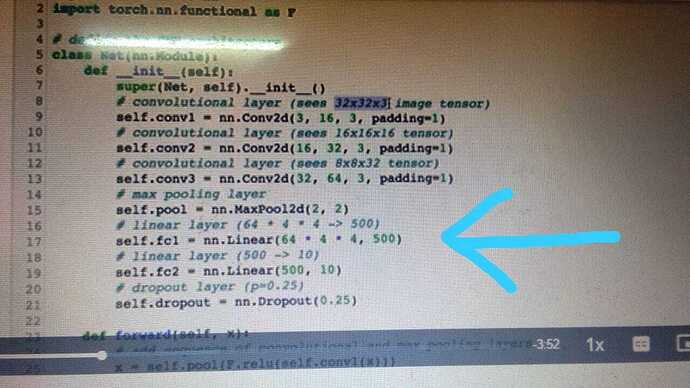

Hey @Nikronic, I was trying to implement it the way you told me, but I'm kind of stuck on how to pass on the output from the max-pool layers the way you described.

How should I write the rest? Conventionally I would have written

self.fc1 = nn.Linear(64*4*4, 500), but as I said, I don’t want to calculate the image dimension 4*4 manually.

Using which one of those methods?

- Printing shape

- Extracting shape by passing one arbitrary tensor

- Using AdaptiveMaxPool2d

Like, what should I put in nn.Linear() in the above code so that it automatically calculates the 64 * 4 * 4 for me in the first fully connected layer?

If your input images all have the same size, I would go with the print method. It takes just a few seconds, and since the input size is the same all the time, you never need to change the input size of the linear layer. You can also make sure the input always has a particular size using the transforms.Resize class.

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class Test(nn.Module):
        def __init__(self, pool_size=(4, 4)):
            super(Test, self).__init__()
            self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
            self.conv2 = nn.Conv2d(16, 32, 3, padding=1)
            self.conv3 = nn.Conv2d(32, 64, 3, padding=1)
            self.maxpool = nn.MaxPool2d(2, 2)
            # fill this in after one run of the model:
            # a 32x32 input prints [1, 64, 16, 16], so 64 * 16 * 16 features
            self.fc1 = nn.Linear(64 * 16 * 16, 500)
            self.fc2 = nn.Linear(500, 10)

        def forward(self, x):
            out = F.relu(self.conv1(x))
            out = F.relu(self.conv2(out))
            out = F.relu(self.conv3(out))
            out = self.maxpool(out)
            print(out.shape)  # use this to fill the input size of the linear layer
            out = out.view(out.size(0), -1)
            out = self.fc1(out)
            out = self.fc2(out)
            return out

    model = Test()
    x = torch.randn(1, 3, 32, 32)
    model(x)

But if your inputs have different sizes and you do not want to resize them to an identical shape, I prefer AdaptiveMaxPool2d. In this case:

    class Test(nn.Module):
        def __init__(self, pool_size=(4, 4)):
            super(Test, self).__init__()
            self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
            self.conv2 = nn.Conv2d(16, 32, 3, padding=1)
            self.conv3 = nn.Conv2d(32, 64, 3, padding=1)
            self.maxpool = nn.AdaptiveMaxPool2d(pool_size)
            self.fc1 = nn.Linear(64*pool_size[0]*pool_size[1], 500)
            self.fc2 = nn.Linear(500, 10)

        def forward(self, x):
            out = F.relu(self.conv1(x))
            out = F.relu(self.conv2(out))
            out = F.relu(self.conv3(out))
            out = self.maxpool(out)
            out = out.view(out.size(0), -1)
            out = self.fc1(out)
            out = self.fc2(out)
            return out

    model = Test()
    x = torch.randn(1, 3, 32, 32)
    model(x)
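A further option, not mentioned earlier in this thread, is `nn.LazyLinear`, which leaves the input size open and infers it on the first forward pass. The sketch below assumes a PyTorch version that ships lazy modules (1.8 or newer); the class name `LazyTest` is made up, and the layers mirror the fixed-size example with a single `nn.MaxPool2d`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LazyTest(nn.Module):  # hypothetical name, layers copied from the example above
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, 3, padding=1)
        self.conv3 = nn.Conv2d(32, 64, 3, padding=1)
        self.maxpool = nn.MaxPool2d(2, 2)
        self.fc1 = nn.LazyLinear(500)  # in_features is inferred on the first call
        self.fc2 = nn.Linear(500, 10)

    def forward(self, x):
        out = F.relu(self.conv1(x))
        out = F.relu(self.conv2(out))
        out = F.relu(self.conv3(out))
        out = self.maxpool(out)
        out = out.view(out.size(0), -1)
        out = self.fc1(out)
        return self.fc2(out)


model = LazyTest()
output = model(torch.randn(1, 3, 32, 32))  # dry run that materializes fc1
print(model.fc1.in_features)
```

The dry run should happen before the optimizer is created, because the weights of the lazy layer do not exist until then. As with the print method, the inferred size is tied to the input size of that first pass.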

Also, it would be great if you could wrap your code in a pair of ``` lines.

Thanks @Nikronic for this, you are so kind. I’m so sorry I could not reply earlier; I had a job interview, which unfortunately I did not pass. But thanks for all the trouble you went to for me, I owe you very much.

Thank you,

Don’t worry, you will find an appropriate job soon. Just do not give up!

@Nikronic by the way, do you know about the Fellowship Program? I think you could be the right candidate for it. Also, is there any way I can DM you, like LinkedIn etc.?

Another good way to find the feature dimensions going into the linear layer is to use the summary function from the torchsummary library.

    import torch.nn as nn
    import torch.nn.functional as F
    from torchsummary import summary

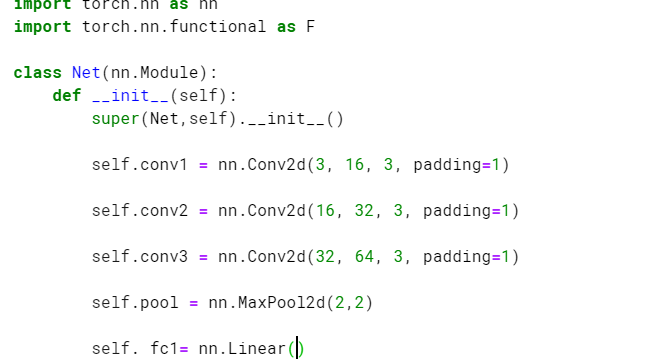

    # define the CNN architecture
    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            ## Define layers of a CNN
            self.conv1 = nn.Conv2d(3, 16, 3, padding=1)
            self.conv2 = nn.Conv2d(16, 32, 3, padding=1)
            self.conv3 = nn.Conv2d(32, 64, 3, padding=1)
            self.conv4 = nn.Conv2d(64, 128, 3, padding=1)
            self.conv5 = nn.Conv2d(128, 256, 3, padding=1)
            self.batch_norm1 = nn.BatchNorm2d(16)
            self.batch_norm2 = nn.BatchNorm2d(32)
            self.batch_norm3 = nn.BatchNorm2d(64)
            self.batch_norm4 = nn.BatchNorm2d(128)
            self.batch_norm5 = nn.BatchNorm2d(256)
            self.pool = nn.MaxPool2d(2)
            self.dropout = nn.Dropout(p=0.2)
            self.fc1 = nn.Linear(256*7*7, 1000, bias=True)
            self.fc2 = nn.Linear(1000, 133)

        def forward(self, x):
            ## Define forward behavior
            x = F.relu(self.conv1(x))
            x = self.batch_norm1(x)
            x = self.pool(x)
            x = F.relu(self.conv2(x))
            x = self.pool(x)
            x = self.batch_norm2(x)
            x = F.relu(self.conv3(x))
            x = self.pool(x)
            x = self.batch_norm3(x)
            x = F.relu(self.conv4(x))
            x = self.pool(x)
            x = self.batch_norm4(x)
            x = F.relu(self.conv5(x))
            x = self.pool(x)
            x = self.batch_norm5(x)
            x = x.view(-1, 256*7*7)
            x = self.dropout(x)
            x = F.relu(self.fc1(x))
            x = self.dropout(x)
            x = self.fc2(x)
            return x

Then you can use the library as follows:

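    import torch
    device = "cuda" if torch.cuda.is_available() else "cpu"
    model_scratch = Net().to(device)
    summary(model_scratch, (3, 224, 224), device=device)  # summary() prints the table itself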

This generates a TensorFlow-style summary showing the output shape after each layer. Something like:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1         [-1, 16, 224, 224]             448
           BatchNorm2d-2         [-1, 16, 224, 224]              32
             MaxPool2d-3         [-1, 16, 112, 112]               0
                Conv2d-4         [-1, 32, 112, 112]           4,640
             MaxPool2d-5           [-1, 32, 56, 56]               0
           BatchNorm2d-6           [-1, 32, 56, 56]              64
                Conv2d-7           [-1, 64, 56, 56]          18,496
             MaxPool2d-8           [-1, 64, 28, 28]               0
           BatchNorm2d-9           [-1, 64, 28, 28]             128
               Conv2d-10          [-1, 128, 28, 28]          73,856
            MaxPool2d-11          [-1, 128, 14, 14]               0
          BatchNorm2d-12          [-1, 128, 14, 14]             256
               Conv2d-13          [-1, 256, 14, 14]         295,168
            MaxPool2d-14            [-1, 256, 7, 7]               0
          BatchNorm2d-15            [-1, 256, 7, 7]             512
              Dropout-16                [-1, 12544]               0
               Linear-17                 [-1, 1000]      12,545,000
              Dropout-18                 [-1, 1000]               0
               Linear-19                  [-1, 133]         133,133
    ================================================================
    Total params: 13,071,733
    Trainable params: 13,071,733
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 0.57
    Forward/backward pass size (MB): 22.51
    Params size (MB): 49.86
    Estimated Total Size (MB): 72.95
    ----------------------------------------------------------------
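If you would rather not install an extra package, a similar per-layer shape listing can be put together with forward hooks. This is only a rough sketch of the idea, not how torchsummary is necessarily implemented; `record_output_shapes` is a hypothetical helper and the small `nn.Sequential` model is a stand-in for a real network.

```python
import torch
import torch.nn as nn


def record_output_shapes(model, input_shape):
    """Run one dummy batch and collect (layer name, output shape) for each leaf layer."""
    shapes = []
    hooks = []

    def record(module, inputs, output):
        shapes.append((module.__class__.__name__, tuple(output.shape)))

    for module in model.modules():
        if len(list(module.children())) == 0:  # leaf layers only, skip containers
            hooks.append(module.register_forward_hook(record))

    was_training = model.training
    model.eval()  # avoid updating batch-norm statistics during the dry run
    with torch.no_grad():
        model(torch.zeros(1, *input_shape))
    model.train(was_training)

    for hook in hooks:
        hook.remove()  # leave the model without any hooks attached
    return shapes


# Stand-in model for illustration
net = nn.Sequential(
    nn.Conv2d(3, 16, 3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(16 * 16 * 16, 10),
)
shapes = record_output_shapes(net, (3, 32, 32))
for name, shape in shapes:
    print(f"{name:<12}{shape}")
```

Layers applied through the functional API (for example `F.relu`) are not modules, so they do not show up in such a listing; the torchsummary output above omits them for the same reason.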

Hey @Arohan_Ajit, good to see you! It’s me, Deepak Mehra, do you remember? Haha, the world is just too small.

Haha hi @mehra-deepak, I actually thought it might be you. I saw your name while going through the PyTorch weekly summary mail and looked into what it was. Cheers mate!

So good to see you answer my query so beautifully. Only @nikronic had told me the solution; otherwise I had asked a lot of professionals and they all said it's manual, haha. You seem like a pro developer now. Good thing, such native vibes.

Lol, not really, I’m still exploring. Someone once told me, and I’m always looking to forward some tips and tricks to friends.