Hi,

I saw some topics like this one

My case is a little more complex, so I have simplified it:

I have data in which some samples share features, but not all of them do. No feature is common to all of the data.

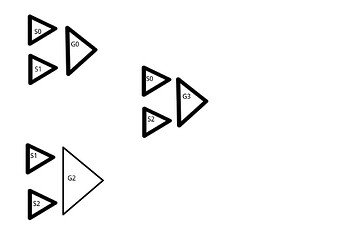

For example,

I have data of type A with features F0 … F6

data of type B with features F0…F3 and F7…F8

data of type C with features F6…F9

An illustration:

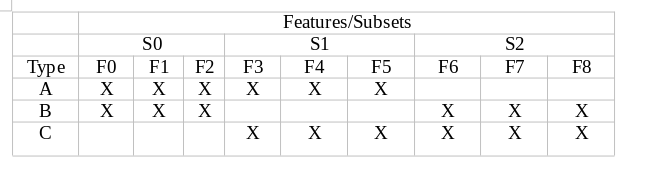

We can see that all the data have the same size. My idea is to build one small neural network for each subset of features (S0 = F0…F3, S1 = F4…F6, S2 = F7…F8) and one global neural network for each combination of two subsets Sn (in my small example, that means 3 global networks). Each global network takes the outputs of two small networks as its input.
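To make the idea concrete, here is a rough sketch of that layout. The class names (`SubsetNet`, `GlobalNet`), the hidden width, the embedding size, the output size, and the assumption that every batch is stored as a full-width tensor with columns F0…F8 are all placeholders for illustration and not part of my real setup.

```python
from itertools import combinations

import torch
import torch.nn as nn

# Column ranges of each feature subset: S0 = F0..F3, S1 = F4..F6, S2 = F7..F8.
SUBSET_SLICES = {0: slice(0, 4), 1: slice(4, 7), 2: slice(7, 9)}
EMBED_DIM = 8  # assumed output size of each small network
OUT_DIM = 1    # assumed size of the final prediction


class SubsetNet(nn.Module):
    """Small network that encodes one subset of features."""

    def __init__(self, in_features: int):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(in_features, 16), nn.ReLU(), nn.Linear(16, EMBED_DIM)
        )

    def forward(self, x):
        return self.net(x)


class GlobalNet(nn.Module):
    """Network that combines the outputs of two small networks."""

    def __init__(self):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(2 * EMBED_DIM, 16), nn.ReLU(), nn.Linear(16, OUT_DIM)
        )

    def forward(self, a, b):
        return self.net(torch.cat([a, b], dim=1))


# One small network per subset, one global network per pair of subsets.
subset_nets = nn.ModuleDict(
    {str(k): SubsetNet(s.stop - s.start) for k, s in SUBSET_SLICES.items()}
)
global_nets = nn.ModuleDict(
    {f"{i}_{j}": GlobalNet() for i, j in combinations(sorted(SUBSET_SLICES), 2)}
)


def forward_pair(x, i, j):
    """Encode subsets i and j of a full-width batch, then combine them."""
    a = subset_nets[str(i)](x[:, SUBSET_SLICES[i]])
    b = subset_nets[str(j)](x[:, SUBSET_SLICES[j]])
    return global_nets[f"{i}_{j}"](a, b)


x = torch.randn(5, 9)        # 5 samples, columns F0..F8
out = forward_pair(x, 0, 1)  # uses the S0 and S1 columns only
```

In this sketch a small network such as the one for S0 is reused by every global network that includes S0, which is how I would expect the learning to be shared between data types.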

An illustration:

As shown in the topic linked above, we can do

import torch

import torch.nn as nn

fc1 = nn.Linear(784, 500)

fc2 = nn.Linear(500, 10)

optimizer = torch.optim.SGD(list(fc1.parameters()) + list(fc2.parameters()), lr=0.01)

But if we do the same thing with my networks:

S_one = get_network_subset(num_subset = 0)

S_two = get_network_subset(num_subset = 1)

Global = get_network_global(nums_subsets = (0,1))

optimizer = torch.optim.SGD(list(S_one.parameters()) + list(S_two.parameters()) + list(Global.parameters()), lr=0.01)

Will PyTorch understand how to do the backpropagation (the global network has the “deepest” weights), or do I have to pass the parameters in a specific order?

In the end, I want the models to share what they learn, and I can't find any resources about that.
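A small experiment can check this. The sketch below uses plain `nn.Linear` layers as stand-ins for `S_one`, `S_two` and `Global` (the layer sizes and the lowercase variable names are assumptions, since `get_network_subset` and `get_network_global` are not shown here). It deliberately hands the global network's parameters to the optimizer first, then inspects which gradients `backward()` filled in and whether a small network was updated.

```python
import itertools

import torch
import torch.nn as nn

torch.manual_seed(0)

# Stand-ins for the real networks; the layer sizes are assumptions.
s_one = nn.Linear(4, 8)        # small network for S0 = F0..F3
s_two = nn.Linear(3, 8)        # small network for S1 = F4..F6
global_net = nn.Linear(16, 1)  # combines the two 8-dimensional outputs

# The optimizer takes one iterable of tensors, so the three parameter
# generators are chained together. The global network is listed first
# on purpose, i.e. not in forward-pass order.
params = itertools.chain(
    global_net.parameters(), s_one.parameters(), s_two.parameters()
)
optimizer = torch.optim.SGD(params, lr=0.01)

x = torch.randn(5, 7)       # 5 samples with features F0..F6
target = torch.randn(5, 1)  # random targets, only for the experiment

before = s_one.weight.detach().clone()

optimizer.zero_grad()
hidden = torch.cat([s_one(x[:, :4]), s_two(x[:, 4:])], dim=1)
loss = nn.functional.mse_loss(global_net(hidden), target)
loss.backward()   # gradients follow the graph built during the forward pass
optimizer.step()

all_have_grads = all(
    p.grad is not None
    for module in (s_one, s_two, global_net)
    for p in module.parameters()
)
s_one_updated = not torch.equal(before, s_one.weight)
print(all_have_grads, s_one_updated)
```

If both flags come out `True`, the gradients reached the small networks even though their parameters were listed last, which would suggest the order given to the optimizer does not matter here.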