Here’s my problem: I’d like to compute and serialize all the features produced by a CNN during training, that is, before training has finished. Say, every 5 epochs I switch the model to eval mode to compute the features, and afterwards I call `model.train()` to resume training. Is that the correct way to do it? I noticed an odd phenomenon when checking the distributions of the model parameters:
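For concreteness, the pattern described above can be sketched roughly as follows. This is a minimal sketch, not the actual training code: `TinyCNN`, `extract_features`, the random data, and the one-batch-per-epoch loop are all hypothetical placeholders.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class TinyCNN(nn.Module):
    """Hypothetical toy model with a BN layer; stands in for the real CNN."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),
            nn.BatchNorm2d(8),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.classifier = nn.Linear(8, 4)

    def forward(self, x):
        return self.classifier(self.features(x))


def extract_features(model, x):
    """Compute features in eval mode, then switch back to train mode."""
    model.eval()                 # BN uses its running statistics here
    with torch.no_grad():        # no autograd graph is built
        feats = model.features(x)
    model.train()                # resume training behaviour
    return feats


model = TinyCNN()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
criterion = nn.CrossEntropyLoss()

# Random placeholder data instead of a real DataLoader.
inputs = torch.randn(32, 3, 16, 16)
labels = torch.randint(0, 4, (32,))

saved_features = {}
num_epochs = 10
for epoch in range(1, num_epochs + 1):
    model.train()
    optimizer.zero_grad()
    loss = criterion(model(inputs), labels)
    loss.backward()
    optimizer.step()

    if epoch % 5 == 0:
        # In practice the tensor could be written out with torch.save(...).
        saved_features[epoch] = extract_features(model, inputs).cpu()
```

Here the features are only kept in a dictionary keyed by epoch; serializing them to disk is left out to keep the sketch short.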

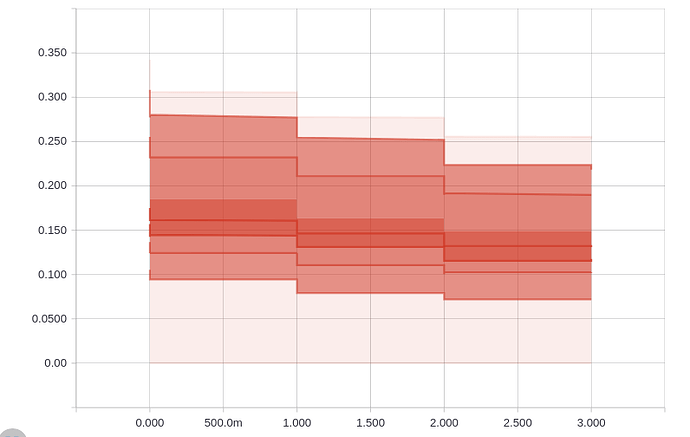

Above are the BN layer’s parameters during training. There is a cliff at batches 1000 and 2000, which is exactly where I switch the model to eval mode to compute features. Shouldn’t the curves be smooth?
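One way to narrow this down might be to check whether an eval-mode pass, taken by itself, modifies anything stored in a BN layer. The following is a minimal sketch on a standalone `nn.BatchNorm2d` with random input (both are hypothetical stand-ins, not the model from the plot). It compares every entry of the layer's `state_dict()` before and after an eval-mode forward pass under `torch.no_grad()`.

```python
import copy

import torch
import torch.nn as nn

torch.manual_seed(0)

bn = nn.BatchNorm2d(4)  # stand-in for one BN layer of the real model

# One train-mode forward pass so the running statistics move off their defaults.
bn.train()
bn(torch.randn(16, 4, 8, 8))

# Snapshot weight, bias, running_mean, running_var, num_batches_tracked.
before = copy.deepcopy(bn.state_dict())

# The eval-mode feature pass: no optimizer step, no gradient tracking.
bn.eval()
with torch.no_grad():
    bn(torch.randn(16, 4, 8, 8))
bn.train()

after = bn.state_dict()
unchanged = {name: torch.equal(before[name], after[name]) for name in before}
print(unchanged)
```

If every entry is reported as unchanged for the real model as well, the jump in the plot would have to come from something else that happens around the switch, rather than from the eval-mode pass.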

Any help would be appreciated!