Problem: I'm implementing a GNN in PyTorch 1.11 with CUDA 11.3. I tried to set all random seeds using the code below, but I still can't get the same results across different epochs. At first, the console reported that I was using torch_scatter_mean, which is not a deterministic operation. I then replaced the scatter_mean operation with a fully deterministic one, but the results are still not reproducible.

Platform: Windows 10

Training on: cuda:0

    seed_ = 42

    def seed_torch(seed):
        random.seed(seed)
        os.environ["PYTHONHASHSEED"] = str(seed)  # disable hash randomization so that experiments are reproducible
        os.environ["CUDA_LAUNCH_BLOCKING"] = "1"
        os.environ["CUBLAS_WORKSPACE_CONFIG"] = ":16:8"
        np.random.seed(seed)
        torch.manual_seed(seed)
        torch.cuda.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # if you are using multi-GPU.
        torch.backends.cudnn.benchmark = False
        torch.backends.cudnn.deterministic = True
        torch.use_deterministic_algorithms(True)

    seed_torch(seed_)
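To narrow down where two runs start to diverge, a bitwise comparison of the loss and gradients after a single step might help. The snippet below is only a minimal sketch of that idea: `run_once` is a hypothetical helper, and the `nn.Linear` model and random input are stand-ins for my real model and data. It runs on the CPU here and would need to be moved to `cuda:0` to exercise the GPU kernels.

```python
import torch
import torch.nn as nn


def run_once(seed):
    """Run one forward/backward pass from a fixed seed (hypothetical helper)."""
    torch.manual_seed(seed)
    model = nn.Linear(8, 4)      # stand-in for the real model (assumption)
    x = torch.randn(16, 8)       # stand-in for one input batch (assumption)
    loss = model(x).pow(2).mean()
    loss.backward()
    # Clone so the returned tensors do not alias anything reused later.
    return loss.detach().clone(), model.weight.grad.clone()


loss_a, grad_a = run_once(42)
loss_b, grad_b = run_once(42)

# torch.equal checks exact (bitwise) equality, not closeness within a tolerance.
same_loss = torch.equal(loss_a, loss_b)
same_grad = torch.equal(grad_a, grad_b)
print("loss identical:", same_loss, "| grad identical:", same_grad)
```

If the first step already differs, the source is likely in the model's forward or backward pass; if it only differs later, the data order or optimizer state would be the next things to compare.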

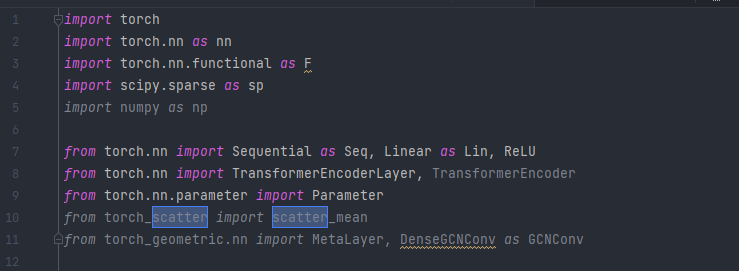

The packages I used:

    import time
    import os
    import numpy as np
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.utils.data as Data
    import random
    from sklearn.cluster import KMeans
    from torch_geometric.nn import MetaLayer
    from model import Model
    from dataset import trainDataset, valDataset, testDataset
    import argparse
    import matplotlib.pyplot as plt

One more question: is it possible that the DataLoader is not deterministic?

    train_loader = Data.DataLoader(train_dataset, batch_size=args.batch_size,
                                   shuffle=True, num_workers=0, pin_memory=True)
    val_loader = Data.DataLoader(val_dataset, batch_size=args.batch_size,
                                 shuffle=False, num_workers=0, pin_memory=True)
    test_loader = Data.DataLoader(test_dataset, batch_size=args.batch_size,
                                  shuffle=False, num_workers=0, pin_memory=True)
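Since only the training loader shuffles, its batch order depends on random state. One thing I could try is to give it its own seeded `torch.Generator` through the `generator` argument of `DataLoader`, so the shuffle order no longer depends on how much of the global RNG was consumed beforehand. This is a minimal sketch, not my actual training code: `make_loader`, `batch_order`, and `toy_dataset` are hypothetical names used only for illustration.

```python
import torch
import torch.utils.data as Data


def make_loader(dataset, batch_size, seed):
    """Build a shuffling DataLoader driven by its own seeded generator."""
    g = torch.Generator()
    g.manual_seed(seed)
    return Data.DataLoader(dataset, batch_size=batch_size, shuffle=True,
                           num_workers=0, generator=g)


def batch_order(loader):
    """Collect the sample values of every batch, in iteration order."""
    return [batch[0].tolist() for batch in loader]


# Toy stand-in for train_dataset (assumption, for illustration only).
toy_dataset = Data.TensorDataset(torch.arange(10))

order_a = batch_order(make_loader(toy_dataset, batch_size=4, seed=42))
order_b = batch_order(make_loader(toy_dataset, batch_size=4, seed=42))
print("same shuffle order:", order_a == order_b)
```

With `num_workers=0` there are no worker processes to seed; with more workers, a `worker_init_fn` that seeds `random` and NumPy in each worker would presumably be needed as well.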

I used a TransformerEncoderLayer, so I changed CUBLAS_WORKSPACE_CONFIG to :16:8: