I am using torch.distributed.rpc. I can configure RPC to use many threads via rpc_backend_options, but the threads do not seem to be scheduled onto the idle CPUs that I have.

To test this, I sent 1-4 asynchronous RPC calls to a server that has 80 CPUs.

Below is the code for reference.

    import os
    import time

    import numpy as np
    import torch
    import torch.nn as nn
    import torch.distributed.rpc as rpc
    from torch.multiprocessing import Process


    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.l0 = nn.Linear(2, 2)
            W = np.random.normal(0, 1, size=(2, 2)).astype(np.float32)
            self.l0.weight.data = torch.tensor(W, requires_grad=True)
            self.l1 = nn.Linear(2, 2)
            W = np.random.normal(0, 1, size=(2, 2)).astype(np.float32)
            self.l1.weight.data = torch.tensor(W, requires_grad=True)

        def forward(self, x):
            return self.l1(self.l0(x))


    def test(t):
        # Compute-intensive work that runs on the RPC server (Rank1).
        print("RPC called")
        for i in range(100000):
            t2 = t * 1.000001
        return t2


    def run(i):
        rpc.init_rpc("Rank" + str(i), rank=i, world_size=2)
        if i == 0:
            with torch.autograd.profiler.profile(True, False) as prof:
                net = Net()
                optimizer = torch.optim.SGD(net.parameters(), lr=0.1)
                input = torch.tensor([1.0, 2.0])

                # Send 1-4 asynchronous requests to Rank1.
                reqs = []
                reqs.append(rpc.rpc_async("Rank1", test, args=(input,)))
                reqs.append(rpc.rpc_async("Rank1", test, args=(input,)))
                reqs.append(rpc.rpc_async("Rank1", test, args=(input,)))
                reqs.append(rpc.rpc_async("Rank1", test, args=(input,)))
                #reqs.append(rpc.rpc_async("Rank1", test, args=(input,)))

                # Wait for every request to finish.
                for req in reqs:
                    input += req.wait()
                print("RPC Done")

                y = net(input)
                optimizer.zero_grad()
                y.sum().backward()
                optimizer.step()

            print(prof.key_averages().table(sort_by="cpu_time_total"))
            prof.export_chrome_trace("test.json")
        else:
            # Rank1 only serves incoming RPC requests.
            pass
        rpc.shutdown()


    if __name__ == "__main__":
        os.environ['MASTER_ADDR'] = "localhost"
        os.environ['MASTER_PORT'] = "29500"

        ps = []
        for i in [0, 1]:
            p = Process(target=run, args=(i,))
            p.start()
            ps.append(p)

        for p in ps:
            p.join()

As you can see, I am just doing some compute-intensive work on the server using RPC.

Below is the result from my profiler.

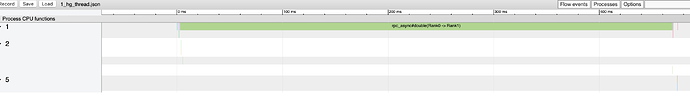

When I do 1 RPC call:

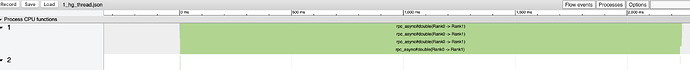

When I do 4 RPC calls:

The default RPC initialization creates 4 send_recv_threads, so it should be able to run my 4 RPC requests concurrently. However, as the profiles show, the time to finish the RPC requests grew almost linearly with the number of requests (from 460 ms for 1 request to 2200 ms for 4 requests), which suggests that they are using only one core and are not being processed in parallel (i.e., concurrent, but not parallel).

I know that Python threads (unlike processes) cannot execute in parallel on different cores. Is the same true of RPC threads: because they are threads, can they also not run in parallel on different cores?
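To make the thread-versus-process distinction concrete, here is a minimal standard-library sketch that is independent of torch.distributed.rpc. It assumes a standard CPython build with the GIL enabled; `busy_work` and `timed_map` are hypothetical helper names, and the workload is pure Python rather than tensor operations, so it only illustrates the general effect and does not confirm what happens inside the RPC threads.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def busy_work(n):
    """Pure-Python CPU-bound loop: returns the sum of i*i for i in range(n)."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def timed_map(executor_cls, n_tasks, n):
    """Run n_tasks copies of busy_work(n) on the executor; return (results, seconds)."""
    start = time.perf_counter()
    with executor_cls(max_workers=n_tasks) as pool:
        results = list(pool.map(busy_work, [n] * n_tasks))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    # Same four tasks, first on threads, then on processes.
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        _, seconds = timed_map(cls, n_tasks=4, n=2_000_000)
        print(f"{cls.__name__}: {seconds:.2f}s")
```

On a multicore machine I would expect the thread pool to take roughly as long as running the four tasks one after another, while the process pool should finish noticeably faster; the exact timings depend on the machine.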

Is there a way to run different received RPC requests on different cores? Or should I manually spawn processes on the receiving server side to run the requests in parallel and make use of my multicore server?
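The manual approach I have in mind would look roughly like the sketch below. It uses only the standard library, so plain lists stand in for tensors and a thread pool stands in for the RPC threads. `scale_repeatedly` and `handle_request` are hypothetical names; `handle_request` plays the role of the function that `rpc.rpc_async` would target. I have not verified this against torch.distributed.rpc itself.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor
from functools import partial


def scale_repeatedly(values, iterations):
    """CPU-bound stand-in for the `test` function: recompute values * 1.000001."""
    result = list(values)
    for _ in range(iterations):
        result = [v * 1.000001 for v in values]
    return result


def handle_request(pool, values, iterations=100_000):
    """Hypothetical request handler: offload the work to a worker process.

    The calling thread only blocks on the future, so other handler
    threads can submit their own work in the meantime.
    """
    return pool.submit(scale_repeatedly, values, iterations).result()


if __name__ == "__main__":
    # One long-lived process pool on the "server" side.
    with ProcessPoolExecutor(max_workers=4) as workers:
        # Four threads simulate four RPC threads receiving requests at once.
        with ThreadPoolExecutor(max_workers=4) as rpc_threads:
            handler = partial(handle_request, workers)
            outputs = list(rpc_threads.map(handler, [[1.0, 2.0]] * 4))
    print(len(outputs), "requests served")
```

With real tensors, the arguments and results would have to be passed between processes (for example via torch.multiprocessing), which adds overhead that this sketch does not measure.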

Thank you.