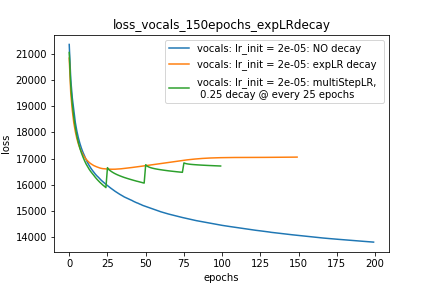

Which learning rate decay scheduler should I use with the Adam optimizer?

I'm getting very weird results with both the MultiStepLR and ExponentialLR decay schedulers.

    optimizer = torch.optim.Adam(lr=m_lr, amsgrad=True, ...........)

    # scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer=optimizer, milestones=[25, 50, 75], gamma=0.95)
    scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer=optimizer, gamma=0.25)

    for epoch in range(num_epochs):
        scheduler.step()
        running_loss = 0.0

        for i in range(num_train):
            train_input_tensor = ..........
            train_label_tensor = ..........

            optimizer.zero_grad()
            pred_label_tensor = model(train_input_tensor)
            loss = criterion(pred_label_tensor, train_label_tensor)
            loss.backward()
            optimizer.step()

            running_loss += loss.item()

        # Average training loss for this epoch
        loss_history[m_lr].append(running_loss/num_train)
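To make the two settings above easier to compare, here is a rough sketch in plain Python of the learning rate each schedule would produce per epoch. It assumes the documented closed forms (ExponentialLR multiplies the base rate by `gamma` on every step; MultiStepLR multiplies it by `gamma` once per milestone reached). The helper names `exponential_lr` and `multistep_lr` and the value `base_lr = 1e-3` are hypothetical stand-ins, since the actual `m_lr` is not given in the post.

```python
from bisect import bisect_right


def exponential_lr(base_lr, gamma, epoch):
    """Learning rate after `epoch` scheduler steps of exponential decay."""
    return base_lr * gamma ** epoch


def multistep_lr(base_lr, gamma, milestones, epoch):
    """Learning rate under step decay: one factor of gamma per milestone reached."""
    reached = bisect_right(sorted(milestones), epoch)
    return base_lr * gamma ** reached


if __name__ == "__main__":
    base_lr = 1e-3  # hypothetical stand-in for m_lr
    for epoch in (0, 1, 5, 10, 25, 50, 75):
        exp_lr = exponential_lr(base_lr, 0.25, epoch)
        step_lr = multistep_lr(base_lr, 0.95, [25, 50, 75], epoch)
        print(f"epoch {epoch:3d}  ExponentialLR: {exp_lr:.3e}  MultiStepLR: {step_lr:.3e}")
```

Under these assumptions the two schedules behave very differently. With `gamma=0.25`, ExponentialLR shrinks the rate by a factor of 0.25^10 (about 9.5e-7) within ten epochs, so training may effectively stall. With `gamma=0.95` and three milestones, MultiStepLR only ever reduces it to about 86% of the starting value, so it may look as if the scheduler does nothing. It is also worth checking the call order against the PyTorch version in use: since PyTorch 1.1.0, `scheduler.step()` is expected after `optimizer.step()`, typically at the end of each epoch, whereas the loop above calls it at the start.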

Any help would be appreciated.

Thanks