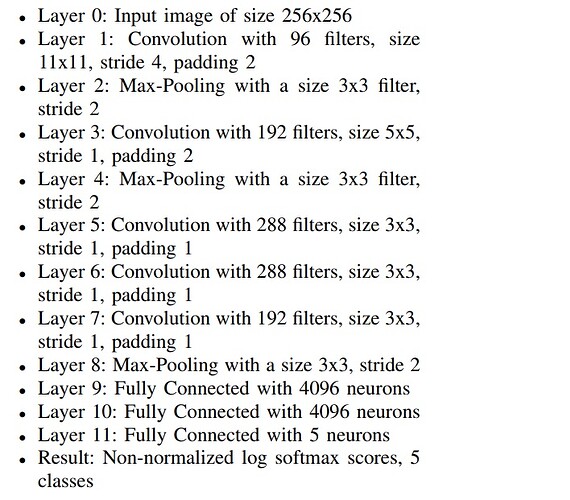

Model specs:

Input: RGB images.

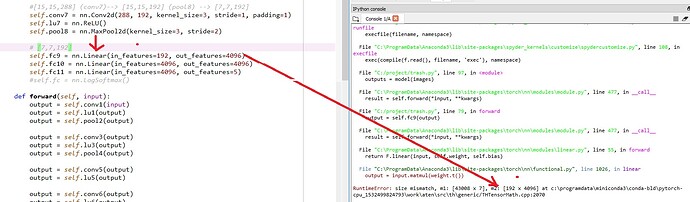

Here is the model I built:

```python
import torch
import torch.nn as nn


class Model(nn.Module):
    def __init__(self, num_classes=5):
        super(Model, self).__init__()
        # Feature extractor; the input is an RGB image, so 3 input channels
        self.conv1 = nn.Conv2d(3, 96, kernel_size=11, stride=4, padding=2)
        self.lu1 = nn.ReLU()
        self.pool2 = nn.MaxPool2d(kernel_size=3, stride=2)
        self.conv3 = nn.Conv2d(96, 192, kernel_size=5, padding=2, stride=1)
        self.lu3 = nn.ReLU()
        self.pool4 = nn.MaxPool2d(kernel_size=3, stride=2)
        self.conv5 = nn.Conv2d(192, 288, kernel_size=3, stride=1, padding=1)
        self.lu5 = nn.ReLU()
        self.conv6 = nn.Conv2d(288, 288, kernel_size=3, stride=1, padding=1)
        self.lu6 = nn.ReLU()
        self.conv7 = nn.Conv2d(288, 192, kernel_size=3, stride=1, padding=1)
        self.lu7 = nn.ReLU()
        self.pool8 = nn.MaxPool2d(kernel_size=3, stride=2)
        # Classifier. fc9 receives the flattened feature map (channels * height * width),
        # not just the 192 channels; 192 * 6 * 6 assumes a 224x224 input.
        self.fc9 = nn.Linear(in_features=192 * 6 * 6, out_features=4096)
        self.fc10 = nn.Linear(in_features=4096, out_features=4096)
        self.fc11 = nn.Linear(in_features=4096, out_features=num_classes)
        # dim=1 applies log-softmax over the class dimension of a (batch, classes) tensor
        self.fc = nn.LogSoftmax(dim=1)

    def forward(self, input):
        output = self.conv1(input)
        output = self.lu1(output)
        output = self.pool2(output)
        output = self.conv3(output)
        output = self.lu3(output)
        output = self.pool4(output)
        output = self.conv5(output)
        output = self.lu5(output)
        output = self.conv6(output)
        output = self.lu6(output)
        output = self.conv7(output)
        output = self.lu7(output)
        output = self.pool8(output)
        # Flatten (batch, 192, H, W) to (batch, 192 * H * W) before the linear layers;
        # for a 224x224 input, H = W = 6 here
        output = torch.flatten(output, 1)
        output = self.fc9(output)
        output = self.fc10(output)
        output = self.fc11(output)
        output = self.fc(output)
        return output
```
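The fully connected layer `fc9` needs the flattened size of the last feature map, not just its channel count (192). The small pure-Python sketch below computes that size layer by layer. It assumes square inputs and PyTorch's default output-size formula for `Conv2d`/`MaxPool2d` (dilation 1, `ceil_mode=False`), i.e. `(size + 2 * padding - kernel) // stride + 1`; `out_size`, `LAYERS` and `flattened_features` are hypothetical helper names, not part of PyTorch.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a Conv2d/MaxPool2d layer (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding) for every layer that can change the spatial size,
# taken from the Conv2d/MaxPool2d arguments in the model.
LAYERS = [
    ("conv1", 11, 4, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 5, 1, 2),
    ("pool4", 3, 2, 0),
    ("conv5", 3, 1, 1),
    ("conv6", 3, 1, 1),
    ("conv7", 3, 1, 1),
    ("pool8", 3, 2, 0),
]


def flattened_features(input_size, out_channels=192):
    """Number of features after flattening the output of pool8."""
    size = input_size
    for name, kernel, stride, padding in LAYERS:
        size = out_size(size, kernel, stride, padding)
        print(f"{name}: {size}x{size}")
    return out_channels * size * size


# Prints 55, 27, 27, 13, 13, 13, 13, 6 per layer, then 6912 (= 192 * 6 * 6)
print(flattened_features(224))
```

If the input size in the spec is different, calling `flattened_features` with that size gives the value to use as `in_features` for `fc9`.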

I’m a newbie, so I don’t know whether this is correct. Please help me. Thank you!