I have been trying to iterate through the items in a DataLoader for my model, but for some reason an error keeps showing up. After debugging, I realized that the error occurs while iterating over the dataset.

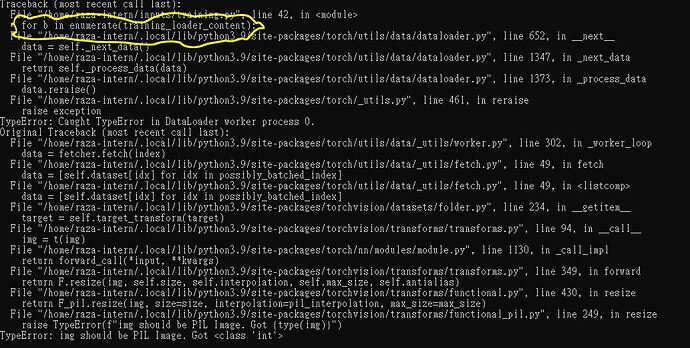

Here’s the error:

Here’s the first part of the code, which includes the DataLoader as well as the iteration (for debugging purposes):

```python
from __future__ import print_function

import torch.utils
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
import torchvision
from PIL import Image
import matplotlib.pyplot as plt
import torchvision.transforms as transforms
import torchvision.models as models
import copy

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# desired size of the output image
imsize = 128 if torch.cuda.is_available() else 128  # use small size if no gpu

#transforms.Grayscale(num_output_channels=1),
transform = transforms.Compose(
    [transforms.Resize((128,128)),
     transforms.ToTensor(),
     transforms.Normalize((0.5,), (0.5,))])

target_transform = transforms.Compose(
    #transforms.ToPILImage(),
    [transforms.Resize((128,128)),
     transforms.ToTensor(),
     transforms.Normalize((0.5,), (0.5,))])

#training stuff
training_set_style = torchvision.datasets.ImageFolder('./style', transform=transform, target_transform=target_transform)
training_set_content = torchvision.datasets.ImageFolder('./content', transform=transform, target_transform=target_transform)

training_loader_style = torch.utils.data.DataLoader(training_set_style, batch_size=4, shuffle=True, num_workers=2)
training_loader_content = torch.utils.data.DataLoader(training_set_content, batch_size=4, shuffle=True, num_workers=2)

for b in enumerate(training_loader_content):
    print(b)
```

Mainly, I want to iterate through the DataLoader in this loop:

```python
if name in content_layers:
    for batch in training_loader_content:
        # add content loss:
        content_image = batch[0].to(device)
        print(content_image.shape)
        target = model(content_image).detach()
        content_loss = ContentLoss(target)
        model.add_module("content_loss_{}".format(i), content_loss)
        content_losses.append(content_loss)
```
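Separately, in this loop every batch registers a module under the same name `content_loss_{i}`, so `add_module` replaces the previous module while `content_losses` keeps growing. If the intent, as in the PyTorch neural style transfer tutorial, is one content target per content layer, a minimal sketch is to take a single batch with `next(iter(...))`. `ContentLoss` below is a stand-in modeled on that tutorial's class (an assumption, not necessarily the asker's version), and `add_content_loss` is a hypothetical helper whose `layer_index` plays the role of the asker's `i`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ContentLoss(nn.Module):
    """Stand-in modeled on the style-transfer tutorial's ContentLoss (assumption)."""

    def __init__(self, target):
        super().__init__()
        # Store a detached copy so the target is treated as a constant.
        self.target = target.detach()

    def forward(self, input):
        # Record the loss and pass the input through unchanged.
        self.loss = F.mse_loss(input, self.target)
        return input


def add_content_loss(model, layer_index, loader, device, content_losses):
    # Hypothetical helper: use one batch instead of looping over the loader.
    # batch[0] holds the images, batch[1] the class indices.
    images = next(iter(loader))[0].to(device)
    target = model(images).detach()
    content_loss = ContentLoss(target)
    # Register exactly one content-loss module for this layer.
    model.add_module("content_loss_{}".format(layer_index), content_loss)
    content_losses.append(content_loss)
    return content_loss
```

Note that the stored target keeps the batch dimension of the loader (4 here), so the image being optimized later would need a matching shape for `F.mse_loss` to compare like with like.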

Can somebody help me out?