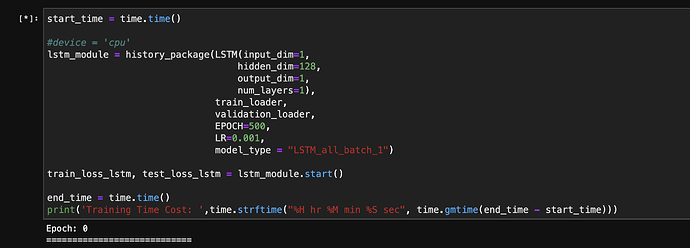

Hello, below is a model LSTM. I tried to run the training process, but it kept stuck at EPOCH = 0. This problem doesn’t exist when I smaller down the size of the training data.

Now the size of each phase of data:

- training data: torch.Size([1426233, 110, 1]) torch.Size([1426233, 1])

- validation data: torch.Size([250571, 110, 1]) torch.Size([250571, 1])

- testing data: torch.Size([190521, 110, 1]) torch.Size([190521, 1])

Below is the model architecture of LSTM:

# Build model

#####################

input_dim = 1

hidden_dim = 128

num_layers = 1

output_dim = 1

# Here we define our model as a class

class LSTM(nn.Module):

def __init__(self, input_dim, hidden_dim, num_layers, output_dim):

super(LSTM, self).__init__()

self.hidden_dim = hidden_dim

self.num_layers = num_layers

self.lstm = nn.LSTM(input_dim, hidden_dim, num_layers, batch_first=True)

self.fc = nn.Linear(hidden_dim, output_dim)

def forward(self, x):

out, _ = self.lstm(x, None)

out = self.fc(out[:, -1, :])

return out

and the training/testing class:

class history_package():

def __init__(self, neural_net, train_loader, test_loader, EPOCH, LR, model_type):

self.net = neural_net

self.optimizer = torch.optim.Adam(neural_net.parameters(), lr = LR)

self.criterion = nn.MSELoss()

self.train_loader = train_loader

self.test_loader = test_loader

self.EPOCH_ = EPOCH

self.LR_ = LR

self.net = self.net.to(device)

self.model_type = model_type

if device == 'cuda':

torch.backends.cudnn.benchmark = True

def start(self):

train_history_loss = []

test_history_loss = []

for epoch in range(self.EPOCH_):

print('Epoch:', epoch)

print("============================")

train_loss = self.train()

test_loss = self.test()

train_history_loss.append(train_loss)

test_history_loss.append(test_loss)

return train_history_loss, test_history_loss

def train(self):

self.net.train()

train_loss = 0

for step, (batch_X, batch_y) in enumerate(self.train_loader):

batch_X, batch_y = batch_X.to(device), batch_y.to(device)

self.optimizer.zero_grad()

outputs = self.net(batch_X)

#print(outputs.shape)

#print(batch_y.shape)

loss = self.criterion(outputs, batch_y)

loss.backward()

self.optimizer.step()

train_loss += loss.item()

print('【Training】Loss: %.3f' % (train_loss))

# save the model

if self.model_type == "RNN":

torch.save(self.net.state_dict(), 'RNN_model.pth')

if self.model_type == "LSTM":

torch.save(self.net.state_dict(), 'LSTM_model.pth')

if self.model_type == "GRU":

torch.save(self.net.state_dict(), 'GRU_model.pth')

if self.model_type == "LSTM_all_batch_1":

torch.save(self.net.state_dict(), 'LSTM_all_batch_1.pth')

return train_loss

def test(self):

self.net.eval()

test_loss = 0

with torch.no_grad():

for step, (batch_X, batch_y) in enumerate(self.test_loader):

batch_X, batch_y = batch_X.to(device), batch_y.to(device)

outputs = self.net(batch_X)

loss = self.criterion(outputs, batch_y)

test_loss += loss.item()

print('【Validation】Loss: %.3f' % (test_loss))

return test_loss

Should I increase the batch size in the training / validation loader in order to solve this problem? Now I use batch size = 1 for both training and validation loader. Or should I adjust the model architecture in order to accommodate large input size of data?