```python
import random

import torch
from torch import nn
from tqdm import tqdm

torch.manual_seed(42)


class Practice_1_3(nn.Module):
    def __init__(self):
        super().__init__()
        self.first_linear = nn.Linear(2, 2)
        self.second_linear = nn.Linear(2, 1)
        # torch.nn.init.constant_(self.second_linear.bias, -0.5)
        self.activation = nn.Sigmoid()

    def forward(self, x):
        Z1 = self.first_linear(x)
        A1 = self.activation(Z1)
        Z2 = self.second_linear(A1)
        A2 = self.activation(Z2)
        return A2


def train(model, x, y, num_samples):
    loss_fun = nn.BCELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
    # Use the GPU when one is present, otherwise fall back to the CPU.
    device = "cuda:0" if torch.cuda.is_available() else "cpu"
    model.train()
    model.to(device)
    x = x.to(device)
    y = y.to(device)
    for k in tqdm(range(5000)):
        output = model(x)
        model.zero_grad()
        print(output[:100])
        # Threshold the sigmoid outputs at 0.5 to get class predictions.
        predict = [1 if output[i] > 0.5 else 0 for i in range(num_samples)]
        total_loss = loss_fun(output, y)
        total_loss.backward()
        optimizer.step()
    return model


def make_data(num_samples):
    # Making data: the label is 1 when x1 lies outside [-5, 5]; x2 is ignored.
    x1_train = []
    x2_train = []
    y_train = []
    for i in range(num_samples):
        x1_train.append(random.uniform(-10, 10))
        x2_train.append(random.uniform(-10, 10))
        if x1_train[i] < -5 or x1_train[i] > 5:
            y_train.append(1)
        else:
            y_train.append(0)
    x1_train = torch.tensor(x1_train).unsqueeze(dim=-1)
    x2_train = torch.tensor(x2_train).unsqueeze(dim=-1)
    x_train = torch.concat((x1_train, x2_train), dim=1)
    y_train = torch.tensor(y_train, dtype=torch.float32).unsqueeze(dim=-1)
    return x_train, y_train


def main():
    num_train_samples = 10000
    x_train, y_train = make_data(num_train_samples)
    model = Practice_1_3()
    best_model = train(model, x_train, y_train, num_train_samples)


if __name__ == "__main__":
    main()
```

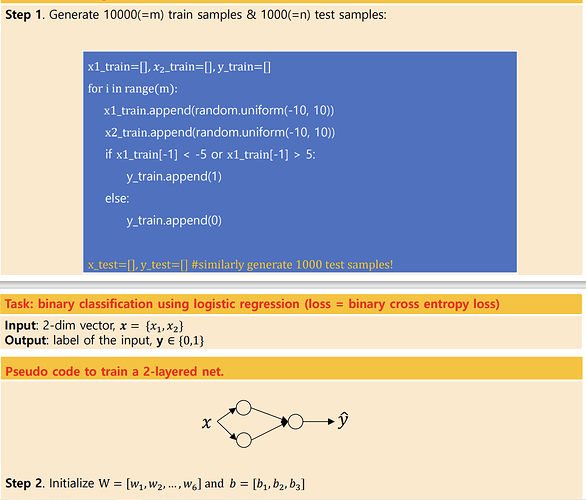

This is very simple code for the network shown in the picture.

I implemented it with PyTorch.

But, for some reason, this code cannot train the model properly.

The accuracy score is still 0.5 after training finishes.

I printed some values and found that the outputs of the final sigmoid (A2 in my code) are all pushed toward 1, so every prediction ends up as 1 and the accuracy score stays at 0.5.
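
To make the 0.5 figure concrete: with the labeling rule in `make_data`, roughly half of the samples get label 1, so a model that always predicts 1 scores about 0.5. Here is a minimal sketch in plain Python. The helper names `make_labels` and `accuracy` are only for illustration and are not part of my training code, and the seed is arbitrary.

```python
import random


def make_labels(num_samples, seed=0):
    """Draw x1 uniformly from [-10, 10]; label 1 when x1 < -5 or x1 > 5."""
    rng = random.Random(seed)  # arbitrary seed, only for reproducibility
    labels = []
    for _ in range(num_samples):
        x1 = rng.uniform(-10, 10)
        labels.append(1 if (x1 < -5 or x1 > 5) else 0)
    return labels


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


labels = make_labels(10000)
all_ones = [1] * len(labels)  # what thresholding at 0.5 gives when every output is near 1

print(f"fraction of positive labels: {sum(labels) / len(labels):.3f}")
print(f"accuracy of always predicting 1: {accuracy(all_ones, labels):.3f}")
```

So an accuracy of 0.5 here most likely means the model has collapsed to a single class, not that it has learned half of the rule.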

My lecturer told me that this problem can be solved with just this simple model.
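
As a sanity check on that claim, a 2-2-1 sigmoid network can at least represent the rule. The sketch below uses plain Python with weights picked by hand. They are assumptions for illustration only: they are not learned and not taken from my model, and they say nothing about whether SGD will actually find them.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked (not trained) parameters for a 2-2-1 sigmoid network.
W1 = [[10.0, 0.0],    # hidden unit 1 switches on when x1 > 5
      [-10.0, 0.0]]   # hidden unit 2 switches on when x1 < -5
B1 = [-50.0, -50.0]
W2 = [20.0, 20.0]     # output switches on when either hidden unit is on
B2 = -10.0


def forward(x1, x2):
    hidden = [sigmoid(W1[j][0] * x1 + W1[j][1] * x2 + B1[j]) for j in range(2)]
    return sigmoid(W2[0] * hidden[0] + W2[1] * hidden[1] + B2)


def predict(x1, x2):
    return 1 if forward(x1, x2) > 0.5 else 0


# Same sampling and labeling rule as make_data, with an arbitrary seed.
rng = random.Random(0)
samples = [(rng.uniform(-10, 10), rng.uniform(-10, 10)) for _ in range(10000)]
labels = [1 if (x1 < -5 or x1 > 5) else 0 for x1, _ in samples]

correct = sum(predict(x1, x2) == t for (x1, x2), t in zip(samples, labels))
accuracy = correct / len(samples)
print(f"accuracy with hand-picked weights: {accuracy:.3f}")
```

Each hidden unit handles one side of the interval and the output unit combines them, so the architecture itself does not seem to be the limiting factor.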

I've spent many hours trying to fix it, but I failed.

Does anyone know why this model cannot be trained?