Hello, I am a student, working on an extra project that my professor gave me which is to convert the pytracking framework tomp101 algorithm to TensorRT.

Now I have successfully converted it to ONNX, but when converting to TensorRT, I get a warning that some int64 are clamped to int32. When running inference on Tensorrt, the accuracy is terrible.

When running my model through:

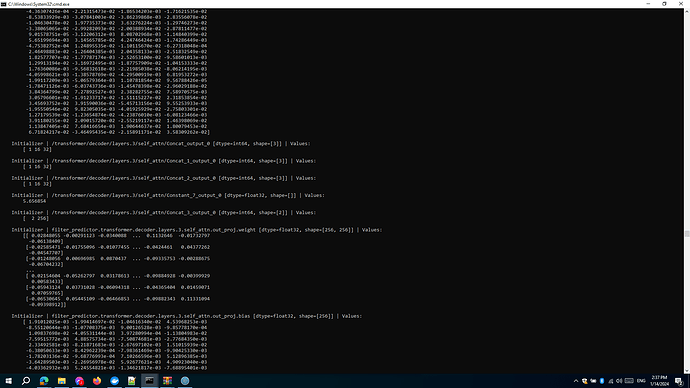

polygraphy inspect model tomp101_head_latest3.onnx --show layers attrs weights

I see that half the model is in int64, so this explains why clamping produces such low accuracy

Anybody with more experience, can you please tell me how should I go about this issue? The good thing is that the pytracking framework has made it easy to retrain the model I we decided to do so.

So do I just retrain the whole model and make sure that int32 is used instead?

As far as I can see all the weights are in float32, but other values, which I don’t know are int64

like I mentioned, the model is pretty large, and as I can see a lot of it uses int64, so do I have to modify the pytorch code line by line, or can everything be done more easily?

If any more information, or the program code is required, please let me know.

THANK YOU!!!

P.S. If I try to run the model in onnx runtime, I get this error:

onnxruntime.capi.onnxruntime_pybind11_state.RuntimeException: [ONNXRuntimeError] : 6 : RUNTIME_EXCEPTION : Non-zero status code returned while running Reshape node. Name:'/Reshape_7' Status Message: /onnxruntime_src/onnxruntime/core/providers/cpu/tensor/reshape_helper.h:28 onnxruntime::ReshapeHelper::ReshapeHelper(const onnxruntime::TensorShape&, onnxruntime::TensorShapeVector&, bool) i < input_shape.NumDimensions() was false. The dimension with value zero exceeds the dimension size of the input tensor

As I understand (correct me if I’m wrong) a tensor of size 0 somehow gets created here, maybe this is bad? Or for TensorRT this doesn’t matter?