I am implementing an active learning object detection pipeline with PyTorch inside a Jupyter notebook. I am using Faster R-CNN, COCO annotations, an SGD optimizer, and GPU training.

To check for determinism, I run one epoch of training twice and compare the losses. The two runs end with different losses. The loss after the first step is always the same, so initialization is not the problem.
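The comparison described above can be sketched as follows. This is only a minimal illustration, assuming the per-step losses of each run have been collected into plain Python lists; `first_divergence`, `run_a`, and `run_b` are hypothetical names, and the numbers are made up rather than taken from my training log.

```python
from typing import Optional, Sequence


def first_divergence(
    losses_a: Sequence[float], losses_b: Sequence[float]
) -> Optional[int]:
    """Return the index of the first step whose losses differ, or None if all match."""
    for step, (a, b) in enumerate(zip(losses_a, losses_b)):
        # Exact comparison on purpose: the goal is bitwise-identical runs.
        if a != b:
            return step
    # One run logged more steps than the other: they diverge where the shorter ends.
    if len(losses_a) != len(losses_b):
        return min(len(losses_a), len(losses_b))
    return None


# Hypothetical values, for illustration only (not my real training log).
run_a = [1.25, 0.98, 0.91]
run_b = [1.25, 0.98, 0.93]
print(first_divergence(run_a, run_b))
```

Knowing the first step at which the runs differ narrows the search to whatever happens between the first forward pass and that step.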

What I have already tried:

- I made sure the images are fed in the same order

- Restarted the Jupyter kernel between training runs

- batch_size = 1, num_workers = 1, augmentation disabled

- CPU training is deterministic (!)

- The following seeds are set:

  - `seed_number = 2`

  - `torch.backends.cudnn.deterministic = True`

  - `torch.backends.cudnn.benchmark = False`

  - `random.seed(seed_number)`

  - `torch.manual_seed(seed_number)`

  - `torch.cuda.manual_seed(seed_number)`

  - `np.random.seed(seed_number)`

  - `os.environ['PYTHONHASHSEED'] = str(seed_number)`
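For reference, the settings listed above can be gathered into a single helper, roughly as in the sketch below. The function name `seed_everything` and the `strict` flag are hypothetical. The `strict` branch is not part of what I listed above: it assumes a PyTorch version that provides `torch.use_deterministic_algorithms`, and I include it only as a possible further check.

```python
import os
import random

import numpy as np
import torch


def seed_everything(seed_number: int = 2, strict: bool = False) -> None:
    """Apply the seeds and cuDNN flags from the list above in one place."""
    # Only affects Python processes started after this point (e.g. subprocesses);
    # hashing in the already-running interpreter is not changed.
    os.environ["PYTHONHASHSEED"] = str(seed_number)

    random.seed(seed_number)
    np.random.seed(seed_number)
    torch.manual_seed(seed_number)
    # Silently ignored when CUDA is not available.
    torch.cuda.manual_seed(seed_number)

    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False

    if strict:
        # Assumption, not in the original list: ask PyTorch to raise an error
        # for operations that have no deterministic implementation.
        # cuBLAS needs this variable set before it is first used on the GPU.
        os.environ["CUBLAS_WORKSPACE_CONFIG"] = ":4096:8"
        torch.use_deterministic_algorithms(True)


seed_everything(2)
```

With `strict=True`, an operation that lacks a deterministic implementation should raise a `RuntimeError` instead of silently producing run-to-run differences, which might help to locate the source of the divergence.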

Here is a link to the primary code for the training:

my code inside a colab

(It is not functional, since I just copied it out of my local Jupyter notebook, but it shows what I am trying to do.)

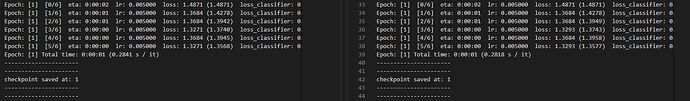

The training logs for two identical runs look like this:

Please let me know if any additional information is needed.