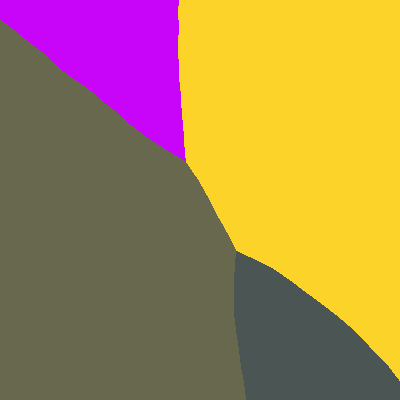

Hey. So as part of a bigger project, I’m trying to train a network to estimate a function that takes as input an (x,y) pair, and returns the class of that pair (i,e., I’m overfitting my network to be an image). I’m talking about simple images, stuff like:

However, the network seems to train extremely slowly. After seeing around 2000000 (x,y) pairs, it outputs stuff like

Any idea why? This also shows in the loss, which changes very slowly. The essential part of my code looks like this:

```python
import torch
import torch.nn.functional as F


class MLPClassifier(torch.nn.Module):
    def __init__(self, input_size: int, output_size: int):
        super(MLPClassifier, self).__init__()
        self.input_size = input_size
        self.output_size = output_size
        # network_config comes from elsewhere in the project (not shown)
        self.config = network_config
        # 8 hidden layers of width 256, kept in a plain Python list
        self.hidden_layers = [torch.nn.Linear(input_size, 256)] + [
            torch.nn.Linear(256, 256) for i in range(7)
        ]
        self.predict = torch.nn.Linear(256, output_size)

    def forward(self, x):
        for layer in self.hidden_layers:
            x = F.relu(layer(x))
        x = self.predict(x)  # linear output
        return x
```
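One thing that may be worth checking in the class above: `hidden_layers` is a plain Python list, and `torch.nn.Module` only registers submodules that are assigned directly as attributes or held in a container such as `torch.nn.ModuleList`. If that is what is happening here, `network.parameters()` would return only the weights of `predict`, and the optimizer would never update the hidden layers. Below is a minimal sketch to compare the two cases. `ListMLP`, `ModuleListMLP` and `count_parameter_tensors` are hypothetical names, and the layer sizes are copied from the code above.

```python
import torch


class ListMLP(torch.nn.Module):
    """Hidden layers kept in a plain Python list (as in the code above)."""

    def __init__(self, input_size: int, output_size: int):
        super().__init__()
        self.hidden_layers = [torch.nn.Linear(input_size, 256)] + [
            torch.nn.Linear(256, 256) for _ in range(7)
        ]
        self.predict = torch.nn.Linear(256, output_size)


class ModuleListMLP(torch.nn.Module):
    """Same layers, wrapped in torch.nn.ModuleList so they get registered."""

    def __init__(self, input_size: int, output_size: int):
        super().__init__()
        self.hidden_layers = torch.nn.ModuleList(
            [torch.nn.Linear(input_size, 256)]
            + [torch.nn.Linear(256, 256) for _ in range(7)]
        )
        self.predict = torch.nn.Linear(256, output_size)


def count_parameter_tensors(module: torch.nn.Module) -> int:
    """Number of tensors that module.parameters() would hand to an optimizer."""
    return len(list(module.parameters()))


list_count = count_parameter_tensors(ListMLP(2, 20))
module_list_count = count_parameter_tensors(ModuleListMLP(2, 20))
print(list_count, module_list_count)
```

If the first number is much smaller than the second, the hidden layers are not being trained, and only the final linear layer is learning on top of fixed random features.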

```python
import torch
import tqdm


def train(network, optimizer, loss_function, data_generator, embedder, config, output_dir):
    iterations = config['iterations']
    batch_size = config['network_config']['batch_size']
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    network.train()
    batch_losses = []  # loss value of every iteration
    for iteration in tqdm.tqdm(range(iterations)):
        x, y = data_generator.get_batch(batch_size)
        x, y = torch.tensor(x.astype('float32')), torch.tensor(y.astype('float32'))
        x, y = x.to(device), y.to(device)
        optimizer.zero_grad()
        prediction = network(x)
        loss = loss_function(prediction, y)
        loss.backward()
        optimizer.step()
        batch_losses.append(loss.item())
    return batch_losses
```

```python
def run_test(config, output_dir):
    data_generator = data.DataGenerator(config['problem_configuration'])
    network = model.MLPClassifier(input_size=2, output_size=20)
    loss_function = torch.nn.MSELoss()
    optimizer = torch.optim.SGD(network.parameters(), lr=0.2, momentum=0.95)
    return train(network, optimizer, loss_function, data_generator, embedder, config, output_dir)
```
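`data.DataGenerator` is not shown above. For anyone trying to reproduce the setup, here is a hypothetical stand-in. It assumes the image is a 2D array of integer class labels (at least 2x2), that coordinates are scaled to [0, 1], and that targets are one-hot vectors, which is what `MSELoss` with `output_size=20` would need. The name `ImageBatchSampler` and all of these choices are assumptions for illustration, not the actual implementation.

```python
import numpy as np


class ImageBatchSampler:
    """Hypothetical stand-in for data.DataGenerator (not the original code)."""

    def __init__(self, label_image: np.ndarray, num_classes: int, seed: int = 0):
        self.label_image = label_image  # shape (H, W), one integer class per pixel
        self.num_classes = num_classes
        self.rng = np.random.default_rng(seed)

    def get_batch(self, batch_size: int):
        height, width = self.label_image.shape
        # Sample random pixel positions
        rows = self.rng.integers(0, height, size=batch_size)
        cols = self.rng.integers(0, width, size=batch_size)
        # Inputs: (x, y) coordinates scaled to [0, 1]
        xy = np.stack([cols / (width - 1), rows / (height - 1)], axis=1)
        # Targets: one-hot encoding of the class at each sampled pixel
        one_hot = np.eye(self.num_classes)[self.label_image[rows, cols]]
        return xy, one_hot


# Toy 4x4 "image" with two classes: left half is class 0, right half is class 1
toy_image = np.zeros((4, 4), dtype=np.int64)
toy_image[:, 2:] = 1
sampler = ImageBatchSampler(toy_image, num_classes=2)
x_batch, y_batch = sampler.get_batch(8)
print(x_batch.shape, y_batch.shape)
```

The returned arrays are NumPy arrays, so they fit the `x.astype('float32')` conversion in `train` unchanged.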

Any help will be much appreciated, thanks!