I am training with PyTorch, but I can't use my computer's full performance.

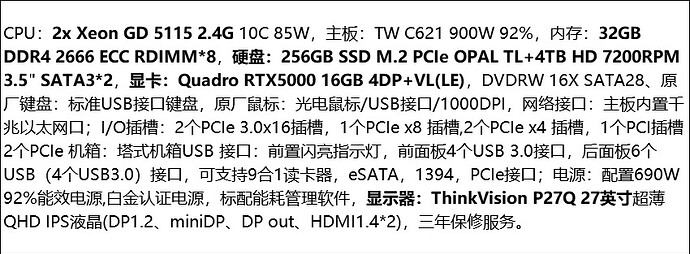

My computer configuration is:

I am using PyTorch to test a neural network. My input and output images are 688×688×1.

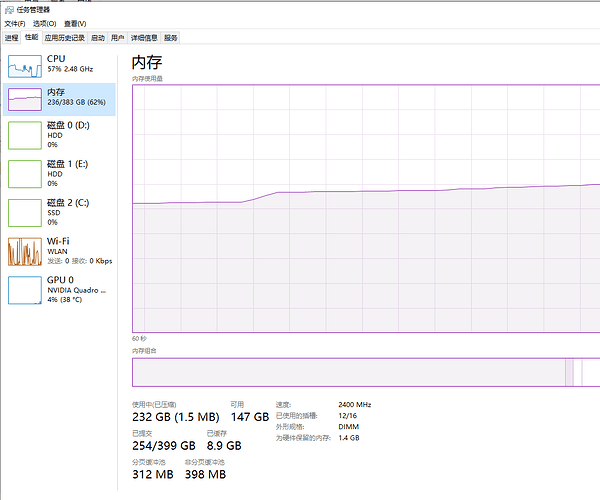

When I train on the CPU, it seems to use multiple CPU cores. The batch size can be set as high as 128.

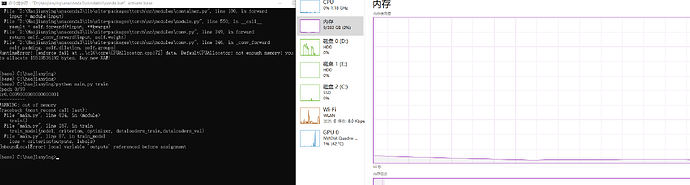

But when I train on the GPU, the batch size can only be set to 4. With a larger batch size I get an "out of memory" error. It also seems that only one CPU core is used.
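To put the batch sizes in perspective, here is a rough sketch of how much memory the input batch alone would take. It assumes the image size above means 688×688×1 and that the tensors are float32; both are assumptions, and `batch_bytes` is just a helper name made up for this estimate. It counts only the inputs, not the model's activations or gradients, which typically take far more memory during training.

```python
# Rough size of one input batch in memory (inputs only, not activations).
# Assumptions: 688 x 688 x 1 images stored as float32 (4 bytes per value).
HEIGHT, WIDTH, CHANNELS = 688, 688, 1
BYTES_PER_VALUE = 4  # float32


def batch_bytes(batch_size: int) -> int:
    """Bytes needed to hold one batch of input images."""
    return batch_size * CHANNELS * HEIGHT * WIDTH * BYTES_PER_VALUE


for batch_size in (4, 128):
    mib = batch_bytes(batch_size) / 2**20
    print(f"batch_size={batch_size}: {mib:.1f} MiB")
```

Under these assumptions the inputs come to only a few MiB at batch size 4 and a few hundred MiB at batch size 128, so the out-of-memory error most likely comes from what the network stores during training rather than from the input tensors themselves.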

I use this code to use CUDA:

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

inputs = x.to(device)

labels = y.to(device)

model = Unet(1, 1).to(device)

What should I do to use all of the CPU cores when training with CUDA? Do I need some extra setup?