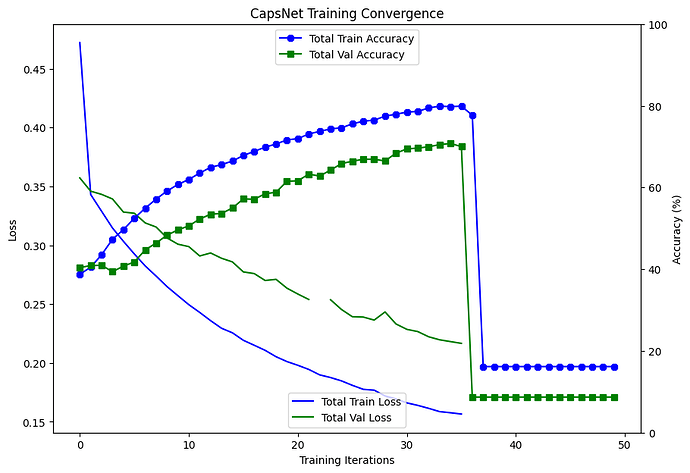

Hi @ptrblck, would you mind checking this code on my GitHub? It is a Capsule Network with four output labels. Partway through training I get NaN values in the loss, or the model outputs all zeros. I don't know whether this is a vanishing or exploding gradient problem.
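One place worth checking, offered as an assumption rather than a confirmed cause in this repository: the capsule squash nonlinearity divides by the vector norm, so an all-zero capsule produces 0/0. The sketch below is plain Python for illustration only; `squash_naive`, `squash_stable`, and `eps` are hypothetical names, not taken from the linked code. In PyTorch the same division would yield `nan` instead of raising an exception.

```python
import math

def squash_naive(s):
    """Squash as commonly written: (|s|^2 / (1 + |s|^2)) * s / |s|."""
    norm_sq = sum(x * x for x in s)
    norm = math.sqrt(norm_sq)
    scale = norm_sq / (1.0 + norm_sq)
    # Divides by zero when every component of s is zero.
    return [scale * x / norm for x in s]

def squash_stable(s, eps=1e-8):
    """Same squash, with a small eps (assumed value) inside the square root."""
    norm_sq = sum(x * x for x in s)
    scale = norm_sq / (1.0 + norm_sq)
    return [scale * x / math.sqrt(norm_sq + eps) for x in s]

if __name__ == "__main__":
    print(squash_stable([0.0, 0.0]))   # stays finite for a zero vector
    print(squash_stable([3.0, 4.0]))   # direction kept, length below 1
```

If the squash in the model has no such epsilon, adding one is a cheap experiment before looking at gradient clipping or the learning rate.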

Printed output:

Validation Loss decreased (0.2539 → nan). Saving Checkpoint Model …

Training [46%] Train Loss:0.1899 Val Loss:nan Train Accuracy:73.73% Val Accuracy:62.80%
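The log above shows a checkpoint being saved even though the validation loss is `nan`. A minimal sketch of a guard against that, assuming the training loop keeps track of the best validation loss so far; `should_save_checkpoint` and its arguments are hypothetical names, not the ones used in the repository:

```python
import math

def should_save_checkpoint(val_loss, best_val_loss):
    """Return True only for a finite validation loss that improves on the best."""
    if not math.isfinite(val_loss):
        # nan or inf: never treat this as an improvement.
        return False
    return val_loss < best_val_loss

if __name__ == "__main__":
    best = float("inf")
    for epoch_loss in [0.31, 0.2539, float("nan")]:
        if should_save_checkpoint(epoch_loss, best):
            best = epoch_loss
            print(f"saving checkpoint at val loss {epoch_loss:.4f}")
        else:
            print(f"skipping checkpoint at val loss {epoch_loss}")
```

With a check like this the last good checkpoint is kept, which also makes it easier to reload the model from just before the loss became `nan` and inspect it.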

This is the architecture:

| Layer (type:depth-idx) | Output Shape | Param # |
| --- | --- | --- |
| CapsNet | [128, 4, 16, 1] | -- |
| ├─ConvLayer: 1-1 | [128, 256, 24, 24] | -- |
| │ └─Conv2d: 2-1 | [128, 256, 24, 24] | 20,992 |
| ├─PrimaryCaps: 1-2 | [128, 2048, 8] | -- |
| │ └─ModuleList: 2-2 | -- | -- |
| │ │ └─Conv2d: 3-1 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-2 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-3 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-4 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-5 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-6 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-7 | [128, 32, 8, 8] | 663,584 |
| │ │ └─Conv2d: 3-8 | [128, 32, 8, 8] | 663,584 |
| ├─DigitCaps: 1-3 | [128, 4, 16, 1] | 1,048,576 |
| ├─Decoder: 1-4 | [128, 1, 32, 32] | -- |
| │ └─Linear: 2-3 | [128, 1024] | 66,560 |
| │ └─Linear: 2-4 | [128, 2048] | 2,099,200 |
| │ └─Linear: 2-5 | [128, 1024] | 2,098,176 |
| │ └─Sigmoid: 2-6 | [128, 1024] | -- |

Total params: 10,642,176

Trainable params: 10,642,176

Non-trainable params: 0

Total mult-adds (Units.GIGABYTES): 45.58

Input size (MB): 0.52

Forward/backward pass size (MB): 172.03

Params size (MB): 42.57

Estimated Total Size (MB): 215.13
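The parameter counts in the summary can be cross-checked by hand. The sketch below assumes 9x9 kernels with biases, a single-channel 32x32 input, and a bias-free DigitCaps weight of shape 2048 x 4 x 16 x 8. These values are inferred from the counts and output shapes in the summary, not stated in the post, so treat them as assumptions.

```python
def conv2d_params(in_ch, out_ch, kernel):
    """Weights plus one bias per output channel for a square kernel."""
    return out_ch * in_ch * kernel * kernel + out_ch

def linear_params(in_features, out_features):
    """Weights plus one bias per output feature."""
    return in_features * out_features + out_features

# Assumed: 1 input channel, 9x9 kernel (32x32 input -> 24x24 output).
conv1 = conv2d_params(1, 256, 9)
# Assumed: 8 parallel 9x9 convolutions, 256 -> 32 channels each.
primary = 8 * conv2d_params(256, 32, 9)
# Assumed: one 16x8 transform per (input capsule, class) pair, no bias.
digit = 2048 * 4 * 16 * 8
# Decoder input is the 4 class capsules of 16 dimensions each, flattened to 64.
decoder = (linear_params(4 * 16, 1024)
           + linear_params(1024, 2048)
           + linear_params(2048, 1024))

total = conv1 + primary + digit + decoder

if __name__ == "__main__":
    print(conv1, primary, digit, decoder, total)
```

Under these assumptions the total matches the 10,642,176 trainable parameters reported above, which suggests the layer shapes are wired as intended and the NaN issue lies elsewhere.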

My GitHub link is: https://github.com/contepablod/QCapsNet

Thanks!