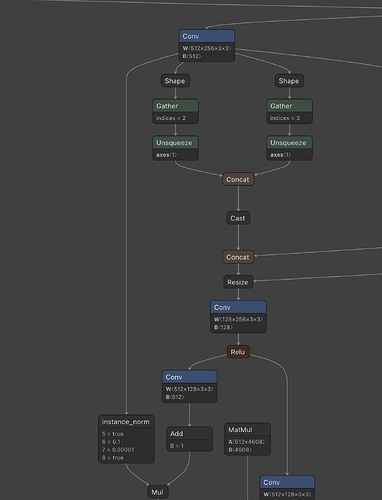

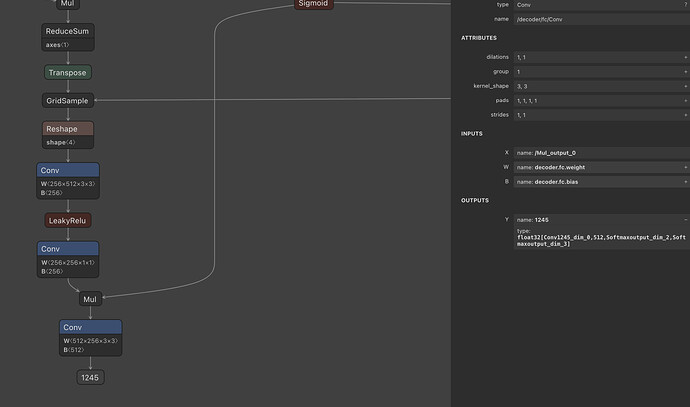

I am trying to export a model built from code like this to ONNX format.

```python
def __init__(self):
    ...
    self.conv = nn.Conv2d(c_in, c_out, 3, 1, 1)
    self.norm = nn.InstanceNorm2d(c_out)
    ...

def forward(self, x):
    ...
    x = self.norm(self.conv(x))
    ...
```

I would expect the output of `self.conv(x)` to have shape `(*, c_out, *, *)`.
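In eager mode the channel dimension is indeed fixed by the convolution. A quick check, using made-up sizes (`c_in=3`, `c_out=16`, a 2×3×32×32 input) since the post does not give them:

```python
import torch
import torch.nn as nn

# Hypothetical sizes; the real c_in / c_out are not given in the post.
c_in, c_out = 3, 16

conv = nn.Conv2d(c_in, c_out, 3, 1, 1)  # kernel 3, stride 1, padding 1
norm = nn.InstanceNorm2d(c_out)

x = torch.randn(2, c_in, 32, 32)
y = conv(x)
# Channel dim becomes c_out; kernel 3 / stride 1 / padding 1 keeps H and W unchanged.
print(tuple(y.shape))  # (2, 16, 32, 32)
print(tuple(norm(y).shape))  # (2, 16, 32, 32)
```

So the `Float(*, *, *, *)` in the exported graph presumably means the exporter's shape inference lost the static sizes somewhere upstream (possibly in the elided operations), not that the convolution's output channel count is genuinely undetermined.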

However, exporting this model raises the following error:

```
torch.onnx.errors.SymbolicValueError: Unsupported: ONNX export of instance_norm for unknown channel size.
```
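If I read the TorchScript-based exporter's `instance_norm` symbolic correctly, the channel size is only needed when the module has no `weight`/`bias` (the default `affine=False`): the ONNX `InstanceNormalization` operator requires `scale` and `B` inputs, so the exporter has to build constant all-ones/all-zeros tensors of length C itself. A commonly suggested workaround is therefore `affine=True`. The sketch below (hypothetical `c_out = 16`) only shows, in eager mode, that a freshly initialised affine module computes the same result as the plain one; it does not run the export:

```python
import torch
import torch.nn as nn

c_out = 16  # hypothetical channel count

# Default InstanceNorm2d: affine=False, so the module has no weight/bias tensors.
plain = nn.InstanceNorm2d(c_out)
# affine=True adds a per-channel weight (initialised to 1) and bias (initialised to 0).
affine = nn.InstanceNorm2d(c_out, affine=True)

print(plain.weight, affine.weight.shape)  # None torch.Size([16])

x = torch.randn(2, c_out, 8, 8)
# With the default initialisation (scale 1, shift 0) both modules give the same output.
with torch.no_grad():
    same = torch.allclose(plain(x), affine(x), atol=1e-6)
print(same)  # True
```

When loading a checkpoint trained with `affine=False` into such a module, the new `weight`/`bias` entries would be reported as missing keys, so `load_state_dict(..., strict=False)` would be needed; they then keep their 1/0 initial values.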

Shouldn't the channel dimension be known as soon as the tensor passes through `self.conv`, regardless of how the preceding operations are configured? Yet `torch.onnx.export` infers the output of `self.conv` as `Float(*, *, *, *)`. How can I solve this?
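Another possible workaround, which should not depend on what shape information survives export, is to write the normalisation out by hand. This is a minimal sketch assuming the default `InstanceNorm2d` settings (`affine=False`, `track_running_stats=False`, `eps=1e-5`); `ManualInstanceNorm2d` is a hypothetical name, not a PyTorch class. It only uses mean, subtraction, multiplication, `sqrt`, and division, so no channel-sized constant has to be created at export time (not verified against every opset):

```python
import torch
import torch.nn as nn


class ManualInstanceNorm2d(nn.Module):
    """Hypothetical stand-in for nn.InstanceNorm2d(affine=False, track_running_stats=False).

    Normalises each (sample, channel) plane over H and W with the biased
    variance, as InstanceNorm2d does, using only elementwise ops and means.
    """

    def __init__(self, eps: float = 1e-5):
        super().__init__()
        self.eps = eps

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Per-sample, per-channel mean over the spatial dims (H, W).
        mean = x.mean(dim=(2, 3), keepdim=True)
        centered = x - mean
        # Biased variance (divide by H*W), matching InstanceNorm2d.
        var = (centered * centered).mean(dim=(2, 3), keepdim=True)
        return centered / torch.sqrt(var + self.eps)


x = torch.randn(2, 16, 32, 32)
reference = nn.InstanceNorm2d(16)(x)
manual = ManualInstanceNorm2d()(x)
print(torch.allclose(reference, manual, atol=1e-5))
```

In the model it would simply replace `self.norm = nn.InstanceNorm2d(c_out)`; since it has no parameters, existing `affine=False` checkpoints would load unchanged.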